- 9 Command Line Tools for Browsing Websites and Downloading Files in Linux

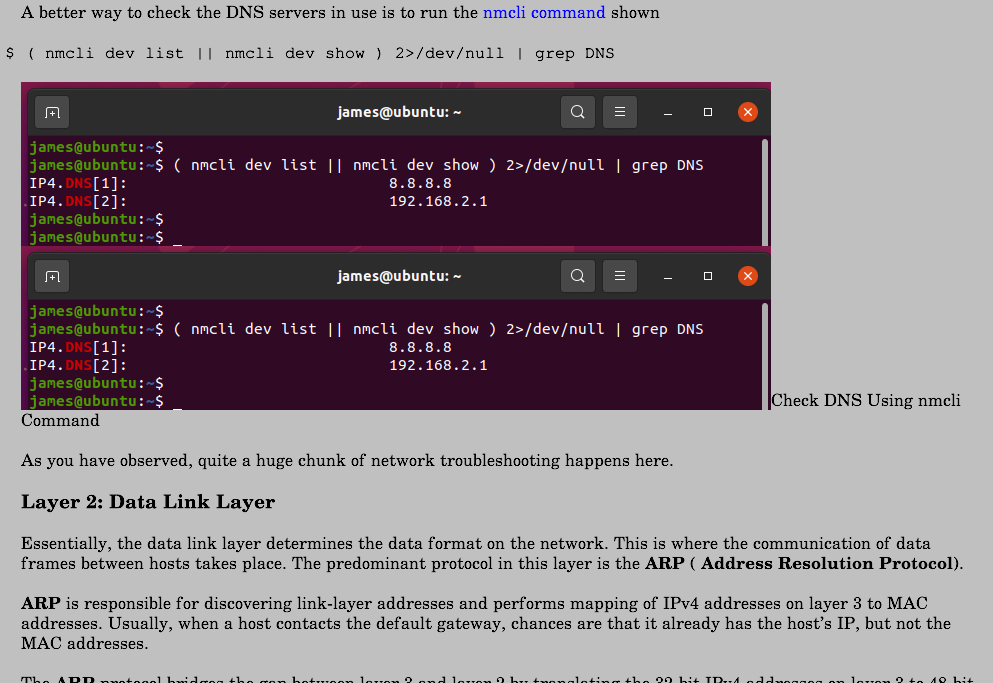

- 1. links

- 2. links2

- 3. lynx

- 4. youtube-dl

- 5. fetch

- 6. Axel

- 7. aria2

- 8. w3m

- 9. Browsh

- 2 Ways to Download Files From Linux Terminal

- Download files from Linux terminal using wget command

- Installing wget

- Download a file or webpage using wget

- Download files with a different name using wget

- Download a folder using wget

- Download an entire website using wget

- Bonus Tip: Resume incomplete downloads

- Download files from Linux command line using curl

- Installing curl

- Download files or webpage using curl

- Download files with a different name

- Pause and resume download with curl

9 Command Line Tools for Browsing Websites and Downloading Files in Linux

In the last article, we covered a few useful tools such as rTorrent, wget, cURL, w3m, and Elinks. Many readers asked us to cover other tools of the same kind. If you missed the first part, you can go through it.

This article introduces several other Linux command-line browsing and downloading applications, which will help you browse websites and download files from within the Linux shell.

1. links

Links is an open-source web browser written in the C programming language. It is available for all major platforms, namely Linux, Windows, OS X, and OS/2.

This browser has both a text-based and a graphical mode. The text-based links browser is shipped by default with most standard Linux distributions. If links is not installed on your system, you can install it from the repositories. Elinks is a fork of links.

$ sudo apt install links        # on Debian, Ubuntu, & Mint
$ sudo dnf install links        # on Fedora, CentOS & RHEL
$ sudo pacman -S links          # on Arch and Manjaro
$ sudo zypper install links     # on openSUSE

After installing links, you can browse any website within the terminal, as shown in the screencast below.

Use the Up and Down arrow keys to navigate. Press the Right arrow key on a link to follow it, and the Left arrow key to go back to the previous page. To quit, press q.

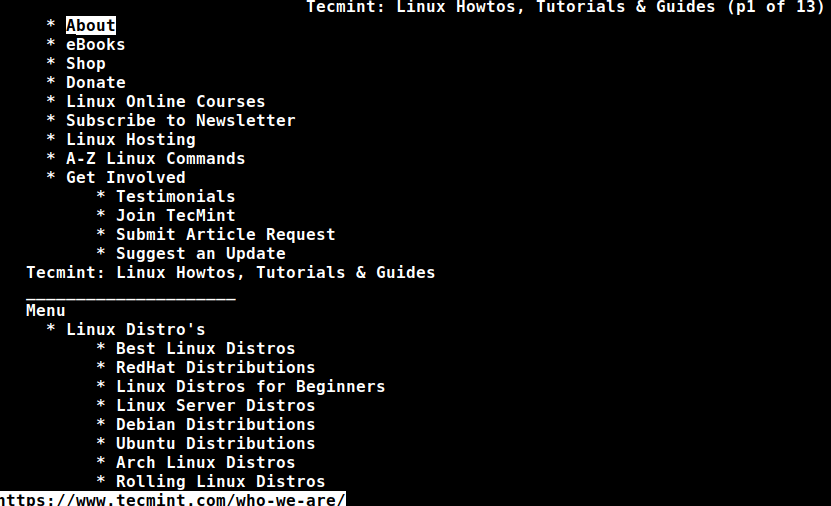

Here is how Tecmint looks when accessed with the links tool.

If you are interested in installing the GUI of links, you may need to download the latest source tarball (i.e. version 2.22) from http://links.twibright.com/download/.

Alternatively, you can download the tarball with wget, then build and install it as follows.

$ wget http://links.twibright.com/download/links-2.22.tar.gz
$ tar -xvf links-2.22.tar.gz
$ cd links-2.22
$ ./configure --enable-graphics
$ make
$ sudo make install

Note: To compile the package successfully, you need the following installed: libpng, libjpeg, the TIFF library, SVGAlib, XFree86, a C compiler, and make.

2. links2

Links2 is the graphical version of the Twibright Labs Links web browser, with support for the mouse and clicking. It is designed for speed: it has no CSS support, but offers fairly good HTML support and limited JavaScript support.

To install links2 on Linux:

$ sudo apt install links2        # on Debian, Ubuntu, & Mint
$ sudo dnf install links2        # on Fedora, CentOS & RHEL
$ sudo pacman -S links2          # on Arch and Manjaro
$ sudo zypper install links2     # on openSUSE

links2 starts in text mode by default. To start it in graphical mode, which also displays images, add the -g option.

$ links2 tecmint.com        # text mode
$ links2 -g tecmint.com     # graphical mode

3. lynx

Lynx is a text-based web browser released under the GNU GPLv2 license and written in ISO C. It is highly configurable and a lifesaver for many sysadmins. It has the reputation of being the oldest web browser that is still in use and still actively developed.

To install lynx on Linux:

$ sudo apt install lynx        # on Debian, Ubuntu, & Mint
$ sudo dnf install lynx        # on Fedora, CentOS & RHEL
$ sudo pacman -S lynx          # on Arch and Manjaro
$ sudo zypper install lynx     # on openSUSE

After installing lynx, type the following command to browse a website:

$ lynx tecmint.com

4. youtube-dl

youtube-dl is a platform-independent application for downloading videos from YouTube and many other sites. It is written in Python and released into the public domain under the Unlicense, and it works out of the box. (Downloading videos may violate YouTube's terms of service and, depending on where you live, copyright law. Check the rules that apply to you before you start using it.)

To install youtube-dl in Linux:

$ sudo apt install youtube-dl        # on Debian, Ubuntu, & Mint
$ sudo dnf install youtube-dl        # on Fedora, CentOS & RHEL
$ sudo pacman -S youtube-dl          # on Arch and Manjaro
$ sudo zypper install youtube-dl     # on openSUSE

After installing it, pass the URL of a video to youtube-dl to download it from the YouTube site:

$ youtube-dl https://www.youtube.com/watch?v=ql4SEy_4xws
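Beyond the plain download above, youtube-dl can list the formats a site offers (-F), pick one of them (-f), and name the output file from a template (-o). The following is only a minimal sketch of those three options. The function names list_formats and fetch_best are hypothetical helpers, not part of youtube-dl.

```shell
# Hypothetical helpers around youtube-dl; the function names are illustrative.

# Print the formats (resolution, container, codec) available for a video URL.
list_formats() {
    youtube-dl -F "$1"
}

# Download the best single-file format and name the file after the video title.
fetch_best() {
    youtube-dl -f best -o '%(title)s.%(ext)s' "$1"
}
```

Quote the URL when calling either helper, for example `fetch_best 'https://www.youtube.com/watch?v=ql4SEy_4xws'`, so that the shell does not try to interpret characters such as ? and &.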

5. fetch

fetch is a command-line utility for Unix-like operating systems that retrieves files by URL. It supports options such as IPv4-only or IPv6-only addressing, disabling redirects, exiting after a successful retrieval, and retrying failed requests.

Fetch can be downloaded and installed from the link below.

But before you compile and run it, you should install HTTP Fetcher. Download HTTP Fetcher from the link below.

6. Axel

Axel is a command-line download accelerator for Linux. It speeds up a download by opening multiple HTTP or FTP connections, fetching a different chunk of the same file over each one in parallel, and assembling the chunks into a single file.

To install Axel in Linux:

$ sudo apt install axel        # on Debian, Ubuntu, & Mint
$ sudo dnf install axel        # on Fedora, CentOS & RHEL
$ sudo pacman -S axel          # on Arch and Manjaro
$ sudo zypper install axel     # on openSUSE

After axel is installed, you can use the following command to download any given file, as shown in the screencast.

$ axel https://releases.ubuntu.com/20.04.2.0/ubuntu-20.04.2.0-desktop-amd64.iso
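The number of parallel connections described above can be set explicitly with axel's -n option, and -o chooses the name of the saved file. Here is a small sketch under those assumptions. The helper name axel_fetch is hypothetical.

```shell
# Hypothetical helper: download a URL with axel.
#   $1  number of parallel connections (-n)
#   $2  name of the local file to write (-o)
#   $3  URL to download
axel_fetch() {
    axel -n "$1" -o "$2" "$3"
}
```

For example, `axel_fetch 8 ubuntu.iso https://releases.ubuntu.com/20.04.2.0/ubuntu-20.04.2.0-desktop-amd64.iso` would ask for eight connections. More connections do not always mean more speed, since the server or your own link may be the limit.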

7. aria2

aria2 is a lightweight command-line download utility that supports multiple protocols (HTTP, HTTPS, FTP, BitTorrent, and Metalink). It can use Metalink files to download a file, such as an ISO image, from more than one server simultaneously. It can also serve as a BitTorrent client.

To install aria2 in Linux:

$ sudo apt install aria2        # on Debian, Ubuntu, & Mint
$ sudo dnf install aria2        # on Fedora, CentOS & RHEL
$ sudo pacman -S aria2          # on Arch and Manjaro
$ sudo zypper install aria2     # on openSUSE

Once aria2 is installed, you can run the following command to download any given file:

$ aria2c https://releases.ubuntu.com/20.04.2.0/ubuntu-20.04.2.0-desktop-amd64.iso
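Downloading from more than one server does not strictly require a Metalink file: when aria2c is given several URIs on one command line, it treats them as sources for the same file. The sketch below illustrates that, together with the -x (connections per server, 1 to 16) and -s (number of pieces to download in parallel) options. The helper names are hypothetical.

```shell
# Hypothetical helper: fetch one file from two mirrors at once.
# aria2c treats several URIs on one command line as sources for the same file.
aria2_mirrors() {
    aria2c "$1" "$2"
}

# Hypothetical helper: split one download across up to $1 connections.
#   -x  maximum connections per server (aria2c accepts 1 to 16)
#   -s  number of pieces to download in parallel
aria2_split() {
    aria2c -x "$1" -s "$1" "$2"
}
```

Both mirror URLs passed to `aria2_mirrors` must point to identical copies of the file.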

8. w3m

w3m is another open-source text-based web browser that runs in a terminal and is very similar to lynx. It can also be used from within Emacs through emacs-w3m, an Emacs interface for w3m.

To install w3m in Linux:

$ sudo apt install w3m        # on Debian, Ubuntu, & Mint
$ sudo dnf install w3m        # on Fedora, CentOS & RHEL
$ sudo pacman -S w3m          # on Arch and Manjaro
$ sudo zypper install w3m     # on openSUSE

After installing w3m, run the following command to browse a website, as shown below.

$ w3m tecmint.com

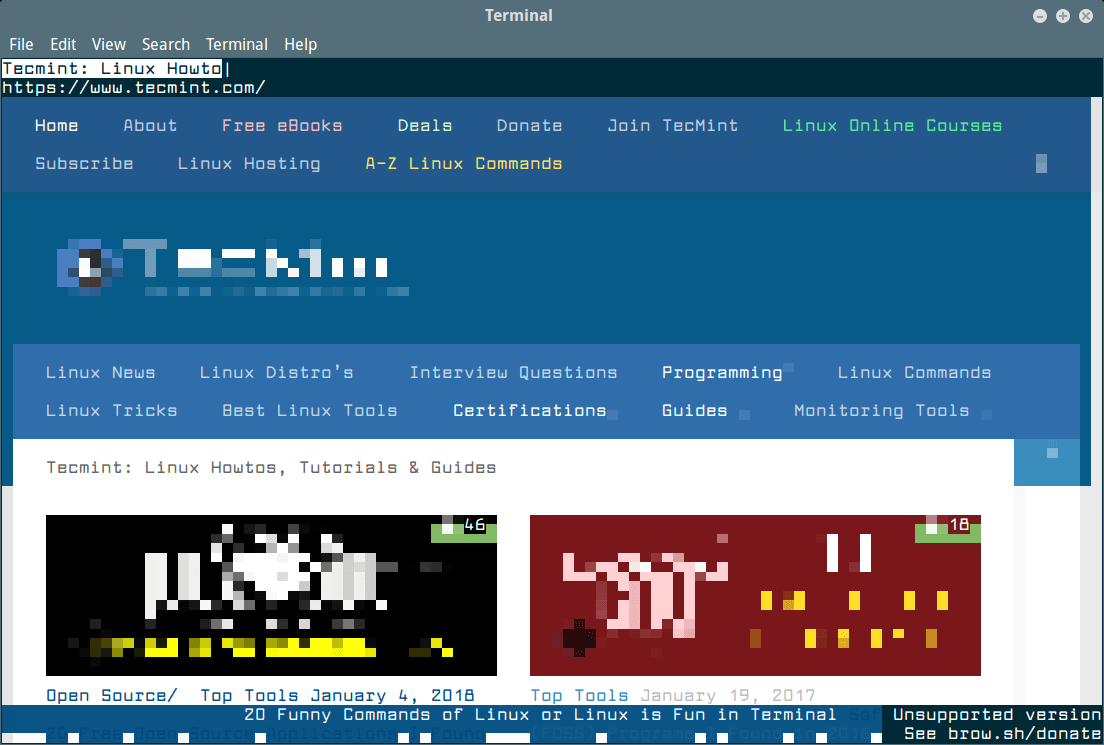

9. Browsh

Browsh is a modern text-based browser that renders anything a modern browser can, including HTML5, CSS3, JS, video, and even WebGL. Its main purpose is to run on a remote server over SSH or Mosh and deliver web pages as text to your terminal, which significantly reduces bandwidth use and speeds up browsing.

In other words, the server downloads the web pages, and only the rendered text travels over the SSH connection, which needs very little bandwidth. Standard text-based browsers, by contrast, lack support for JS and most HTML5 features.

To install Browsh on Linux, you need to download a binary package and install it using the package manager.

That’s all for now. I’ll be back with another interesting topic you will love to read. Until then, stay tuned and connected to Tecmint, and don’t forget to share your valuable feedback in the comments below. Like and share the article to help us spread the word.

2 Ways to Download Files From Linux Terminal

If you are stuck in the Linux terminal, say on a server, how do you download a file?

There is no download command in Linux, but there are a couple of Linux commands for downloading files.

In this terminal trick, you’ll learn two ways to download files using the command line in Linux.

I am using Ubuntu here but apart from the installation, the rest of the commands are equally valid for all other Linux distributions.

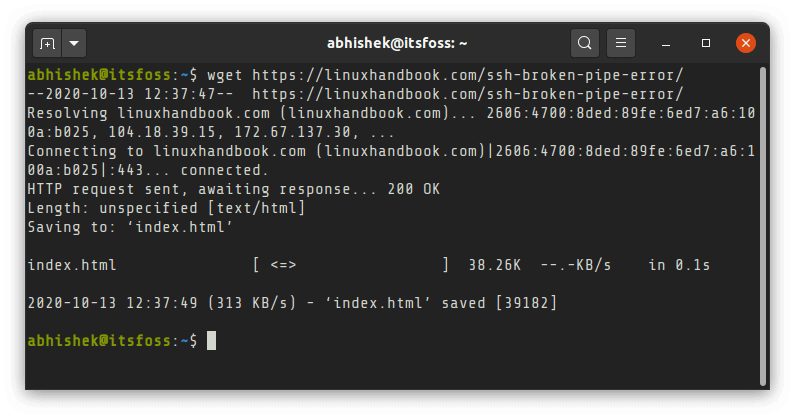

Download files from Linux terminal using wget command

wget is perhaps the most used command line download manager for Linux and UNIX-like systems. You can download a single file, multiple files, an entire directory, or even an entire website using wget.

wget is non-interactive and can easily work in the background. This means you can use it in scripts, or even build tools on top of it, as the uGet download manager does.

Let’s see how to use wget to download files from the terminal.

Installing wget

Most Linux distributions come with wget preinstalled. It is also available in the repository of most distributions and you can easily install it using your distribution’s package manager.

On Ubuntu and Debian based distributions, you can use the apt package manager command:

sudo apt install wget

Download a file or webpage using wget

You just need to provide the URL of the file or webpage, as in wget URL. It will download the file with its original name into the directory you are in.

To download multiple files, you’ll have to save their URLs in a text file and provide that text file as input to wget like this:
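A minimal sketch of the list-file approach: the file holds one URL per line and is passed to wget with the -i option. The file name download_files.txt, the example.com URLs, and the helper name wget_list are all placeholders chosen for illustration.

```shell
# The URLs below are placeholders; replace them with real download links.
cat > download_files.txt <<'EOF'
https://example.com/file1.zip
https://example.com/file2.zip
EOF

# Hypothetical helper: hand a list file to wget with -i (one URL per line).
wget_list() {
    wget -i "$1"
}
```

Running `wget_list download_files.txt` would then fetch each listed file in turn, saving every one under its original name.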

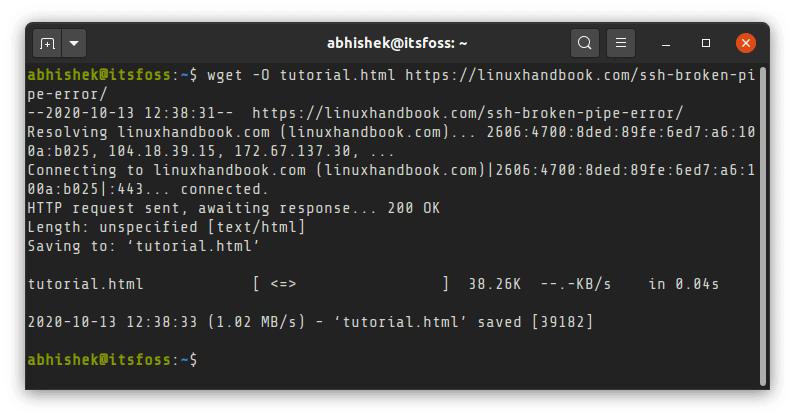

Download files with a different name using wget

You’ll notice that a webpage is almost always saved as index.html with wget. It is a good idea to give the downloaded file a custom name.

You can use the -O (uppercase O) option to provide the output filename while downloading.
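As a small sketch, the output name goes right after -O and the URL follows it. The helper name wget_save_as is hypothetical.

```shell
# Hypothetical helper: save a URL under a name of your choosing.
#   $1  name of the local file to write (passed to -O)
#   $2  URL to download
wget_save_as() {
    wget -O "$1" "$2"
}
```

For instance, `wget_save_as homepage.html https://example.com` would store the page as homepage.html instead of index.html.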

Download a folder using wget

Suppose you are browsing an FTP server and need to download an entire directory. You can use the recursive option -r:

wget -r ftp://server-address.com/directory

Download an entire website using wget

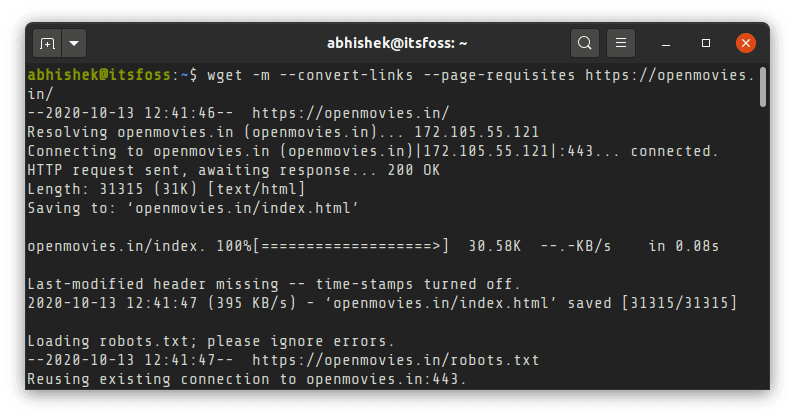

Yes, you can totally do that. You can mirror an entire website with wget. By downloading an entire website I mean the entire public facing website structure.

While you can use the mirror option -m directly, it is a good idea to add:

- --convert-links: converts links so that internal links point to the downloaded resources instead of the web

- --page-requisites: downloads additional resources such as style sheets so that the pages look better offline

wget -m --convert-links --page-requisites website_address

Bonus Tip: Resume incomplete downloads

If you aborted the download by pressing Ctrl+C for some reason, you can resume it with the -c option.
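Because -c picks up where the partial file ends, it also lends itself to a simple retry loop on a flaky connection. The following is only a sketch of that idea, not something wget requires. The helper name wget_until_done and the default five-second pause are assumptions.

```shell
# Hypothetical helper: keep resuming a download until wget exits successfully.
#   $1  URL to download
#   $2  seconds to wait between attempts (optional, defaults to 5)
wget_until_done() {
    until wget -c "$1"; do
        echo "Download interrupted, resuming in ${2:-5} seconds..." >&2
        sleep "${2:-5}"
    done
}
```

Each pass runs `wget -c` on the same URL, so the data already on disk is kept and only the missing part is requested again.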

Download files from Linux command line using curl

Like wget, curl is one of the most popular commands for downloading files in the Linux terminal. curl can be used in many ways, but I’ll focus only on simple downloading here.

Installing curl

Though curl may not come preinstalled on every distribution, it is available in the official repositories of most of them. You can use your distribution’s package manager to install it.

To install curl on Ubuntu and other Debian based distributions, use the following command:

sudo apt install curl

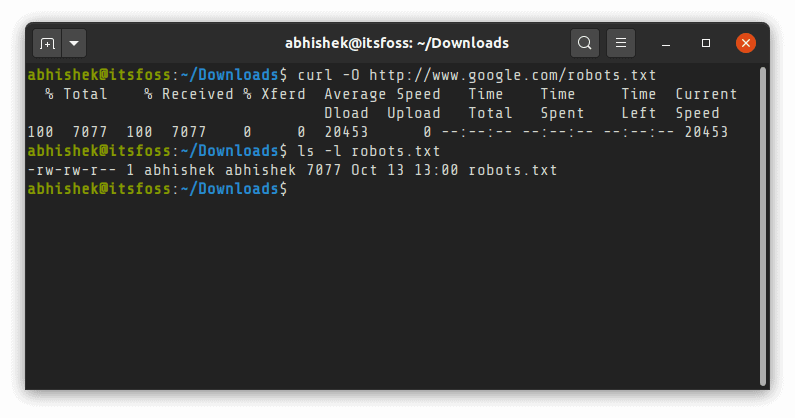

Download files or webpage using curl

If you use curl without any option with a URL, it will read the file and print it on the terminal screen.

To download a file using the curl command in the Linux terminal, you’ll have to use the -O (uppercase O) option, as in curl -O URL.

Downloading multiple files with curl is also possible: specify multiple URLs on one command line, each preceded by its own -O. A URL without a -O of its own is printed to the terminal instead of being saved.

Keep in mind that curl is not as simple as wget. While wget saves webpages as index.html, curl -O will complain that the remote file has no name when the URL is a webpage. You’ll have to save it with a custom name as described in the next section.
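Here is a minimal sketch of a two-file download in which every URL carries its own -O, so both are saved under their remote names. The helper name curl_fetch_two is hypothetical.

```shell
# Hypothetical helper: download two URLs, keeping their remote file names.
# Each URL gets its own -O; a URL without one would be printed to the
# terminal instead of being saved.
curl_fetch_two() {
    curl -O "$1" -O "$2"
}
```

curl also has a --remote-name-all option that applies -O to every URL on the command line, which is handier when the list is long.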

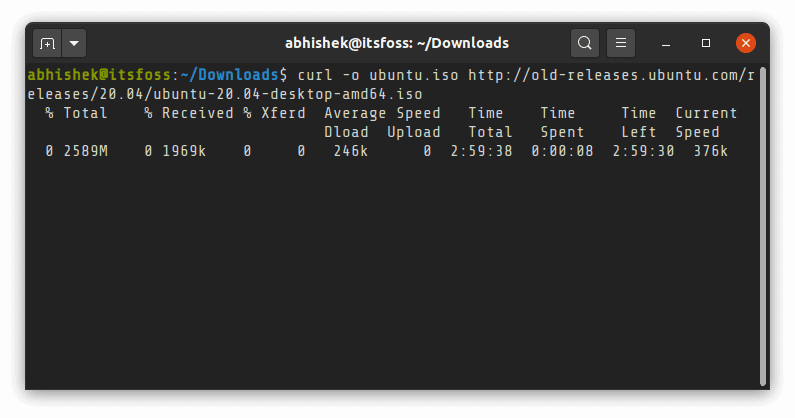

Download files with a different name

It could be confusing, but to provide a custom name for the downloaded file (instead of the original source name), you’ll have to use the -o (lowercase o) option.

Sometimes curl won’t download the file as you expect it to. You’ll have to use the -L option (for location) to download it correctly. This is because the link sometimes redirects to another link, and with -L curl follows the redirects to the final one.
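The two options combine naturally, as in this small sketch: -L follows any redirects and -o names the saved file. The helper name curl_save_as is hypothetical.

```shell
# Hypothetical helper: follow redirects and save under a chosen name.
#   $1  name of the local file to write (passed to -o, lowercase)
#   $2  URL to download
curl_save_as() {
    curl -L -o "$1" "$2"
}
```

For instance, `curl_save_as homepage.html https://example.com` would save the page as homepage.html even if the address redirects elsewhere first.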

Pause and resume download with curl

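Like wget, you can also resume an interrupted download with curl. Use the -C option (uppercase C) followed by a dash, which tells curl to work out where to resume from. Note that lowercase -c is a different curl option that writes cookies to a file.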
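A minimal sketch of a resumed download with curl's -C option, where the lone dash after -C asks curl to work out the offset from the partial file already on disk. The helper name curl_resume is hypothetical.

```shell
# Hypothetical helper: resume a download that was saved under its remote name.
#   -C -  continue from wherever the existing partial file ends
#   -O    write to a file named like the remote file
curl_resume() {
    curl -C - -O "$1"
}
```

Run it from the directory that holds the partial file, with the same URL as the original download.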

As always, there are multiple ways to do the same thing in Linux. Downloading files from the terminal is no different.

wget and curl are just two of the most popular commands for downloading files in Linux. There are more such command line tools. Terminal-based web browsers like elinks and w3m can also be used for downloading files from the command line.

Personally, for a simple download, I prefer using wget over curl. It is simpler and less confusing because you may have a difficult time figuring out why curl could not download a file in the expected format.

Your feedback and suggestions are welcome.