Fastest Linux Filesystem on Shingled Disks

There is considerable interest in shingled drives. These put data tracks so close together that you can’t write to one track without clobbering the next. This may increase capacity by 20% or so, but results in write amplification problems. There is work underway on filesystems optimised for shingled drives; for example, see: https://lwn.net/Articles/591782/

Some shingled disks, such as the Seagate 8TB Archive, have a cache area for random writes, allowing decent performance on generic filesystems. The disk can even be quite fast on some common workloads, with writes of up to around 200MB/sec. However, it is to be expected that if the random write cache overflows, performance may suffer. Presumably, some filesystems are better at avoiding random writes in general, or at avoiding the patterns of random writes likely to overflow the write cache found in such drives. Is a mainstream filesystem in the Linux kernel better at avoiding the performance penalty of shingled disks than ext4?

There are two types of shingled disks on the market right now: those that need a supported OS, like the HGST 10TB disks, and those that do not need specific OS support, like the Seagate 8TB Archive. Which are you referring to?

SMR as implemented in current drives does not result in «write amplification problems like SSDs». These drives behave like SSDs in only a very few, vague ways.

2 Answers

Intuitively, copy-on-write and log-structured filesystems might give better performance on shingled disks by reducing random writes. The benchmarks somewhat support this; however, these differences in performance are not specific to shingled disks. They also occur on an unshingled disk used as a control. Thus switching to a shingled disk might not have much bearing on your choice of filesystem.
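The intuition can be illustrated with a toy model. This is a sketch, not a measurement of any real filesystem: the zone size, file size and function names are all hypothetical. It compares where 1000 random block updates land when a file is updated in place versus when every write is appended at a log head, counting non-sequential writes and distinct zones touched. It deliberately ignores the cost of cleaning or garbage-collecting the log, which is exactly what hurts nilfs2 once its cleaner has to run.

```python
import random

ZONE_BLOCKS = 256        # hypothetical zone (band) size in blocks, toy value
FILE_BLOCKS = 1_000_000  # hypothetical file size in blocks

def in_place(updates):
    """Update-in-place: a rewritten block goes back to its fixed home address."""
    return list(updates)

def log_structured(updates, log_head=FILE_BLOCKS):
    """CoW/log-structured: every write goes to the next free address at the log head."""
    return [log_head + i for i in range(len(updates))]

def non_sequential(addresses):
    """Count writes that do not land right after the previous write."""
    return sum(1 for prev, cur in zip(addresses, addresses[1:]) if cur != prev + 1)

def zones_touched(addresses):
    """Distinct zones written; on a drive-managed SMR disk each one may
    eventually have to be rewritten as a whole."""
    return len({addr // ZONE_BLOCKS for addr in addresses})

rng = random.Random(1)
updates = [rng.randrange(FILE_BLOCKS) for _ in range(1000)]  # 1000 random overwrites

for name, addrs in (("in-place", in_place(updates)),
                    ("log-structured", log_structured(updates))):
    print(f"{name:15} non-sequential={non_sequential(addrs):4} zones={zones_touched(addrs)}")
```

The log-structured variant writes everything sequentially into a handful of zones, while the in-place variant scatters its writes across hundreds of zones.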

The nilfs2 filesystem gave quite good performance on the SMR disk. However, this was because I allocated the whole 8TB partition and the benchmark only wrote ~0.5TB, so the nilfs cleaner did not have to run. When I limited the partition to 200GB, the nilfs benchmarks did not even complete successfully. Nilfs2 may be a good choice performance-wise if you really use the «archive» disk as an archive disk, where you keep all the data and snapshots written to the disk forever, as then the nilfs cleaner does not have to run.
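The arithmetic behind the cleaner argument is simple: a log-structured filesystem only needs its cleaner once the total data written exceeds the space it can append into. A minimal sketch using the ~0.5TB written and the two partition sizes above; it ignores metadata overhead and any space nilfs2 reserves, and in practice the cleaner may start well before the partition is completely full.

```python
TB = 10**12
GB = 10**9

def log_passes(bytes_written, partition_bytes):
    """How many times the written data would have filled the partition if no
    space were ever reclaimed; above 1.0 a cleaner must have freed segments."""
    return bytes_written / partition_bytes

WRITTEN = 0.5 * TB  # roughly what the benchmark wrote
for label, size in (("8TB", 8 * TB), ("200GB", 200 * GB)):
    passes = log_passes(WRITTEN, size)
    print(f"{label}: {passes:g} passes, cleaner needed: {passes > 1}")
```

On the full 8TB partition the log is only 1/16 used, while on 200GB it would have wrapped 2.5 times.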

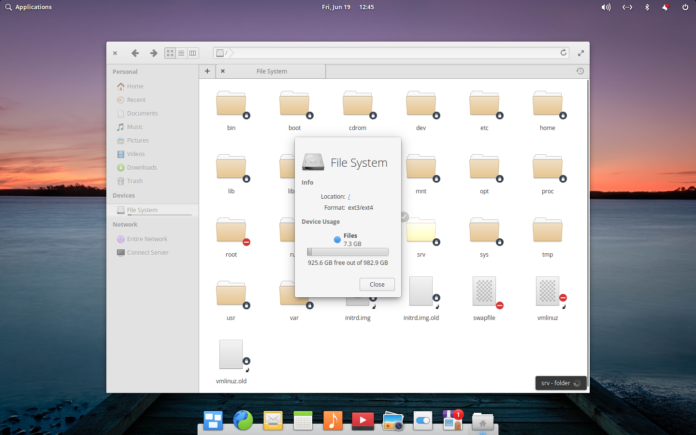

I understand that the 8TB Seagate ST8000AS0002-1NA17Z drive I used for the test has a ~20GB cache area. I changed the default filebench fileserver settings so that the benchmark set would be ~125GB, larger than the unshingled cache area:

```
set $meanfilesize=1310720
set $nfiles=100000
run 36000
```

Now for the actual data. The number of ops measures the «overall» fileserver performance, while the ms/op measures the latency of the random append and could be used as a rough guide to the performance of random writes.
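As a check on the ~125GB figure: 1310720 bytes is exactly 1.25 MiB, so 100000 files of that mean size come to 125,000 MiB, which is presumably where the ~125GB comes from. A small sketch of the arithmetic, treating $meanfilesize as an exact average (filebench draws individual file sizes around it, so the real total varies somewhat) and assuming the ~20GB cache means decimal gigabytes:

```python
MEAN_FILE_SIZE = 1310720   # $meanfilesize in bytes (1.25 MiB)
NFILES = 100000            # $nfiles
CACHE_BYTES = 20 * 10**9   # assumption: the ~20GB cache, in decimal gigabytes

fileset_bytes = MEAN_FILE_SIZE * NFILES
print(f"{fileset_bytes / 2**20:,.0f} MiB")   # mebibytes
print(f"{fileset_bytes / 10**9:.1f} GB")     # decimal gigabytes
print(f"{fileset_bytes / 2**30:.1f} GiB")    # gibibytes
print(f"{fileset_bytes / CACHE_BYTES:.1f}x the cache")
```

This prints 125,000 MiB, about 131.1 GB or 122.1 GiB, roughly 6.6 times the assumed cache size.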

```
$ grep rand *0.out | sed s/.0.out:/\ / |sed 's/ - /-/g' | column -t
SMR8TB.nilfs   appendfilerand1  292176ops  8ops/s   0.1mb/s  1575.7ms/op  95884us/op-cpu  [0ms-7169ms]
SMR.btrfs      appendfilerand1  214418ops  6ops/s   0.0mb/s  1780.7ms/op  47361us/op-cpu  [0ms-20242ms]
SMR.ext4       appendfilerand1  172668ops  5ops/s   0.0mb/s  1328.6ms/op  25836us/op-cpu  [0ms-31373ms]
SMR.xfs        appendfilerand1  149254ops  4ops/s   0.0mb/s  669.9ms/op   19367us/op-cpu  [0ms-19994ms]
Toshiba.btrfs  appendfilerand1  634755ops  18ops/s  0.1mb/s  652.5ms/op   62758us/op-cpu  [0ms-5219ms]
Toshiba.ext4   appendfilerand1  466044ops  13ops/s  0.1mb/s  270.6ms/op   23689us/op-cpu  [0ms-4239ms]
Toshiba.xfs    appendfilerand1  368670ops  10ops/s  0.1mb/s  195.6ms/op   19084us/op-cpu  [0ms-2994ms]
```

Since the Seagate is 5980RPM, one might naively expect the Toshiba to be 20% faster. These benchmarks show it as being roughly 2.5 to 3 times (150 to 200%) faster in total ops, so they are hitting the shingled performance penalty. We see that the shingled (SMR) disk still can’t match the performance of ext4 on an unshingled (PMR) disk. The best performance in total ops was with nilfs2 on an 8TB partition (so the cleaner didn’t need to run), but even then it was significantly slower than the Toshiba with ext4.
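The headline comparison can be recomputed from the filebench output. This sketch parses the `column -t` lines into (ops, ms/op) pairs and divides the Toshiba's op count by the SMR disk's for each filesystem. The 7200 RPM figure for the Toshiba is an assumption (the text only implies it through the naive 20% expectation), `parse` is a hypothetical helper, and `str.removesuffix` needs Python 3.9 or later.

```python
RESULTS = """\
SMR8TB.nilfs   appendfilerand1  292176ops  8ops/s   0.1mb/s  1575.7ms/op  95884us/op-cpu  [0ms-7169ms]
SMR.btrfs      appendfilerand1  214418ops  6ops/s   0.0mb/s  1780.7ms/op  47361us/op-cpu  [0ms-20242ms]
SMR.ext4       appendfilerand1  172668ops  5ops/s   0.0mb/s  1328.6ms/op  25836us/op-cpu  [0ms-31373ms]
SMR.xfs        appendfilerand1  149254ops  4ops/s   0.0mb/s  669.9ms/op   19367us/op-cpu  [0ms-19994ms]
Toshiba.btrfs  appendfilerand1  634755ops  18ops/s  0.1mb/s  652.5ms/op   62758us/op-cpu  [0ms-5219ms]
Toshiba.ext4   appendfilerand1  466044ops  13ops/s  0.1mb/s  270.6ms/op   23689us/op-cpu  [0ms-4239ms]
Toshiba.xfs    appendfilerand1  368670ops  10ops/s  0.1mb/s  195.6ms/op   19084us/op-cpu  [0ms-2994ms]
"""

def parse(text):
    """Map 'disk.fs' -> (total ops, ms per random append) from the column -t output."""
    table = {}
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        ops = int(fields[2].removesuffix("ops"))
        ms_per_op = float(fields[5].removesuffix("ms/op"))
        table[fields[0]] = (ops, ms_per_op)
    return table

results = parse(RESULTS)
NAIVE_SPEEDUP = 7200 / 5980  # assumption: the Toshiba spins at 7200 RPM
print(f"naive RPM-based expectation: {NAIVE_SPEEDUP:.2f}x")
for fs in ("btrfs", "ext4", "xfs"):
    ratio = results[f"Toshiba.{fs}"][0] / results[f"SMR.{fs}"][0]
    print(f"{fs}: Toshiba does {ratio:.2f}x the ops of the SMR disk")
```

With these numbers the ratios come out at about 2.96 (btrfs), 2.70 (ext4) and 2.47 (xfs), against a naive expectation of about 1.20.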

To make the benchmarks above clearer, it might help to normalise them relative to the performance of ext4 on each disk:

| Disk.filesystem | ops | randappend |
|---|---|---|
| SMR.btrfs | 1.24 | 0.74 |
| SMR.ext4 | 1 | 1 |
| SMR.xfs | 0.86 | 1.98 |
| Toshiba.btrfs | 1.36 | 0.41 |
| Toshiba.ext4 | 1 | 1 |
| Toshiba.xfs | 0.79 | 1.38 |

In the randappend column, higher is better: it is ext4’s ms/op divided by the filesystem’s ms/op.

We see that on the SMR disk btrfs keeps most of the advantage in overall ops over ext4 that it has on the Toshiba (1.24 vs 1.36), but its penalty on random appends is not as dramatic as a ratio (0.74 vs 0.41). This might lead one to move to btrfs on the SMR disk. On the other hand, if you need low-latency random appends, this benchmark suggests you want xfs, especially on SMR (1.98 vs 1.38). We see that while SMR vs PMR might influence your choice of filesystem, considering the workload you are optimising for seems more important.
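The normalised table can be reproduced from the raw filebench numbers. Judging by the values, the ops column is each filesystem’s op count divided by ext4’s on the same disk, randappend is ext4’s ms/op divided by the filesystem’s ms/op, and the figures appear to be truncated rather than rounded to two decimals (rounding would give 0.75 for SMR.btrfs). That reading is inferred from the numbers rather than stated by the author; a sketch:

```python
import math

# (total ops, ms per random append) copied from the filebench results
RAW = {
    "SMR":     {"btrfs": (214418, 1780.7), "ext4": (172668, 1328.6), "xfs": (149254, 669.9)},
    "Toshiba": {"btrfs": (634755, 652.5),  "ext4": (466044, 270.6),  "xfs": (368670, 195.6)},
}

def truncate2(x):
    """Cut (not round) to two decimals, which matches the published figures."""
    return math.floor(x * 100) / 100

def normalise(disk):
    """Return fs -> (ops relative to ext4, random-append speed relative to ext4)."""
    base_ops, base_ms = RAW[disk]["ext4"]
    out = {}
    for fs, (ops, ms) in RAW[disk].items():
        # Both columns are "higher is better": more ops, or lower latency than ext4.
        out[fs] = (truncate2(ops / base_ops), truncate2(base_ms / ms))
    return out

for disk in RAW:
    for fs, (ops, rand) in normalise(disk).items():
        print(f"{disk}.{fs}: {ops} {rand}")
```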

I also ran an attic-based benchmark. The durations of the attic runs (on the full-disk 8TB SMR partitions) were:

- ext4: 1 days 1 hours 19 minutes 54.69 seconds
- btrfs: 1 days 40 minutes 8.93 seconds
- nilfs: 22 hours 12 minutes 26.89 seconds

In each case the attic repositories had the following stats:

| | Original size | Compressed size | Deduplicated size |
|---|---|---|---|
| This archive | 1.00 TB | 639.69 GB | 515.84 GB |
| All archives | 901.92 GB | 639.69 GB | 515.84 GB |

What is Ext4? Linux’s Fast Native File System

Before we answer the question posed by this article, a bit of background will be required. Ext4 is only the latest, albeit not particularly new, iteration of the venerable Linux Extended file system (Extfs).

What is Ext4?

Ext4 started as a set of extensions to, yes, you guessed it–Ext3. Ext3 was itself an evolution of Ext2, which was the offspring of Extfs, the original Linux-specific file system. Extfs was created by Rémy Card and others to overcome the limitations of the MINIX file system employed by Linus Torvalds in the first Linux kernel. MINIX (1987), written by Andrew S. Tanenbaum, is a Unix-like operating system. The man wrote it for the benefit of higher education, so that UNIX could be taught without paying a considerable sum for the real deal. As mentioned, the MINIX file system has limitations, hence Extfs was “extending” it.

Ext4 was added to the Linux kernel in 2006 (as Ext4dev) and declared stable in 2008. It’s what’s known as a journaling file system (a feature inherited from Ext3), i.e. a list of impending and implemented changes is maintained. It’s a bit different from your diary in that its most recent entries describe what will happen, rather than what has. A combination day planner/journal, if you will. Journaling (which is optional) makes it possible to recover quickly from a crash or power failure by replaying the journal, without running a utility (e2fsck) over the entire data structure in search of wackiness.
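The journaling idea (write down what will happen, commit it, then apply it) can be sketched as a toy write-ahead log. This is a hypothetical illustration of the technique, not ext4’s actual journal: the class and method names are invented, and the real jbd2 journal has its own on-disk format and, in ext4’s default data=ordered mode, journals only metadata.

```python
class ToyJournal:
    """Hypothetical write-ahead journal illustrating the idea only."""

    def __init__(self):
        self.journal = []  # stands in for the on-disk journal area
        self.disk = {}     # stands in for the main metadata structures

    def transaction(self, updates, crash=None):
        entry = {"updates": dict(updates), "committed": False}
        self.journal.append(entry)          # 1. write down what *will* happen
        if crash == "before_commit":
            return                          # power lost: entry never committed
        entry["committed"] = True           # 2. commit record reaches the journal
        if crash == "before_checkpoint":
            return                          # power lost: committed but not applied
        self.disk.update(entry["updates"])  # 3. checkpoint into the main structures
        self.journal.pop()                  # 4. journal space can be reused

    def recover(self):
        """Replay committed entries and drop incomplete ones. The work is bounded
        by the size of the journal, not by the size of the whole file system."""
        for entry in self.journal:
            if entry["committed"]:
                self.disk.update(entry["updates"])
        self.journal.clear()

j = ToyJournal()
j.transaction({"inode 12": "size=4096"})                            # completes normally
j.transaction({"inode 13": "size=1024"}, crash="before_checkpoint")  # committed, not applied
print(j.disk)  # inode 13 is missing from the main structures...
j.recover()
print(j.disk)  # ...until the journal is replayed
```

A transaction that crashes before its commit record is simply discarded on recovery, so the structures never end up half-updated.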

Ext4 is a standard root/tree (hierarchical) file system that reserves space for a boot sector and, like the Unix File System, uses inodes (index nodes) to describe files and other objects. It offers transparent encryption, uses checksums on its metadata (the journal and other structures), supports TRIM, and implements delayed allocation.

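Delayed allocation means that Ext4 holds newly written data in its cache and postpones choosing the on-disk blocks until the data is flushed, when it can determine the most efficient (typically contiguous) way to lay it out. This is a boon to performance, but introduces the possibility that data might not be written in the case of power failure. Journaling keeps the file system consistent in this scenario, although data still sitting in the cache can be lost.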
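The benefit of delayed allocation is easiest to see with two files written in small interleaved pieces. The toy allocator below uses hypothetical names and a simple next-free-block policy, nothing like ext4’s real multiblock allocator. It either picks a block as each piece arrives, or only caches the pieces and allocates at flush time, once each file’s total size is known:

```python
def count_extents(blocks):
    """Number of contiguous runs in a list of block numbers."""
    blocks = sorted(blocks)
    return sum(1 for i, b in enumerate(blocks) if i == 0 or b != blocks[i - 1] + 1)

def immediate_allocation(writes):
    """Choose a block for every piece the moment it is written."""
    next_free, layout = 0, {}
    for name in writes:  # each entry = one block's worth of data for file `name`
        layout.setdefault(name, []).append(next_free)
        next_free += 1
    return layout

def delayed_allocation(writes):
    """Only cache the pieces; allocate per file when flushing."""
    pending = {}
    for name in writes:
        pending[name] = pending.get(name, 0) + 1  # no block chosen yet
    next_free, layout = 0, {}
    for name, nblocks in pending.items():          # flush: sizes are now known
        layout[name] = list(range(next_free, next_free + nblocks))
        next_free += nblocks
    return layout

WRITES = ["a", "b"] * 8  # two files growing in lock-step, one block at a time

for policy in (immediate_allocation, delayed_allocation):
    layout = policy(WRITES)
    print(policy.__name__, {f: count_extents(b) for f, b in layout.items()})
```

Allocating at write time leaves each file split into 8 extents; deferring the decision gives each file a single contiguous extent.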

Beyond that, Ext4 uses 48-bit block addressing that allows up to an exbibyte (EiB) of data with 4 KiB blocks. 12-byte “extents”, each describing a large chunk of contiguous space, augment Ext3’s 32-bit direct and indirect block addressing. The maximum single file size in Ext4 is 16 tebibytes (TiB) with 4 KiB blocks, and the maximum number of files is 4 billion.
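The limits in that paragraph follow from the on-disk extent layout. In the kernel’s `struct ext4_extent` (fs/ext4/ext4_extents.h), an extent is 12 bytes: a 32-bit logical block number, a 16-bit length, and a 48-bit physical block number split into 16-bit high and 32-bit low halves. The sketch below packs that layout with Python’s `struct` module and works out the limits, assuming the common 4 KiB block size; `pack_extent` and `unpack_extent` are hypothetical helpers.

```python
import struct

# struct ext4_extent: __le32 ee_block, __le16 ee_len, __le16 ee_start_hi, __le32 ee_start_lo
EXTENT = struct.Struct("<IHHI")
BLOCK_SIZE = 4096     # assumption: the common 4 KiB block size
MAX_INIT_LEN = 32768  # ee_len values above this mark an unwritten extent

def pack_extent(logical, length, physical):
    """Pack one extent; struct raises struct.error if `physical` needs more than 48 bits."""
    return EXTENT.pack(logical, length, physical >> 32, physical & 0xFFFFFFFF)

def unpack_extent(raw):
    logical, length, hi, lo = EXTENT.unpack(raw)
    return logical, length, (hi << 32) | lo

max_volume = 2**48 * BLOCK_SIZE         # 48-bit physical block numbers
max_file = 2**32 * BLOCK_SIZE           # 32-bit logical block numbers
max_extent = MAX_INIT_LEN * BLOCK_SIZE  # longest initialized extent

print(EXTENT.size, "bytes per extent")
print(max_volume // 2**60, "EiB max volume")
print(max_file // 2**40, "TiB max file")
print(max_extent // 2**20, "MiB max per extent")
```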

Note: When it comes to Tebibytes versus Terabytes et al, numbers whose names share the same first two letters (“ex”, “gi”, “pe”, etc.) are roughly equivalent in size. E.g., a gigabyte (10^9) is 1,000,000,000 bytes while 1 gibibyte (2^30) is 1,073,741,824 bytes. Remember them as “BI”nary and b“A”se 10.
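The “roughly equivalent” gap between the decimal and binary units grows with each step, from 2.4% at kilo/kibi to about 15% at exa/exbi. A short sketch that tabulates it (`unit_table` is a hypothetical helper):

```python
PREFIXES = ["kilo/kibi", "mega/mebi", "giga/gibi", "tera/tebi", "peta/pebi", "exa/exbi"]

def unit_table():
    """(prefix pair, decimal bytes, binary bytes, binary/decimal) for each step."""
    rows = []
    for step, name in enumerate(PREFIXES, start=1):
        decimal = 10 ** (3 * step)   # base 10: 1000**step
        binary = 2 ** (10 * step)    # binary: 1024**step
        rows.append((name, decimal, binary, binary / decimal))
    return rows

for name, decimal, binary, ratio in unit_table():
    print(f"{name:10} {decimal:>26,} {binary:>26,}  {ratio:.3f}")
```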

In short, the main advantages and disadvantages of Ext4 are…

The Upside

- A long successful track record

- Very good performance

- Handles up to 1 exbibyte (EiB) of data (about 1 million tebibytes)

- Journaling makes crash recovery quick

- Backwards compatible with Ext3

The Downside

- Lacks newer techniques such as copy-on-write

- No integrated volume manager as with Btrfs and OpenZFS

- No native Windows support (third-party drivers are available, both free ones and from Paragon Software)

When to use Ext4

As Ext4 is fast, tried, and true, not to mention the default file system on many Linux distributions, it’s perfectly fine for end-user fans of Tux. For NAS, servers, or any situation where fault tolerance and data integrity are more important than speed, Btrfs or OpenZFS could be better choices.

What is the most high-performance Linux filesystem for storing a lot of small files (HDD, not SSD)?

I have a directory tree that contains many small files and a small number of larger files. The average size of a file is about 1 kilobyte. There are 210158 files and directories in the tree (this number was obtained by running `find | wc -l`). A small percentage of files gets added/deleted/rewritten several times per week. This applies to the small files as well as to the (small number of) larger files.

The filesystems that I tried (ext4, btrfs) have some problems with the placement of files on disk. Over a longer span of time, the physical positions of files on the disk (rotating media, not a solid state disk) become more randomly distributed. The negative consequence of this random distribution is that the filesystem gets slower (for example, 4 times slower than a fresh filesystem).

Is there a Linux filesystem (or a method of filesystem maintenance) that does not suffer from this performance degradation and is able to maintain a stable performance profile on rotating media? The filesystem may run on FUSE, but it needs to be reliable.
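For anyone wanting to reproduce the figures in the question on their own tree, here is a rough Python equivalent of `find | wc -l` that also reports the average regular-file size. It is a sketch under stated assumptions: `tree_stats` is a hypothetical helper, symlinks are counted but not followed (as with plain `find`), and unreadable directories are silently skipped.

```python
import os

def tree_stats(root):
    """Return (entries as `find root | wc -l` would count them,
    number of regular files, average regular-file size in bytes)."""
    entries = 1  # `find` also prints the starting directory itself
    nfiles = 0
    total_bytes = 0
    for dirpath, dirnames, filenames in os.walk(root):  # does not follow symlinks
        entries += len(dirnames) + len(filenames)
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path) and not os.path.islink(path):
                nfiles += 1
                total_bytes += os.path.getsize(path)
    average = total_bytes / nfiles if nfiles else 0.0
    return entries, nfiles, average
```

For example, `print(tree_stats("/path/to/tree"))` prints the entry count, the file count and the mean file size for that tree.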

If you know which files are going to be big/not changing very often and which are going to be small/frequently changing, you might want to create two filesystems with different options, each better suited to its scenario. If you need them to be accessible as if they were part of the same structure, you can do some tricks with mounts and symlinks.
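One way to do the symlink trick is sketched below, assuming the split can be made by file size: files at or above a threshold are moved to the same relative path on the second filesystem and replaced with a symlink. The function name, the 64 KiB threshold and the directory layout are all hypothetical. One caveat: programs that save a file by writing a temporary copy and renaming it over the original will replace the symlink with a regular file on the small-file filesystem; when the split follows directory boundaries, mounting the second filesystem on those directories avoids that.

```python
import os
import shutil

SIZE_THRESHOLD = 64 * 1024  # hypothetical cut-off between "small" and "large" files

def relocate_large_files(small_root, large_root, threshold=SIZE_THRESHOLD):
    """Move files of at least `threshold` bytes from small_root to the same
    relative path under large_root, leaving a symlink behind so paths keep working."""
    moved = []
    for dirpath, _dirnames, filenames in os.walk(small_root):
        for name in filenames:
            src = os.path.join(dirpath, name)
            if os.path.islink(src) or os.path.getsize(src) < threshold:
                continue  # already relocated, or small enough to stay
            rel = os.path.relpath(src, small_root)
            dst = os.path.join(large_root, rel)
            os.makedirs(os.path.dirname(dst), exist_ok=True)
            shutil.move(src, dst)                  # copies across filesystems if needed
            os.symlink(os.path.abspath(dst), src)  # absolute target for the link
            moved.append(rel)
    return moved
```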

I am quite surprised to hear that btrfs (with its copy-on-write feature) has become sluggish for you over time. I would be curious to see your results; they might help us find a new direction for performance tuning with it.

There is a new animal out there, ZFS on Linux, available in both native and FUSE implementations, in case you want to have a look.

I tried ZFS on Linux once, and it was quite unstable. I managed to completely lock up the filesystem quite often: the box would keep working, but any access to the FS would hang.