- How can I determine the full CUDA version + subversion?

- 2 Answers

- How to Get the Version of CUDA Installed on Linux

- Getting the Max Supported Version Number of CUDA on Linux

- Getting the Version Number of Installed CUDA on Linux

- Conclusion

- About the author

- Shahriar Shovon

- How to Check CUDA Version Easily

- Prerequisite

- What is CUDA?

- Method 1 — Use nvcc to check CUDA version

- What is nvcc?

- Method 2 — Check CUDA version by nvidia-smi from NVIDIA Linux driver

- What is nvidia-smi?

- Method 3 — cat /usr/local/cuda/version.txt

- 3 ways to check CUDA version

How can I determine the full CUDA version + subversion?

Older CUDA toolkits shipped a version.txt file in the toolkit’s root directory, which was quite useful. However, as of CUDA 11.1, this file no longer exists. How can I determine, on Linux, from the command line, and by inspecting /path/to/cuda/toolkit, which exact version I’m looking at, including the subversion?

2 Answers

(Answer due to @RobertCrovella’s comment)

    /path/to/cuda/toolkit/bin/nvcc --version | egrep -o "V[0-9]+\.[0-9]+\.[0-9]+" | cut -c2-

And of course, for the CUDA version currently chosen and configured to be used, just take the nvcc that’s on the path:

    nvcc --version | egrep -o "V[0-9]+\.[0-9]+\.[0-9]+" | cut -c2-

For example: you would get 11.2.67 for the download of CUDA 11.2 which was available this week on the NVIDIA website.

The full nvcc --version output would be:

    nvcc: NVIDIA (R) Cuda compiler driver
    Copyright (c) 2005-2020 NVIDIA Corporation
    Built on Mon_Nov_30_19:08:53_PST_2020
    Cuda compilation tools, release 11.2, V11.2.67
    Build cuda_11.2.r11.2/compiler.29373293_0

The following Python code works on both Windows and Linux, and I have tested it with a variety of CUDA versions (8 to 11.2, most of them).

It searches for the CUDA path via a series of guesses (checking environment variables, nvcc locations or default installation paths) and then grabs the CUDA version from the output of nvcc --version. It doesn’t use @einpoklum’s style of regexp; it simply assumes there is only one release string within the output of nvcc --version, but that can easily be checked.

You can also just use the first function if you have a known path to query.

I’m adding it as an extra to @einpoklum’s answer; it does the same thing, just in Python.

    import glob
    import os
    from os.path import join as pjoin
    import subprocess
    import sys

    IS_WINDOWS = sys.platform == 'win32'


    def get_cuda_version(cuda_home):
        """Locate the CUDA version (major.minor) of the toolkit at cuda_home."""
        version_file = os.path.join(cuda_home, "version.txt")
        try:
            if os.path.isfile(version_file):
                # Older toolkits: the first line looks like "CUDA Version 10.2.89"
                with open(version_file) as f:
                    version_str = f.readline().strip()
                full_version = version_str.split(" ")[2]
            else:
                # Newer toolkits: parse "release 11.2, V11.2.67" from nvcc
                version_str = subprocess.check_output(
                    [os.path.join(cuda_home, "bin", "nvcc"), "--version"]).decode()
                idx = version_str.find("release ")
                full_version = version_str[idx + len("release "):].split(",")[0]
            # Keep only major.minor, e.g. "11.2"
            return ".".join(full_version.split(".")[:2])
        except Exception:
            raise RuntimeError("Cannot read cuda version file")


    def locate_cuda():
        """Locate the CUDA environment on the system

        Returns a dict with keys 'home', 'include' and 'lib64'
        and values giving the absolute path to each directory.

        Starts by looking for the CUDA_HOME or CUDA_PATH env variable.
        If not found, everything is based on finding 'nvcc' in the PATH.
        """
        # Guess #1
        cuda_home = os.environ.get('CUDA_HOME') or os.environ.get('CUDA_PATH')
        if cuda_home is None:
            # Guess #2
            try:
                which = 'where' if IS_WINDOWS else 'which'
                nvcc = subprocess.check_output(
                    [which, 'nvcc']).decode().rstrip('\r\n')
                cuda_home = os.path.dirname(os.path.dirname(nvcc))
            except subprocess.CalledProcessError:
                # Guess #3
                if IS_WINDOWS:
                    cuda_homes = glob.glob(
                        'C:/Program Files/NVIDIA GPU Computing Toolkit/CUDA/v*.*')
                    if len(cuda_homes) == 0:
                        cuda_home = ''
                    else:
                        cuda_home = cuda_homes[0]
                else:
                    cuda_home = '/usr/local/cuda'
                if not os.path.exists(cuda_home):
                    cuda_home = None
        if cuda_home is None:
            raise EnvironmentError(
                'The CUDA path could not be located in $PATH, $CUDA_HOME or $CUDA_PATH. '
                'Either add it to your path, or set $CUDA_HOME or $CUDA_PATH.')
        version = get_cuda_version(cuda_home)
        # Keys follow the docstring above; the library directory layout
        # ('lib/x64' on Windows, 'lib64' elsewhere) is an assumption.
        cudaconfig = {'home': cuda_home,
                      'include': pjoin(cuda_home, 'include'),
                      'lib64': pjoin(cuda_home, pjoin('lib', 'x64') if IS_WINDOWS else 'lib64')}
        if not all([os.path.exists(v) for v in cudaconfig.values()]):
            raise EnvironmentError(
                'The CUDA path could not be located in $PATH, $CUDA_HOME or $CUDA_PATH. '
                'Either add it to your path, or set $CUDA_HOME or $CUDA_PATH.')
        return cudaconfig, version


    CUDA, CUDA_VERSION = locate_cuda()

How to Get the Version of CUDA Installed on Linux

CUDA is a parallel computing platform and programming model for NVIDIA GPUs. It is used to speed up computation-heavy workloads, e.g. Artificial Intelligence programs, by running them on NVIDIA GPUs.

In this article, we will show you how to find the version of CUDA that is supported by the NVIDIA GPU drivers installed on Linux. We will also show you how to find the version number of CUDA that is installed on your Linux computer.

Getting the Max Supported Version Number of CUDA on Linux

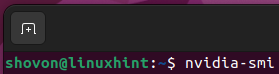

If you want to know the CUDA versions that the currently installed NVIDIA drivers of your Linux computer support, open a “Terminal” app and run the following command:

    $ nvidia-smi

In the output of the command, you will see the version number of the NVIDIA driver installed on your Linux computer [1]. You will also see the maximum version of CUDA that the installed NVIDIA driver supports [2].

In this example, NVIDIA driver version 525.105.17 supports CUDA version 12.0 or lower.
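The “12.0 or lower” rule can be sketched in Python as a simple version comparison. This is a minimal sketch, not an official compatibility check: the helper names `parse_version` and `driver_supports_toolkit` are hypothetical, and it assumes that comparing major.minor numbers is sufficient.

```python
def parse_version(text):
    """Turn a dotted version string such as 'v11.5.119' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().lstrip("vV").split("."))


def driver_supports_toolkit(driver_max_cuda, toolkit_version):
    """Return True if the toolkit's major.minor is at or below the driver's maximum.

    Assumption: only major.minor matters for this simplified check.
    """
    return parse_version(toolkit_version)[:2] <= parse_version(driver_max_cuda)[:2]


# Values taken from the examples in this article.
print(driver_supports_toolkit("12.0", "11.5.119"))
print(driver_supports_toolkit("12.0", "12.1.0"))
```

The first call uses the driver maximum from this example and the toolkit version reported later in the article; the second shows a toolkit that is newer than the driver’s maximum.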

Getting the Version Number of Installed CUDA on Linux

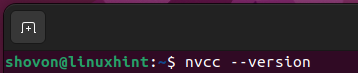

To find the version number of CUDA that is installed on your Linux computer, open a “Terminal” app and run the following command:

    $ nvcc --version

The version number of CUDA installed on your Linux computer should be displayed.

In this example, the CUDA version that is installed on our Ubuntu machine is v11.5.119.

Conclusion

We showed you how to find out the versions of CUDA that are supported by the currently installed NVIDIA GPU drivers of your Linux computer. We also showed you how to find the version number of CUDA that is installed on your Linux computer.

About the author

Shahriar Shovon

Freelancer & Linux System Administrator. Also loves Web API development with Node.js and JavaScript. I was born in Bangladesh. I am currently studying Electronics and Communication Engineering at Khulna University of Engineering & Technology (KUET), one of the demanding public engineering universities of Bangladesh.

How to Check CUDA Version Easily

Here you will learn how to check the NVIDIA CUDA version in 3 ways: nvcc from the CUDA toolkit, nvidia-smi from the NVIDIA driver, and simply checking a file. Using one of these methods, you will be able to see the CUDA version regardless of the software you are using, such as PyTorch, TensorFlow, conda (Miniconda/Anaconda) or Docker.

Prerequisite

You should have the NVIDIA driver installed on your system, as well as the NVIDIA CUDA toolkit (aka CUDA), before we start. If you haven’t, you can install it by running sudo apt install nvidia-cuda-toolkit.

What is CUDA?

CUDA is a general parallel computing architecture and programming model developed by NVIDIA for its graphics cards (GPUs). Using CUDA, PyTorch or TensorFlow developers can dramatically speed up model training by using GPU resources effectively.

In GPU-accelerated computing, the sequential portion of a task runs on the CPU for optimized single-threaded performance, while the compute-intensive portion, such as PyTorch tensor operations, runs in parallel via CUDA on thousands of GPU cores. When using CUDA, developers can add a few basic keywords to common languages such as C, C++ and Python to implement parallelism.

Method 1 — Use nvcc to check CUDA version

If you have installed the CUDA toolkit, either from the official Ubuntu repositories via sudo apt install nvidia-cuda-toolkit or by downloading and installing it manually from the official NVIDIA website, you should have nvcc in your path (try echo $PATH). With the Ubuntu package, which nvcc reports /usr/bin/nvcc; a manual install typically places it under /usr/local/cuda/bin instead, which may need to be added to your PATH.

To check the CUDA version with nvcc, run

    nvcc --version

The last line of the output shows the CUDA version. The version here is 10.1. Yours may vary, and can be 10.0, 10.1, 10.2 or even an older version such as 9.0, 9.1 or 9.2. Here is the full text output:

    vh@varhowto-com:~$ nvcc --version
    nvcc: NVIDIA (R) Cuda compiler driver
    Copyright (c) 2005-2019 NVIDIA Corporation
    Built on Sun_Jul_28_19:07:16_PDT_2019
    Cuda compilation tools, release 10.1, V10.1.243

What is nvcc?

nvcc is the NVIDIA CUDA Compiler, thus the name. It is the main wrapper around the CUDA compiler suite. Beyond reporting its version, nvcc is used to compile and link both host and GPU code.

Check out nvcc’s manpage for more information.
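To read the version from a script rather than by eye, the nvcc output shown above can be parsed. The following is a minimal Python sketch: the function names are hypothetical, and the pattern assumes the last line keeps the "release X.Y, VX.Y.Z" form seen in this output.

```python
import re
import subprocess

# Matches e.g. "release 10.1, V10.1.243" in the nvcc --version output.
NVCC_VERSION_RE = re.compile(r"release (\d+\.\d+), V(\d+\.\d+\.\d+)")


def parse_nvcc_version(output):
    """Return (release, full_version) from `nvcc --version` text, or None."""
    match = NVCC_VERSION_RE.search(output)
    return match.groups() if match else None


def installed_nvcc_version(nvcc="nvcc"):
    """Run nvcc and parse its output; returns None if nvcc cannot be run."""
    try:
        output = subprocess.check_output([nvcc, "--version"], text=True)
    except (OSError, subprocess.CalledProcessError):
        return None
    return parse_nvcc_version(output)


sample = "Cuda compilation tools, release 10.1, V10.1.243"
print(parse_nvcc_version(sample))  # ('10.1', '10.1.243')
```

Keeping the parsing separate from the subprocess call means the pattern can be checked against saved output on a machine without CUDA installed.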

Method 2 — Check CUDA version by nvidia-smi from NVIDIA Linux driver

The second way to check the CUDA version is to run nvidia-smi, which is installed with the NVIDIA driver, specifically the nvidia-utils package. You can install the NVIDIA driver either from the official Ubuntu repositories or from the NVIDIA website.

    $ which nvidia-smi
    /usr/bin/nvidia-smi
    $ dpkg -S /usr/bin/nvidia-smi
    nvidia-utils-440: /usr/bin/nvidia-smi

To check the CUDA version with nvidia-smi, run

    nvidia-smi

The version is at the top right of the output. Here, my version is CUDA 10.2. You may see 10.0, 10.1 or even an older version such as 9.0, 9.1 or 9.2. Note that this is the highest CUDA version the installed driver supports, which is not necessarily the version of the CUDA toolkit you have installed.

Besides the CUDA version, the nvidia-smi output contains more details: the driver version (440.100), GPU name, GPU fan percentage, power consumption/capability and memory usage. You can also find the processes that are using the GPU at the moment. This is helpful if you want to check whether your model or framework, such as PyTorch or TensorFlow, is using the GPU.

Here is the full text output:

    vh@varhowto-com:~$ nvidia-smi
    Tue Jul 07 10:07:26 2020
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 440.100      Driver Version: 440.100      CUDA Version: 10.2     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |===============================+======================+======================|
    |   0  GeForce GTX 1070    Off  | 00000000:01:00.0  On |                  N/A |
    | 31%   48C    P0    35W / 151W |   2807MiB /  8116MiB |      1%      Default |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                       GPU Memory |
    |  GPU       PID   Type   Process name                             Usage      |
    |=============================================================================|
    |    0      1582      G   /usr/lib/xorg/Xorg                           262MiB |
    |    0      2481      G   /usr/lib/xorg/Xorg                          1646MiB |
    |    0      2686      G   /usr/bin/gnome-shell                         563MiB |
    |    0      3244      G   …AAAAAAAAAAAACAAAAAAAAAA= --shared-files     319MiB |
    +-----------------------------------------------------------------------------+

What is nvidia-smi?

nvidia-smi (also known as NVSMI) is the NVIDIA System Management Interface program. It provides monitoring and management capabilities for NVIDIA’s Tesla, Quadro, GRID and GeForce GPUs from the Fermi and higher architecture families. GeForce Titan series products are supported for most functions, with only limited information provided for the rest of the GeForce range.

NVSMI is also a cross-platform application that supports both common NVIDIA driver-supported Linux distros and 64-bit versions of Windows starting with Windows Server 2008 R2. Metrics may be used directly by users via stdout, or stored via CSV and XML formats for scripting purposes.

For more information, check out the man page of nvidia-smi .
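If you need the driver and CUDA versions in a script, the header line of the nvidia-smi output can be parsed. This is a minimal Python sketch: the function name is hypothetical, and the pattern assumes the header keeps the "Driver Version: ... CUDA Version: ..." wording shown in the output above.

```python
import re

# Matches the header line, e.g.
# "| NVIDIA-SMI 440.100  Driver Version: 440.100  CUDA Version: 10.2 |"
HEADER_RE = re.compile(
    r"Driver Version:\s*(?P<driver>[\d.]+)\s+CUDA Version:\s*(?P<cuda>[\d.]+)"
)


def parse_nvidia_smi_header(output):
    """Return {'driver': ..., 'cuda': ...} from nvidia-smi text, or None."""
    match = HEADER_RE.search(output)
    return match.groupdict() if match else None


sample = "| NVIDIA-SMI 440.100 Driver Version: 440.100 CUDA Version: 10.2 |"
print(parse_nvidia_smi_header(sample))  # {'driver': '440.100', 'cuda': '10.2'}
```

The function returns None when the header does not contain both fields, so callers should handle that case instead of assuming a version is always present.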

Method 3 — cat /usr/local/cuda/version.txt

    cat /usr/local/cuda/version.txt

Note that if you install the NVIDIA driver and CUDA from Ubuntu 20.04’s own official repository, this approach may not work.
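The same file check can be done from Python. The sketch below is hedged: the function name is hypothetical, it assumes version.txt holds a single line such as "CUDA Version 10.2.89", and the fallback to a version.json file with a "cuda" / "version" entry is an assumption about newer toolkits that you should verify against your own installation.

```python
import json
import os


def read_toolkit_version(cuda_home="/usr/local/cuda"):
    """Return the toolkit version from version.txt or version.json, or None."""
    txt_path = os.path.join(cuda_home, "version.txt")
    json_path = os.path.join(cuda_home, "version.json")
    if os.path.isfile(txt_path):
        # Assumed first line: "CUDA Version 10.2.89"
        with open(txt_path) as f:
            parts = f.readline().split()
        return parts[-1] if parts else None
    if os.path.isfile(json_path):
        # Assumed layout: {"cuda": {"version": "11.2.0", ...}, ...}
        with open(json_path) as f:
            data = json.load(f)
        return data.get("cuda", {}).get("version")
    return None


# Prints None if neither file exists under the default path.
print(read_toolkit_version())
```

Returning None rather than raising makes it easy to fall back to nvcc or nvidia-smi when neither file is present.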

3 ways to check CUDA version

There are basically three ways to check the CUDA version. If one does not work, another should.

- Perhaps the easiest way is to check a file: run cat /usr/local/cuda/version.txt

Note: this may not work on Ubuntu 20.04.