- mekb-turtle/imgfb

- README.md

- About

- How to write directly to linux framebuffer?

- Apr 04 — Writing To The Framebuffer

- Gradients

- Text

- Images

- Framebuffer Extras

- How to use OpenCL to write directly to linux framebuffer with zero-copy?

Draws a farbfeld or jpeg image to the Linux framebuffer

mekb-turtle/imgfb

README.md

Draws a farbfeld or jpeg image to the Linux framebuffer

Usage: imgfb

- Image path (positional): path to the image file to read (or to write, if reversed), or `-` for stdin (or stdout, if reversed); can be farbfeld, JPEG, or PNG
- `--framebuffer`, `-f`: framebuffer name, defaults to `fb0`
- `--offsetx` `-x`, `--offsety` `-y`: offset at which to draw the image, defaults to `0 0`
- `--reverse`, `-r`: reads the framebuffer and writes it to a file (farbfeld only) instead; the offset options do not work in this mode

About

Draws a farbfeld or jpeg image to the Linux framebuffer

How to write directly to linux framebuffer?

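Basically, you open /dev/fb0, do some ioctls on it (FBIOGET_VSCREENINFO and FBIOGET_FSCREENINFO give you the resolution, pixel format, and line length), then mmap it. Then you just write to the mmap’d area in your process.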
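For comparison, here is a minimal Python sketch of the same open/ioctl/mmap sequence. It assumes a framebuffer at `/dev/fb0`, takes the `FBIOGET_VSCREENINFO` request number (0x4600) and the field order of `struct fb_var_screeninfo` (40 `__u32` fields) from `<linux/fb.h>`, and assumes the sysfs attribute `/sys/class/graphics/fb0/stride` is available for the line length. The function names are hypothetical.

```python
import fcntl
import mmap
import os
import struct

# Request number from <linux/fb.h>.
FBIOGET_VSCREENINFO = 0x4600
# struct fb_var_screeninfo consists of 40 __u32 fields (160 bytes).
VAR_SCREENINFO_SIZE = 160


def parse_var_screeninfo(buf):
    """Decode the leading fields of struct fb_var_screeninfo (native byte order)."""
    (xres, yres, xres_virtual, yres_virtual,
     xoffset, yoffset, bits_per_pixel) = struct.unpack_from("=7I", buf)
    return {
        "xres": xres, "yres": yres,
        "xres_virtual": xres_virtual, "yres_virtual": yres_virtual,
        "xoffset": xoffset, "yoffset": yoffset,
        "bits_per_pixel": bits_per_pixel,
    }


def map_framebuffer(dev="/dev/fb0", stride_path="/sys/class/graphics/fb0/stride"):
    """Open the device, query it with an ioctl, and mmap it read/write."""
    fd = os.open(dev, os.O_RDWR)
    buf = bytearray(VAR_SCREENINFO_SIZE)
    fcntl.ioctl(fd, FBIOGET_VSCREENINFO, buf)  # the kernel fills buf in place
    info = parse_var_screeninfo(buf)
    # line_length (bytes per row) lives in fb_fix_screeninfo; reading it from
    # sysfs avoids decoding that struct's architecture-dependent layout.
    with open(stride_path) as f:
        stride = int(f.read())
    size = stride * info["yres_virtual"]
    fb = mmap.mmap(fd, size, mmap.MAP_SHARED, mmap.PROT_READ | mmap.PROT_WRITE)
    return fd, fb, info, stride
```

Usage would look like `fd, fb, info, stride = map_framebuffer()`, after which `fb[off:off + 4] = bytes((b, g, r, 0))` sets one pixel at `off = y * stride + x * 4`, assuming a 32bpp screen with blue stored first.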

Are you looking to write a device driver? If so, check out this HowTo guide.

Also read the file Documentation/fb/framebuffer.txt (and the adjoining docs for specific drivers) in the linux kernel tree.

I found this code in another Stack Overflow question. It draws a shaded pink rectangle to the screen using the framebuffer, and it worked straight away for me. You have to run it from a real text console (a Linux virtual terminal), not inside a graphical terminal.

```c
#include <fcntl.h>
#include <linux/fb.h>
#include <stdio.h>
#include <stdlib.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <unistd.h>

int main(void)
{
    int fbfd = 0;
    struct fb_var_screeninfo vinfo;
    struct fb_fix_screeninfo finfo;
    long int screensize = 0;
    unsigned char *fbp = NULL;
    int x = 0, y = 0;
    long int location = 0;

    // Open the file for reading and writing
    fbfd = open("/dev/fb0", O_RDWR);
    if (fbfd == -1) {
        perror("Error: cannot open framebuffer device");
        exit(1);
    }
    printf("The framebuffer device was opened successfully.\n");

    // Get fixed screen information
    if (ioctl(fbfd, FBIOGET_FSCREENINFO, &finfo) == -1) {
        perror("Error reading fixed information");
        exit(2);
    }

    // Get variable screen information
    if (ioctl(fbfd, FBIOGET_VSCREENINFO, &vinfo) == -1) {
        perror("Error reading variable information");
        exit(3);
    }
    printf("%ux%u, %ubpp\n", vinfo.xres, vinfo.yres, vinfo.bits_per_pixel);

    // Size of the mapping in bytes: rows may be padded (line_length can exceed
    // xres * bytes per pixel), and xoffset/yoffset index into the virtual area
    screensize = (long int)finfo.line_length * vinfo.yres_virtual;

    // Map the device to memory
    fbp = mmap(NULL, screensize, PROT_READ | PROT_WRITE, MAP_SHARED, fbfd, 0);
    if (fbp == MAP_FAILED) {
        perror("Error: failed to map framebuffer device to memory");
        exit(4);
    }
    printf("The framebuffer device was mapped to memory successfully.\n");

    // Draw a 200x200 shaded rectangle starting at (100, 100)
    for (y = 100; y < 300; y++)
        for (x = 100; x < 300; x++) {
            // Figure out where in memory to put the pixel
            location = (x + vinfo.xoffset) * (vinfo.bits_per_pixel / 8) +
                       (y + vinfo.yoffset) * finfo.line_length;

            if (vinfo.bits_per_pixel == 32) {
                *(fbp + location) = 100;                      // Some blue
                *(fbp + location + 1) = 15 + (x - 100) / 2;   // A little green
                *(fbp + location + 2) = 200 - (y - 100) / 5;  // A lot of red
                *(fbp + location + 3) = 0;                    // No transparency
            } else { // assume 16bpp (RGB565)
                int b = 10;
                int g = (x - 100) / 6;        // A little green
                int r = 31 - (y - 100) / 16;  // A lot of red
                unsigned short int t = r << 11 | g << 5 | b;
                *((unsigned short int *)(fbp + location)) = t;
            }
        }

    munmap(fbp, screensize);
    close(fbfd);
    return 0;
}
```

Apr 04 — Writing To The Framebuffer

I’ve been thinking about cross-platform GUIs, and one question led to the next until I was asking: how do pixels end up on the monitor? A pretty neat Stack Overflow answer explains:

> The image displayed on the monitor is stored in your computer’s video RAM on the graphics card in a structure called a framebuffer.
>
> The data in the video RAM can be generated by the GPU or by the CPU. The video RAM is continuously read out by a specialized DMA component on the video card and sent to the monitor. The signal output to the monitor is either an analog signal (VGA) where the color components are sent through digital to analog converters before leaving the card, or a digital signal in the case of HDMI or DVI.
>
> Now, you probably realize that for a 1920×1080 display with 4 bytes per pixel, you only need about 8 MB to store the image, but the video RAM in your computer is probably many times that size. This is because the video RAM is not only intended for storing the framebuffer. The video RAM is directly connected to the GPU, a special purpose processor designed for efficient 3D rendering and video decoding.

This is super informative, and it also explains why my monitors plug directly into my GPU via DVI. Another answer I found claimed that no modern operating system will let you access the framebuffer directly. BUT LUCKILY THAT’S WRONG. With another Stack Exchange tip, it’s as easy as:

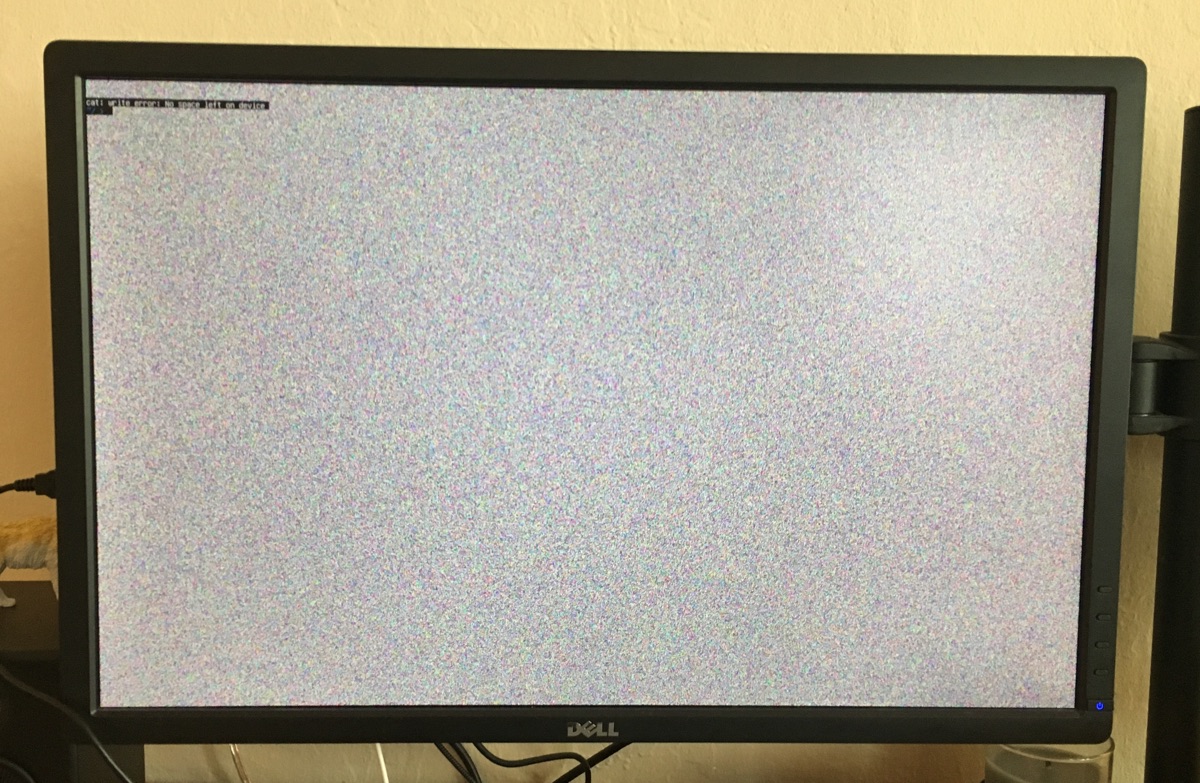

> cat /dev/urandom > /dev/fb0 -bash: /dev/fb0: Permission denied > sudo cat /dev/urandom > /dev/fb0 -bash: /dev/fb0: Permission denied > ls -al /dev/fb0 crw-rw---- 1 root video 29, 0 Apr 4 00:21 /dev/fb0 > sudo adduser seena video > cat /dev/urandom > /dev/fb0 Glossing over why sudo would mysteriously not work, this writes a bunch of noise out to the monitor:

Awesome! The kernel framebuffer docs have some general info, but the gist is that /dev/fb0 acts like any other memory device in /dev, and we can write to it like a file. Since it’s just a memory buffer, any write overwrites whatever was there before, which you can see when the console prompt immediately draws over the noise.
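Because /dev/fb0 behaves like a file, putting a pixel at (x, y) comes down to seeking to the right byte offset and writing. A minimal sketch, assuming 4 bytes per pixel stored blue, green, red, padding (the layout worked out under Gradients) and a known stride in bytes per row; `pixel_offset` and `write_pixel` are hypothetical helpers, and an in-memory `io.BytesIO` stands in for `open("/dev/fb0", "r+b")` so it runs anywhere:

```python
import io


def pixel_offset(x, y, stride, bytes_per_pixel=4):
    """Byte offset of pixel (x, y); consecutive rows are `stride` bytes apart."""
    return y * stride + x * bytes_per_pixel


def write_pixel(f, x, y, r, g, b, stride):
    """Seek to (x, y) in a framebuffer-like file and write one B, G, R, pad pixel."""
    f.seek(pixel_offset(x, y, stride))
    f.write(bytes((b, g, r, 0)))


# Stand-in for the real device: a 4x3 "screen" with 16-byte rows.
fake_fb = io.BytesIO(bytes(16 * 3))
write_pixel(fake_fb, 2, 1, 255, 128, 0, stride=16)
```

On a real screen the stride should come from the driver (`line_length`), since rows can be padded beyond `width * 4`.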

You can even read from the framebuffer: `cp /dev/fb0 screenshot` takes a raw screenshot, which would have been helpful before I took a bunch of pictures with my phone.
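The raw dump isn’t a file an image viewer understands, but with a 4-bytes-per-pixel blue, green, red, padding layout it converts easily. A minimal sketch that turns the dump into a binary PPM; the width, height, and optional stride are assumptions you must supply for your screen, and `bgrx_to_ppm` is a hypothetical name:

```python
def bgrx_to_ppm(raw, width, height, stride=None):
    """Convert a raw 32bpp B, G, R, pad framebuffer dump into binary PPM (P6) bytes."""
    if stride is None:
        stride = width * 4  # assume no padding at the end of each row
    out = bytearray(b"P6\n%d %d\n255\n" % (width, height))
    for y in range(height):
        row = raw[y * stride : y * stride + width * 4]
        rgb = bytearray(width * 3)
        rgb[0::3] = row[2::4]  # red is the third byte of each pixel
        rgb[1::3] = row[1::4]  # green is the second
        rgb[2::3] = row[0::4]  # blue is the first
        out += rgb
    return bytes(out)
```

For example, reading `screenshot` in binary mode and writing `bgrx_to_ppm(data, 1920, 1200)` to `screenshot.ppm` gives a file most image viewers can open.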

Gradients

Alright, the Stack Exchange answer also gives a script to write out a red box, but I wanted to take a shot at a gradient. After permuting the lines of the script below a bunch of times, I settled on this layout: the screen is written one horizontal line at a time, with one byte each for blue, green, red, and alpha(?) per pixel (4 bytes per pixel, which seems to be 24-bit true color plus a spare byte).
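The blue-first order is what you get if each pixel is a 32-bit `0x00RRGGBB` value (often called XRGB8888) stored on a little-endian machine such as x86. That is an assumption about this particular setup; the driver reports the real channel positions in the `red`, `green`, and `blue` bitfields of `fb_var_screeninfo`. A small sketch with hypothetical helper names showing the two views agree:

```python
import struct


def bgrx_bytes(r, g, b):
    """One pixel in the order the gradient script writes it: B, G, R, unused."""
    return bytes((b, g, r, 0))


def xrgb8888_word(r, g, b):
    """The same pixel as a 32-bit 0x00RRGGBB value stored little-endian."""
    return struct.pack("<I", (r << 16) | (g << 8) | b)


# Both encodings produce identical bytes, which is why blue comes first in memory.
assert bgrx_bytes(255, 128, 1) == xrgb8888_word(255, 128, 1)
```

The gradient script writes exactly this 4-byte pattern for every pixel.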

```python
xsize = 1920
ysize = 1200

with open('data.out', 'wb') as f:
    for y in range(0, ysize):
        for x in range(0, xsize):
            r = int(min(x / (xsize/256), 255))  # red ramps up left to right
            g = int(min(y / (ysize/256), 255))  # green ramps up top to bottom
            b = 0
            # One pixel: blue, green, red, then an unused (alpha?) byte
            f.write((b).to_bytes(1, byteorder='little'))
            f.write((g).to_bytes(1, byteorder='little'))
            f.write((r).to_bytes(1, byteorder='little'))
            f.write((0).to_bytes(1, byteorder='little'))
```

Text

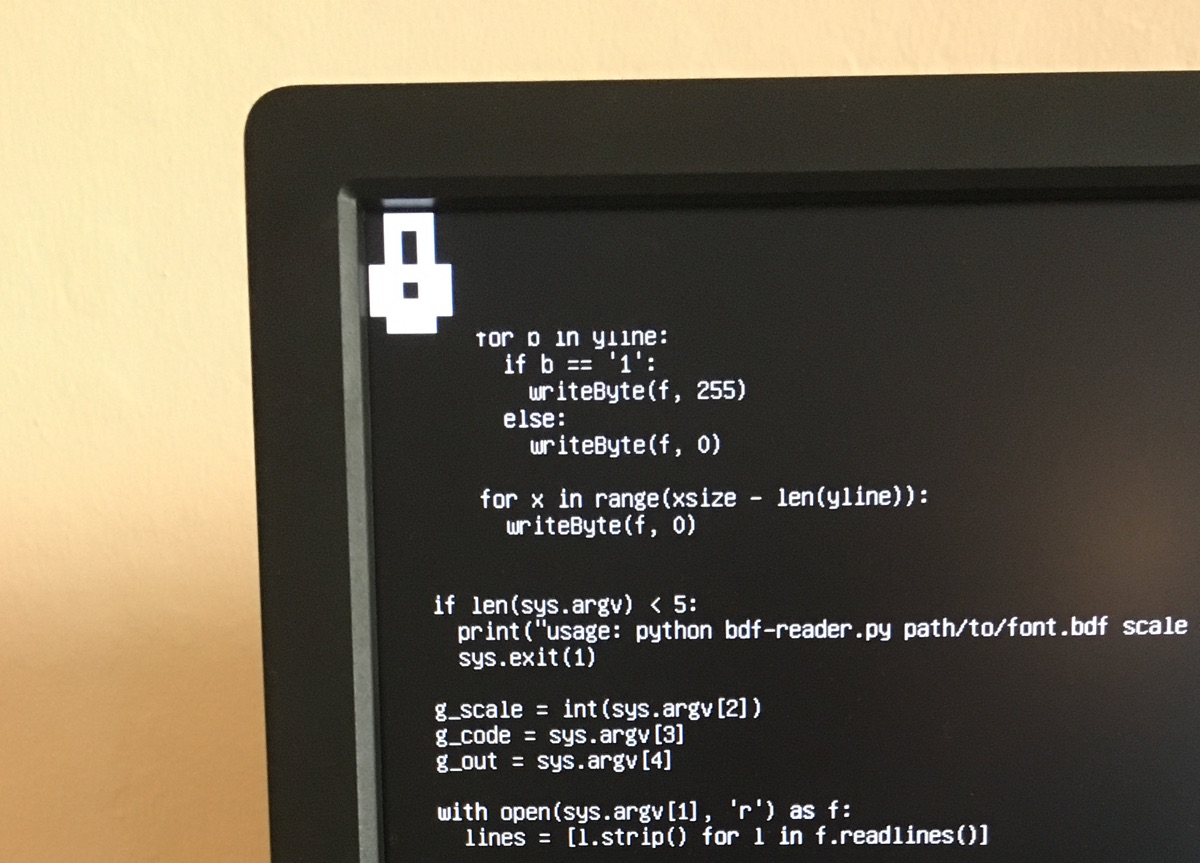

I’ve been running all of this from the Linux console (rather than the X server or another display server), which I believe is itself just rendering text and basic colors to the framebuffer, so I should be able to do that too. I grabbed Lemon, a bitmap font I used to use. Bitmap fonts describe each character as a grid of bits, which can be rendered easily without much thought about the complexity (and horrors) of text rendering, anti-aliasing, and the like.
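Drawing a bitmap glyph is just a loop over its bits: every set bit becomes one pixel. A minimal sketch, assuming a 32bpp blue, green, red, padding buffer (a `bytearray` here, an mmap of /dev/fb0 in practice) and glyph rows stored as integers with the leftmost pixel in the highest bit; `PLUS` is a hypothetical 5x5 glyph, not taken from Lemon:

```python
# Hypothetical 5x5 "plus" glyph, one int per row, leftmost pixel = highest bit.
PLUS = [0b00100, 0b00100, 0b11111, 0b00100, 0b00100]


def draw_glyph(buf, stride, x0, y0, rows, width, color=(255, 255, 255)):
    """Set the pixels of a 1-bit glyph in a 32bpp B, G, R, pad buffer."""
    r, g, b = color
    pixel = bytes((b, g, r, 0))
    for dy, bits in enumerate(rows):
        for col in range(width):
            if (bits >> (width - 1 - col)) & 1:  # is this pixel "on"?
                off = (y0 + dy) * stride + (x0 + col) * 4
                buf[off:off + 4] = pixel
            # unset bits are skipped, so the background shows through
```

Writing a string is then a matter of advancing `x0` by each glyph’s width.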

I started a janky reader for BDF files, and then started to regret both this idea and my Python script. Nevertheless, I produced a single “lock” icon from the font, drawn to the framebuffer! This could be extended to write out a whole text string, but again, it was really janky.
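BDF is a plain-text format: each glyph sits between `STARTCHAR` and `ENDCHAR`, `BBX` gives its width and height, and the lines after `BITMAP` are hex rows padded to whole bytes. A minimal parser sketch for a single glyph; it ignores the font header and the BBX offsets, and `parse_bdf_glyph` is a hypothetical name. It returns one integer per row with the leftmost pixel in the highest bit:

```python
def parse_bdf_glyph(lines):
    """Parse one STARTCHAR ... ENDCHAR block into (name, width, height, rows)."""
    name, width, height, rows = None, 0, 0, []
    in_bitmap = False
    for line in lines:
        fields = line.split()
        if not fields:
            continue
        key = fields[0]
        if key == "STARTCHAR":
            name = " ".join(fields[1:])
        elif key == "BBX":
            width, height = int(fields[1]), int(fields[2])
        elif key == "BITMAP":
            in_bitmap = True
        elif key == "ENDCHAR":
            break
        elif in_bitmap:
            # Each hex row is padded to whole bytes; shift the padding bits away.
            padded_bits = len(key) * 4
            rows.append(int(key, 16) >> (padded_bits - width))
    return name, width, height, rows
```

For a 5-pixel-wide glyph, the row `F8` (binary 11111000) becomes `0b11111` once the three padding bits are dropped.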

Images

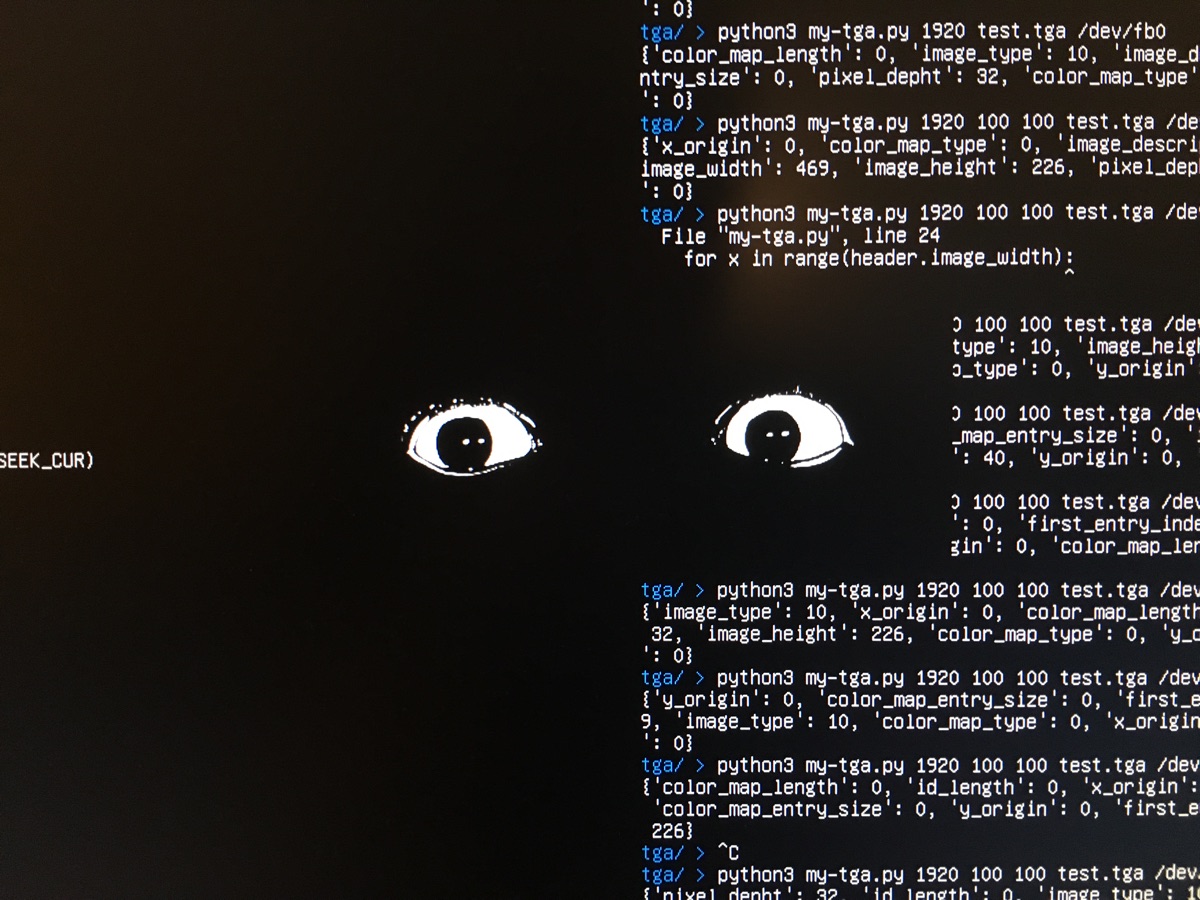

Okay, so at this point it’s not much of a stretch to render an image to the framebuffer. We’ve already got a smooth color gradient, and an image is just a file format we have to read pixel data from and then encode/dump to the framebuffer. I exported an image as a TGA file (a simple uncompressed format that’s easy to parse; I also tried BMP) and used pyTGA to write the image straight out. What’s neat is that you can use seek to skip over pixels, rather than writing out black pixels for the offset.
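The seek trick can be sketched on its own, independent of any image library. This version seeks to each row’s absolute start and writes the whole row in one call, which is also much faster in Python than one `write` per channel byte. It assumes 4 bytes per pixel and rows already encoded as blue, green, red, padding; `blit_rows` is a hypothetical name, and `io.BytesIO` stands in for /dev/fb0:

```python
import io


def blit_rows(f, rows, x_off, y_off, stride, bytes_per_pixel=4):
    """Write pre-encoded pixel rows into a framebuffer-like file at (x_off, y_off).

    Seeking to the start of each row leaves everything around the image
    untouched, instead of overwriting it with black padding.
    """
    for dy, row in enumerate(rows):
        f.seek((y_off + dy) * stride + x_off * bytes_per_pixel)
        f.write(row)  # one write per row instead of one per channel byte


# Stand-in for /dev/fb0: a 4x4 "screen" (16-byte rows) filled with 0xAA.
fake_fb = io.BytesIO(b"\xaa" * 64)
image_rows = [bytes((1, 2, 3, 0)) * 2, bytes((4, 5, 6, 0)) * 2]  # a 2x2 image
blit_rows(fake_fb, image_rows, x_off=1, y_off=2, stride=16)
```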

Here’s the little TGA renderer Python script:

```python
import os
import sys

import tga  # pyTGA

if len(sys.argv) != 6:
    print("Usage: tga.py xsize xoff yoff input output")
    sys.exit(1)

g_xsize = int(sys.argv[1])  # screen width in pixels
g_xoff = int(sys.argv[2])   # horizontal offset of the image
g_yoff = int(sys.argv[3])   # vertical offset of the image
g_input = sys.argv[4]
g_output = sys.argv[5]

img = tga.Image()
img.load(g_input)
header = img._header

with open(g_output, 'wb') as f:
    # Skip the rows above the image (4 bytes per pixel)
    f.seek(g_yoff * 4 * g_xsize, os.SEEK_CUR)
    for y in range(header.image_height):
        # Skip the pixels to the left of the image on this row
        f.seek(g_xoff * 4, os.SEEK_CUR)
        for x in range(header.image_width):
            p = img.get_pixel(y, x)
            # Write p[2], p[1], p[0] (blue, green, red if p is RGB), then padding
            f.write(p[2].to_bytes(1, byteorder='little'))
            f.write(p[1].to_bytes(1, byteorder='little'))
            f.write(p[0].to_bytes(1, byteorder='little'))
            f.write((0).to_bytes(1, byteorder='little'))
        # Skip the rest of the row to the right of the image
        f.seek((g_xsize - header.image_width - g_xoff) * 4, os.SEEK_CUR)
```

```
# Running in bash
> python3 tga.py 1920 750 300 test.tga /dev/fb0
```

It works! Image rendered straight to the framebuffer

Framebuffer Extras

This has been super useful in connecting a bunch of loose threads I’ve had in my mind. It explains, for example, why the final step of OpenGL is rendering out to a framebuffer, and why double buffering (rendering to a hidden buffer, then swapping) helps, especially given how slow my Python program is.
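A copy-based version of double buffering is easy to sketch: draw into an off-screen `bytearray`, then copy the finished frame to the framebuffer in one write, so slow drawing code never shows a half-drawn frame. The copy itself is still not synchronized to the display refresh. This assumes 32bpp with blue stored first and no row padding; `BackBuffer` is a hypothetical class, and real page flipping on fbdev would instead pan between two halves of the virtual screen with the `FBIOPAN_DISPLAY` ioctl, which is not shown:

```python
import io


class BackBuffer:
    """Off-screen 32bpp B, G, R, pad frame that is copied out in one write."""

    def __init__(self, width, height):
        self.stride = width * 4
        self.pixels = bytearray(self.stride * height)

    def fill_rect(self, x, y, w, h, r, g, b):
        """Draw a solid rectangle into the hidden buffer, one row slice at a time."""
        row = bytes((b, g, r, 0)) * w
        for yy in range(y, y + h):
            start = yy * self.stride + x * 4
            self.pixels[start:start + len(row)] = row

    def present(self, f):
        """Copy the finished frame to the framebuffer file in a single write."""
        f.seek(0)
        f.write(self.pixels)


# Stand-in for /dev/fb0: a 3x2 "screen".
screen = io.BytesIO(bytes(24))
frame = BackBuffer(3, 2)
frame.fill_rect(1, 0, 2, 2, 255, 0, 0)  # only the hidden buffer changes here
frame.present(screen)                   # the whole frame is written at once
```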

On the GUI side, from what I’ve found, a lot of custom GUI engines mostly just blit bitmaps together, and X11, along with many others (“MPlayer, links2, Netsurf, fbida,[2] and fim[3] and libraries such as GGI, SDL, GTK+, and Qt”), can render out to the framebuffer. Some university classes even use Raspberry Pis to mess around with the framebuffer, and there’s some useful reading there because the architecture is so straightforward.

How to use OpenCL to write directly to linux framebuffer with zero-copy?

I am using OpenCL to do some image processing and want to use it to write an RGBA image directly to the framebuffer. The workflow is:

1. Map the framebuffer to user space.
2. Create an OpenCL buffer using clCreateBuffer with the CL_MEM_ALLOC_HOST_PTR flag.
3. Use clEnqueueMapBuffer to map the results to the framebuffer.

However, it doesn’t work: nothing appears on the screen. Then I found that the virtual address mapped from the framebuffer is not the same as the virtual address mapped by OpenCL. Has anybody done a zero-copy move of data from the GPU to the framebuffer? Any help on what approach I should use for this? Some key code:

```c
if ((fd_fb = open("/dev/fb0", O_RDWR, 0)) < 0) {
    printf("Unable to open /dev/fb0\n");
    return -1;
}
fb0 = (unsigned char *)mmap(0, fb0_size, PROT_READ | PROT_WRITE, MAP_SHARED, fd_fb, 0);
...
cmDevSrc4 = clCreateBuffer(cxGPUContext, CL_MEM_READ_WRITE | CL_MEM_ALLOC_HOST_PTR,
                           sizeof(cl_uchar) * imagesize * 4, NULL, &status);
...
fb0 = (unsigned char *)clEnqueueMapBuffer(cqCommandQueue, cmDevSrc4, CL_TRUE, CL_MAP_READ, 0,
                                          sizeof(cl_uchar) * imagesize * 4, 0, NULL, NULL, &ciErr);
```

For zero-copy with an existing buffer, you need to use the CL_MEM_USE_HOST_PTR flag in the clCreateBuffer() call. In addition, you need to pass the pointer to the existing buffer (here, the mmap’d framebuffer) as the `host_ptr` argument, which is the second-to-last parameter.

I don’t know how the Linux framebuffer works internally, but it is possible that even with zero-copy from device to host, the data ends up being copied back to the GPU for display. So you might want to render the OpenCL buffer directly with OpenGL instead; check out the cl_khr_gl_sharing extension for OpenCL.

I don’t know OpenCL yet; I was just searching for how to write to the framebuffer from it and came across your post. Opening the device and mmapping it like in your code looks good.

That doesn’t always work; it depends on the computer. I’m on an old Dell Latitude D530, and not only can’t I write to the framebuffer, but there’s no GPU either, so there’s no advantage to using OpenCL over the CPU. If you have a /dev/fb0 and you can get something on the screen with

Then you might have a chance from OpenCL. With a Mali GPU, at least, there’s a way to pass a pointer from the CPU to the GPU. You may need to add some offset (true on a Raspberry Pi, I think). The display could also be double-buffered by Xorg; there are lots of reasons why it might not work.