- How to append contents of multiple files into one file

- 12 Answers

- how to merge 2 big files [closed]

- 3 Answers

- Can you merge two files without writing one file onto the other?

- Can we make this better than doubling the size of both files?

- Can we avoid using extra space?

- What about writing a little bit at a time, then deleting what we wrote?

- What about sparse files?

- How to Merge Multiple Files in Linux?

- Displaying the content together

- Merge multiple files in Linux and store them in another file

- Appending content to an existing file

- Using sed command to merge multiple files in Linux

- Automating the process using For loop

- Conclusion

How to append contents of multiple files into one file

and it did not work. I want my script to add a newline at the end of each text file. For example, given the files 1.txt, 2.txt, and 3.txt, put the contents of 1, 2, and 3 into 0.txt. How do I do it?

12 Answers

You need the cat (short for concatenate) command, with shell redirection (>) into your output file.
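A minimal sketch of that command, assuming the three files from the question sit in a scratch directory (the directory and the file contents are invented for the demo). The loop variant is one possible way to get a newline after each file, which is an assumption about what the asker wanted rather than something the answer spells out:

```shell
# Scratch directory with sample inputs (hypothetical contents, no trailing newlines).
workdir=$(mktemp -d)
printf 'first'  > "$workdir/1.txt"
printf 'second' > "$workdir/2.txt"
printf 'third'  > "$workdir/3.txt"

# Plain concatenation: > creates the output file or overwrites it.
cat "$workdir/1.txt" "$workdir/2.txt" "$workdir/3.txt" > "$workdir/0.txt"

# Variant that emits a newline after each file's contents.
for f in "$workdir/1.txt" "$workdir/2.txt" "$workdir/3.txt"; do
    cat "$f"
    echo
done > "$workdir/0_with_newlines.txt"
```

Redirecting the whole loop once, rather than appending inside it, means the output file is opened a single time and starts out empty.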

@blasto It depends. You would use >> to append one file onto another, whereas > overwrites the output file with whatever’s directed into it. As for the newline, is there a newline as the first character in file 1.txt? You can find out by using od -c and seeing whether the first character is a \n.

@blasto You’re definitely heading in the right direction. Bash certainly accepts the form {...} for filename matching, so perhaps the quotes messed things up a bit in your script? I always try working with things like this using ls in a shell. When I get the command right, I just cut-n-paste it into a script as is. You might also find the -x option useful in your scripts: it will echo the expanded commands in the script before execution.

To maybe stop somebody from making the same mistake: cat 1.txt 2.txt > 1.txt will just overwrite 1.txt with the content of 2.txt. The shell truncates 1.txt before cat gets to read it, so the command does not merge the two files into the first one.
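Two ways around that pitfall, sketched with invented file names and contents: append the second file onto the first, or build the merged result in a temporary file and rename it over the original only if cat succeeded.

```shell
workdir=$(mktemp -d)
printf 'one\n' > "$workdir/1.txt"
printf 'two\n' > "$workdir/2.txt"
printf 'aaa\n' > "$workdir/a.txt"
printf 'bbb\n' > "$workdir/b.txt"

# Option 1: >> never truncates 1.txt, so its content survives.
cat "$workdir/2.txt" >> "$workdir/1.txt"

# Option 2: write to a temporary file first, then replace the original.
cat "$workdir/a.txt" "$workdir/b.txt" > "$workdir/a.txt.tmp" &&
    mv "$workdir/a.txt.tmp" "$workdir/a.txt"
```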

Another option, for those of you who still stumble upon this post like I did, is to use find -exec :

find . -type f -name '*.txt' -exec cat {} + >> output.file

In my case, I needed a more robust option that would look through multiple subdirectories, so I chose to use find. Breaking it down:

find . : Look within the current working directory.

-type f : Only interested in files, not directories, etc.

-name '*.txt' : Whittle down the result set by name.

-exec cat {} + : Execute the cat command on the results. "+" means only 1 instance of cat is spawned (thx @gniourf_gniourf).

>> output.file : As explained in other answers, append the cat-ed contents to the end of an output file.

There are lots of flaws in this answer. First, the wildcard *.txt must be quoted (otherwise, the whole find command, as written, is useless). Another flaw comes from a gross misconception: the command that is executed is not cat >> 0.txt {}, but cat {}. Your command is in fact equivalent to { find . -type f -name *.txt -exec cat '{}' \; ; } >> 0.txt (I added grouping so that you realize what’s really happening). Another flaw is that find is going to find the file 0.txt, and cat will complain by saying that input file is output file.

Thanks for the corrections. My case was a little bit different and I hadn’t thought of some of those gotchas as applied to this case.

You should put >> output.file at the end of your command, so that you don’t induce anybody (including yourself) into thinking that find will execute cat {} >> output.file for every found file.

Starting to look really good! One final suggestion: use -exec cat {} + instead of -exec cat {} \; , so that only one instance of cat is spawned with several arguments ( + is specified by POSIX).

Good answer and word of warning: I modified mine to find . -type f -exec cat {} + >> outputfile.txt and couldn’t figure out why my output file wouldn’t stop growing into the gigs even though the directory was only 50 megs. It was because I kept appending outputfile.txt to itself! So just make sure to name that file correctly or place it in another directory entirely to avoid this.
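The points raised in these comments can be combined into one command. A sketch, assuming a scratch tree with invented file names: the pattern is quoted, {} + batches the files into as few cat invocations as possible, and the output lives outside the searched directory so find can never hand it back to cat.

```shell
# Demo setup (hypothetical names): a small tree with nested .txt files.
workdir=$(mktemp -d)
mkdir -p "$workdir/src/sub"
printf 'one\n' > "$workdir/src/1.txt"
printf 'two\n' > "$workdir/src/sub/2.txt"

# The redirection applies to find as a whole, not to each cat invocation.
find "$workdir/src" -type f -name '*.txt' -exec cat {} + > "$workdir/merged.out"

# find does not promise any particular order, so sort before inspecting.
sort "$workdir/merged.out"
```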

If you have a certain output type, then do something like this:

cat /path/to/files/*.txt >> finalout.txt

Keep in mind that you are losing the possibility to maintain merge order though. This may affect you if you have your files named, e.g., file_1, file_2, … file_11, because the shell sorts glob matches as strings, so file_11 comes before file_2.

If all your files are named similarly you could simply do:

If all your files are in a single directory you can simply do

Files 1.txt, 2.txt, ... will go into 0.txt

Already answered by Eswar. Keep in mind that you are losing the possibility to maintain merge order though. This may affect you if you have your files named, e.g., file_1, file_2, … file_11, because the shell sorts glob matches as strings, so file_11 comes before file_2.
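One way to keep numeric order is to sort the names on their numeric part before handing them to cat. This is a sketch under assumptions: the file names are invented, they contain no whitespace (xargs splits on it), and the number is the part after the single underscore.

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1
for n in 1 2 11; do
    printf 'file %s\n' "$n" > "file_$n.txt"
done

# Glob order is string order, so file_11.txt is not last here.
cat file_*.txt > glob_order.out

# Sort numerically on the field after the underscore, then concatenate.
printf '%s\n' file_*.txt | sort -t_ -k2,2n | xargs cat > numeric_order.out

cat numeric_order.out
```

Zero-padding the numbers when the files are created (file_01, file_02, ...) avoids the problem without any sorting step.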

for i in ; do cat "$i.txt" >> 0.txt; done

I found this page because I needed to join 952 files together into one. I found this to work much better if you have many files. It loops over however many numbers you need and cats each file, using >> to append it onto the end of 0.txt.
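The list after "in" did not survive in the loop above. A sketch of the same idea, assuming files numbered 1.txt to 3.txt (the count and contents are placeholders). In Bash the list could be written as a brace range such as {1..3}; the counter loop below does the same thing in any POSIX shell.

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1
printf 'a\n' > 1.txt
printf 'b\n' > 2.txt
printf 'c\n' > 3.txt

: > 0.txt            # start from an empty output file, since >> only appends
i=1
while [ "$i" -le 3 ]; do
    cat "$i.txt" >> 0.txt
    i=$((i + 1))
done
```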

as brought up in the comments:

sed r 1.txt 2.txt 3.txt > merge.txt

sed h 1.txt 2.txt 3.txt > merge.txt

sed -n p 1.txt 2.txt 3.txt > merge.txt # -n is mandatory here

sed wmerge.txt 1.txt 2.txt 3.txt

Note that the last line also writes to merge.txt (not wmerge.txt!). You can use w"merge.txt" to avoid confusion with the file name, and -n for silent output.

Of course, you can also shorten the file list with wildcards. For instance, in case of numbered files as in the above examples, you can specify the range with braces in this way:

If your files contain headers and you want to remove them in the output file, you can use:

for f in *.txt; do sed '2,$!d' "$f" >> 0.out; done

All of the (text) files into one

find . | xargs cat > outfile

xargs makes the output lines of find . the arguments of cat.

find has many options, like -name '*.txt' or -type.

You should check them out if you want to use it in your pipeline.

You should explain what your command does. Btw, you should use find with -print0 and xargs with -0 in order to avoid some caveats with special filenames.
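A sketch of the -print0 / -0 pairing suggested in that comment, assuming a find and xargs that support these options (GNU and BSD versions do). The file names are invented, and one of them contains spaces to show why the null separator matters.

```shell
workdir=$(mktemp -d)
mkdir -p "$workdir/in"
printf 'alpha\n' > "$workdir/in/plain.txt"
printf 'beta\n'  > "$workdir/in/name with spaces.txt"

# Names are passed null-terminated, so spaces and newlines in them are safe.
# The output file sits outside the searched directory on purpose.
find "$workdir/in" -type f -name '*.txt' -print0 | xargs -0 cat > "$workdir/outfile"

sort "$workdir/outfile"
```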

If the original file contains non-printable characters, plain cat copies them unchanged. With ‘cat -v’, however, the non-printables are converted to visible character strings, so the output file no longer contains the actual non-printable characters from the original file. With a small number of files, an alternative might be to open the first file in an editor (e.g. vim) that handles non-printing characters. Then maneuver to the bottom of the file and enter ":r second_file_name". That will pull in the second file, including non-printing characters. The same could be done for additional files. When all files have been read in, enter ":w". The end result is that the first file will now contain what it did originally, plus the content of the files that were read in.
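How cat treats non-printable bytes on a given system is easy to check. A small sketch, assuming a POSIX shell with printf and cmp (file names and byte values are invented): it concatenates two files containing control characters and compares the result byte for byte with the expected sequence.

```shell
workdir=$(mktemp -d)
printf 'a\001b\033c\n' > "$workdir/first.bin"
printf 'd\002e\n'      > "$workdir/second.bin"

# Plain cat, no -v: the bytes should pass through untouched.
cat "$workdir/first.bin" "$workdir/second.bin" > "$workdir/joined.bin"

# Build the expected byte sequence independently and compare.
printf 'a\001b\033c\nd\002e\n' > "$workdir/expected.bin"
cmp "$workdir/joined.bin" "$workdir/expected.bin" && echo "bytes preserved"
```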

Send multiple files to one file (textall.txt):

how to merge 2 big files [closed]

Suppose I have 2 files of 100G each, and I want to merge them into one and then delete them. In Linux we can use cat file1 file2 > final_file, but that needs to read 2 big files and then write a bigger file. Is it possible to just append one file to the other, so that no IO is required? Since the metadata of a file contains its location and length, I am wondering whether it is possible to change the metadata to do the merge, so that no IO will happen.

Someone who finds their way to this question might find "hungrycat" useful. hungrycat prints contents of a file on the standard output, while simultaneously freeing disk space occupied by the file: github.com/jwilk/hungrycat

3 Answers

Can you merge two files without writing one file onto the other?

Only in obscure theory. Since disk storage is always based on blocks and filesystems therefore store things on block boundaries, you could only append one file to another without rewriting if the first file ended perfectly on a block boundary. There are some rare filesystem configurations that use tail packing, but that would only help if the first file were already using the tail block of the previous file.

Unless that perfect scenario occurs or your filesystem is able to mark a partial block in the middle of the file (I’ve never heard of this), this won’t work. Just to kick the edge case around, there’s also no way, short of changing the kernel interface, to make such a call (re: Link to a specific inode).

Can we make this better than doubling the size of both files?

Yes, we can use the append ( >> ) operation instead.

That will still result in using all the space consumed by file2 twice over until we can delete it.
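A minimal sketch of the append approach, with small stand-in files instead of the 100G ones (names and contents are invented). file2 is deleted only if the append succeeded, so a failed write, for example on a full disk, does not cost the only copy.

```shell
workdir=$(mktemp -d)
printf 'part one\n' > "$workdir/file1"
printf 'part two\n' > "$workdir/file2"

# Only file2 is read and rewritten; file1 is extended in place.
cat "$workdir/file2" >> "$workdir/file1" && rm "$workdir/file2"
```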

Can we avoid using extra space?

No. Unless somebody comes back with something I don’t know, you’re basically out of luck there. It’s possible to truncate a file, forgetting about the existence of the end of it, but there is no way to forget about the existence of the start unless we get back to modifying inodes directly and having to alter the kernel interface to the filesystem, since that’s definitely not a POSIX operation.

What about writing a little bit at a time, then deleting what we wrote?

No again. Since we can’t chop the start of a file off, we’d have to rewrite everything from the point of interest all the way to the end of the file. This would be very costly for IO and only useful after we’ve already read half the file.

What about sparse files?

Maybe! Sparse files allow us to store a long string of zeroes without using up nearly that much space. If we were to read file2 in large chunks starting at the end, we could write those blocks to the end of file1. file1 would immediately look (and read) as if it were the same size as both, but it would be corrupted until we were done because everything we hadn’t written would be full of zeroes.

Explaining all this is another answer in itself, but if you can do a sparse allocation, you would be able to use only your chunk read size + a little bit extra in disk space to perform this operation. For a reference talking about sparse blocks in the middle of files, see http://lwn.net/Articles/357767/ or do a search involving the term SEEK_HOLE.

Why is this "maybe" instead of "yes"? Two parts: you’d have to write your own tool (at least we’re on the right site for that), and sparse files are not universally respected by file systems and other processes alike. Fortunately you probably won’t have to worry about other processes respecting your file, but you will have to worry about setting the right flags and making sure your filesystem is amenable. Last of all, you’ll still be reading and re-writing the length of file2, which isn’t what you want. This method does mean you can append with just a small amount of disk space, though, rather than using at least 2*file2 of space.
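The chunk-from-the-end idea can be sketched with standard tools. This is a toy under stated assumptions, not a production tool: it assumes GNU dd and GNU truncate, the file names, contents, and 4-byte chunk size are invented, and the sizes are far too small for any filesystem to store real holes, so it only demonstrates the offset arithmetic. It is also not crash-safe, because file1 is inconsistent until the loop finishes.

```shell
workdir=$(mktemp -d)
file1="$workdir/file1"
file2="$workdir/file2"
printf 'hello ' > "$file1"
printf 'big world\n' > "$file2"

chunk=4                                   # bytes per step; megabytes in real use
size1=$(($(wc -c < "$file1")))            # original length of file1
remaining=$(($(wc -c < "$file2")))        # bytes of file2 not yet moved

while [ "$remaining" -gt 0 ]; do
    start=$((remaining - chunk))
    if [ "$start" -lt 0 ]; then start=0; fi
    len=$((remaining - start))
    # Copy bytes [start, remaining) of file2 to their final offset in file1.
    # conv=notrunc stops dd from truncating file1; seeking past its end
    # leaves a gap that later iterations fill in. bs=1 is slow but keeps
    # the offsets in plain bytes.
    dd if="$file2" of="$file1" bs=1 skip="$start" seek=$((size1 + start)) \
       count="$len" conv=notrunc 2>/dev/null
    # Drop the tail of file2 that has just been copied.
    truncate -s "$start" "$file2"
    remaining=$start
done
rm "$file2"
cat "$file1"
```

At any moment the extra disk space in use is roughly one chunk, which is the saving the answer describes, at the cost of still reading and rewriting all of file2.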

How to Merge Multiple Files in Linux?

Let’s look at the different ways in which you can merge multiple files in Linux. We’ll mainly use the cat command for this purpose. So let us begin!

For the rest of this tutorial we will consider three files. Let’s create these files:

We’ll use the cat command to create these files, but you can also use touch or nano to create and edit them.

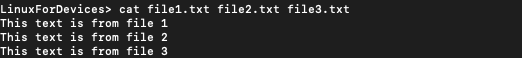

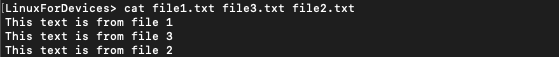

Displaying the content together

Since cat is short for concatenate, it is the first choice for concatenating content.

$ cat file1.txt file2.txt file3.txt

Note that the order in which the content appears is the order in which the files appear in the command. We can change the order in the command and verify.

Merge multiple files in Linux and store them in another file

To store the content that was displayed on the screen in the previous example, use the redirection operator (>).

$ cat file1.txt file2.txt file3.txt > merge.txt

The output has been stored in a file. An important thing to note here is that the shell creates the output file first if it doesn’t exist. The single redirection operator overwrites the file rather than appending to it. To append the content at the end, consider the next example.

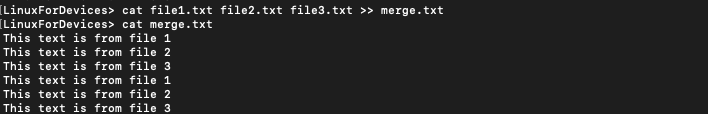

Appending content to an existing file

To append the merged content of multiple files to an existing file, use the double redirection operator (>>) along with the cat command.

$ cat file1.txt file2.txt file3.txt >> merge.txt

Rather than overwriting the contents of the file, this command appends the content at the end of the file. Ignoring such fine detail could lead to an unwanted blunder.

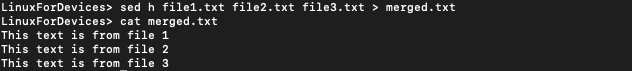
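The difference between the two operators is easiest to see side by side. A sketch with invented file contents, where merge.txt already holds one line before either command runs:

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1
printf 'one\n'   > file1.txt
printf 'two\n'   > file2.txt
printf 'three\n' > file3.txt
printf 'existing line\n' > merge.txt

# >> keeps what merge.txt already holds and adds the three files after it.
cat file1.txt file2.txt file3.txt >> merge.txt
cp merge.txt appended_copy.txt   # keep a copy of the appended result

# > starts from an empty merge.txt, so the earlier content is gone.
cat file1.txt file2.txt file3.txt > merge.txt
```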

Using sed command to merge multiple files in Linux

The sed command, primarily used for text transformation and manipulation, can also be used to merge files.

$ sed h file1.txt file2.txt file3.txt > merged.txt

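The h command copies each line into the hold space, which has no effect on the output. The merge happens because sed, by default, prints every line of every input file in the order the files are listed, and the redirection writes that output to the specified file.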

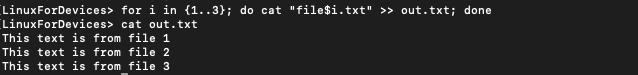
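A quick way to confirm that sed and cat produce the same merged file, sketched with invented contents. It uses sed -n p (print each line explicitly), and every sample file ends with a newline, which is the case where the two outputs are expected to match byte for byte.

```shell
workdir=$(mktemp -d)
cd "$workdir" || exit 1
printf 'one\n'   > file1.txt
printf 'two\n'   > file2.txt
printf 'three\n' > file3.txt

sed -n p file1.txt file2.txt file3.txt > merged_sed.txt
cat file1.txt file2.txt file3.txt > merged_cat.txt

# cmp is silent and returns success when the files are identical.
cmp merged_sed.txt merged_cat.txt && echo "identical"
```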

Automating the process using For loop

A for loop can save the effort of explicitly mentioning the file names. This only works if the file names follow a pattern. In our case the file names follow the pattern fileN.txt, where N is a number, and a for loop can take advantage of that.

$ for i in {1..3}; do cat "file$i.txt" >> out.txt; done

The code simply exploits the fact that the files are named in a similar pattern. This should motivate you to think about how you want to name your files going forward.

Conclusion

In this tutorial, we covered some of the ways to merge multiple files in Linux. The process of merging is not exclusive to text files. Other files, such as logs and system reports, can also be merged. Using a for loop to merge files saves a lot of effort when the number of files to be merged is large.