The Initial Performance Of NVIDIA’s R515 Open-Source Linux GPU Kernel Driver

As outlined in yesterday’s extensive article about NVIDIA’s new open-source Linux kernel GPU driver, the driver is currently considered “alpha quality” for consumer GeForce RTX GPUs, as NVIDIA’s initial focus has been on data center GPU support. Still, with plenty of Turing/Ampere GPUs around, I’ve been trying out this new open-source kernel driver on consumer GPUs. In particular, I’ve been curious how it performs relative to the default, existing closed-source kernel driver. Here are some early benchmarks.

The license field reported by modinfo nvidia tells you whether you are using the open-source (MIT/GPL dual-licensed) driver or not.
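A quick way to run that check from a shell is sketched below. It assumes the open modules report a license string containing MIT or GPL and the closed module reports NVIDIA; the helper name `classify_nvidia_license` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: map a module license string to the driver flavor.
# Assumption: the open kernel modules report a dual MIT/GPL license,
# while the closed-source module reports "NVIDIA".
classify_nvidia_license() {
    case "$1" in
        *MIT*|*GPL*) echo "open-source" ;;
        *NVIDIA*)    echo "closed-source" ;;
        *)           echo "unknown" ;;
    esac
}

# Query the installed nvidia module, if any; -F prints a single modinfo field.
license=$(modinfo -F license nvidia 2>/dev/null || true)
echo "nvidia kernel module: $(classify_nvidia_license "$license") (license: ${license:-not found})"
```

On a machine without the nvidia module loaded or installed, the script simply reports "unknown".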

My testing of the NVIDIA 515.43.04 beta driver while opting for the open-source kernel driver has been going well. I’ve tried several different GeForce RTX GPUs, though for this article I am benchmarking with the high-end GeForce RTX 3090.

In short, to use this open-source kernel driver with the closed-source user-space driver components, pass the -m=kernel-open argument to the NVIDIA driver installer. This builds the open-source kernel driver for your system rather than using the default binary modules. In addition, you need to set the NVreg_OpenRmEnableUnsupportedGpus=1 module option so that this “alpha quality” driver will load on consumer/workstation GPUs. With these two changes made, NVIDIA Turing/Ampere GPUs should initialize successfully with the open-source kernel driver build.
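The two changes can be sketched roughly as follows. The installer file name and the `nvidia-open.conf` file name are assumptions for illustration. `CONF_DIR` defaults to a scratch directory so the snippet can be tried without touching the system; a real setup would place the file in /etc/modprobe.d as root.

```shell
#!/bin/sh
# 1. Build the open kernel modules instead of the default binary ones.
#    Shown as a comment so this sketch has no side effects; the .run file
#    name is an assumed example for the 515.43.04 beta.
#    sh ./NVIDIA-Linux-x86_64-515.43.04.run -m=kernel-open

# 2. Allow the open modules to load on consumer/workstation GPUs.
#    CONF_DIR is a scratch directory by default (assumption for safe testing).
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"
printf 'options nvidia NVreg_OpenRmEnableUnsupportedGpus=1\n' \
    > "$CONF_DIR/nvidia-open.conf"

echo "Wrote $CONF_DIR/nvidia-open.conf:"
cat "$CONF_DIR/nvidia-open.conf"
```

Running it with `CONF_DIR=/etc/modprobe.d` as root would write the option where modprobe picks it up.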

One additional tip: if you normally just pass “nomodeset” on the kernel command line to prevent the Nouveau DRM driver from loading so that you can cleanly use the NVIDIA proprietary driver stack, this interferes with the open-source kernel driver. On one of my systems nomodeset was set and worked fine with the closed driver, but when booting with the open driver the screen wouldn’t light up, and after SSH’ing in there were many NVRM messages such as “RmInitAdapter failed” and “rm_init_adapter failed”. Switching to blacklisting the Nouveau DRM driver rather than using the nomodeset option works around that issue.
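A minimal sketch of that switch, under a few assumptions: the helper `has_nomodeset` and the file name `blacklist-nouveau.conf` are illustrative, and `CONF_DIR` defaults to a scratch directory instead of /etc/modprobe.d so nothing on the system is modified.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a kernel command line contains "nomodeset".
has_nomodeset() {
    case " $1 " in
        *" nomodeset "*) return 0 ;;
        *)               return 1 ;;
    esac
}

# Check the running kernel's command line (Linux exposes it in /proc/cmdline).
if [ -r /proc/cmdline ] && has_nomodeset "$(cat /proc/cmdline)"; then
    echo "nomodeset is set; consider removing it and blacklisting nouveau instead"
fi

# Blacklist Nouveau through modprobe.d rather than relying on nomodeset.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"
cat > "$CONF_DIR/blacklist-nouveau.conf" <<'EOF'
blacklist nouveau
options nouveau modeset=0
EOF
cat "$CONF_DIR/blacklist-nouveau.conf"
```

On many distributions the initramfs has to be regenerated after adding such a file; the exact command varies by distribution.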

With the GeForce RTX 3090, I was quite curious to see how this open-source kernel driver code performs against the closed-source driver, with the same OpenGL/Vulkan/OpenCL/CUDA NVIDIA 515.43.04 user-space driver components used each time. Again, the consumer GPU support is currently considered “alpha quality”, and NVIDIA acknowledges that there are performance issues still to be addressed with this open-source kernel driver, including some power management features yet to be implemented.

So I fired up a number of Linux gaming benchmarks, followed by GPU compute benchmarks, to look at the difference between these two kernel driver options on the RTX 3090.

How to improve the performance of your nVidia card in Linux

If you use your computer simply to read email, browse the web, or edit the occasional text file, the free Nouveau drivers will be more than enough. If, however, you are into games, video editing, or HD movie playback, there is no escape: the proprietary drivers are the best answer, for now.

Even so, the proprietary Linux drivers do not match the performance of the Windows ones. To get a little closer to the latter, some settings need to be changed.

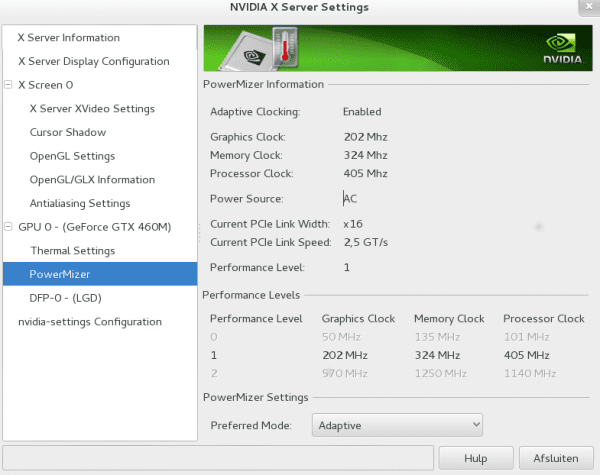

The setting to be changed is called “PowerMizer”. Its function is to adapt the performance of the card to the needs of the moment or to the power source (battery or AC).

To get a good idea of what I mean, you can open nvidia-settings from a terminal and go to the PowerMizer tab.

nvidia-settings: tab to configure powermizer

Ideally, you should be able to change the PowerMizer settings directly from nvidia-settings, but for some reason it doesn’t save the changes. Our goal is to change the PreferredMode option from Adaptive to Prefer Maximum Performance. How? By editing our Xorg configuration file.

1. Open a terminal and open the file in the editor of your preference, for example:

sudo nano /etc/X11/xorg.conf.d/20-nvidia.conf

2. In the Device section add a line specifying the PowerMizer configuration that best suits your needs:

# "adaptive" for any power source
Option "RegistryDwords" "PowerMizerEnable=0x1; PerfLevelSrc=0x3333"
# batt = max power save, AC = max power save
Option "RegistryDwords" "PowerMizerEnable=0x1; PerfLevelSrc=0x2222; PowerMizerDefault=0x3; PowerMizerDefaultAC=0x3"
# batt = adaptive, AC = max performance (my favorite)
Option "RegistryDwords" "PowerMizerEnable=0x1; PerfLevelSrc=0x3322; PowerMizerDefaultAC=0x1"
# batt = max power save, AC = max performance
Option "RegistryDwords" "PowerMizerEnable=0x1; PerfLevelSrc=0x2222; PowerMizerDefault=0x3; PowerMizerDefaultAC=0x1"
# batt = max power save, AC = adaptive
Option "RegistryDwords" "PowerMizerEnable=0x1; PerfLevelSrc=0x2233; PowerMizerDefault=0x3"

The preceding options are mutually exclusive: choose one and add it to the Device section of your Xorg configuration file.

3. In my case, as my computer is a desktop PC (always on AC power), I applied the option that gives maximum performance on AC:

# batt = adaptive, AC = max performance (my favorite)
Option "RegistryDwords" "PowerMizerEnable=0x1; PerfLevelSrc=0x3322; PowerMizerDefaultAC=0x1"

That is how my full configuration file ended up.

In this way, I ensured the maximum performance of my humble nVidia GeForce 7200.

4. Once the changes are made, reboot.
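Steps 1 to 3 can be sketched as a small script. This is only an illustration of what a complete file could look like, not the author's actual file: the Identifier value is an arbitrary label, and `XORG_DIR` defaults to a scratch directory, whereas the real file lives in /etc/X11/xorg.conf.d and must be written as root.

```shell
#!/bin/sh
# Sketch only: write a Device section containing one PowerMizer option.
# XORG_DIR is a scratch directory by default (assumption for safe testing).
XORG_DIR="${XORG_DIR:-$(mktemp -d)}"

# The Identifier value is an arbitrary label chosen for this example.
cat > "$XORG_DIR/20-nvidia.conf" <<'EOF'
Section "Device"
    Identifier "Nvidia Card"
    Driver     "nvidia"
    # batt = adaptive, AC = max performance
    Option     "RegistryDwords" "PowerMizerEnable=0x1; PerfLevelSrc=0x3322; PowerMizerDefaultAC=0x1"
EndSection
EOF

# The variants are mutually exclusive, so count the RegistryDwords options.
count=$(grep -c 'Option.*RegistryDwords' "$XORG_DIR/20-nvidia.conf")
echo "RegistryDwords options in file: $count"
```

Keeping a single RegistryDwords line in the Device section matches the rule that only one of the variants should be chosen.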

If that doesn’t work, some users report that running

nvidia-settings -a "[gpu:0]/GPUPowerMizerMode=1"

corrects the problem. The catch is that this command has to be run every time the computer starts. That is not very complicated either, although the method varies with the desktop environment you use (KDE, XFCE, etc.).
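One desktop-independent way to rerun the command at login is an XDG autostart entry, which KDE, XFCE, and most other desktop environments honor. The sketch below is an assumption about how this could be set up, not something from the original tutorial: the file name is arbitrary, and `AUTOSTART_DIR` defaults to a scratch directory, while the usual per-user location is ~/.config/autostart.

```shell
#!/bin/sh
# Sketch: create an XDG autostart entry that reapplies the setting at login.
# AUTOSTART_DIR is a scratch directory by default (assumption for safe testing).
AUTOSTART_DIR="${AUTOSTART_DIR:-$(mktemp -d)}"

cat > "$AUTOSTART_DIR/nvidia-powermizer.desktop" <<'EOF'
[Desktop Entry]
Type=Application
Name=NVIDIA PowerMizer maximum performance
Exec=nvidia-settings -a "[gpu:0]/GPUPowerMizerMode=1"
EOF

cat "$AUTOSTART_DIR/nvidia-powermizer.desktop"
```

Setting `AUTOSTART_DIR="$HOME/.config/autostart"` before running it places the entry where the desktop session looks for it.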

Finally, one last comment. You may not notice much of a difference in performance during ordinary, everyday use of your card (web browsing, office work, etc.). In my case, this trick eliminated the “flickering” or “stuttering” during HD video playback and improved performance in Wine games.

In an upcoming installment, I’ll share an additional trick to permanently remove flickering from HD video playback without disabling the Compton compositor.
