How to list the size of each file and directory and sort by descending size in Bash?

I found that there is no easy way to get the size of a directory in Bash. I want that when I type ls —

What exactly do you mean by the "size" of a directory? The number of files under it (recursively or not)? The sum of the sizes of the files under it (recursively or not)? The disk size of the directory itself? (A directory is implemented as a special file containing file names and other information.)

@KeithThompson @KitHo du command estimates file space usage so you cannot use it if you want to get the exact size.

@ztank1013: Depending on what you mean by "the exact size", du (at least the GNU coreutils version) probably has an option to provide the information.

12 Answers

Simply navigate to the directory and run the following command:

OR add -h for human readable sizes and -r to print bigger directories/files first.

    du -a -h --max-depth=1 | sort -hr

du -h requires sort -h too, to ensure that, say, 981M sorts before 1.3G; with sort -n only the numbers would be taken into account and they'd be the wrong way round.
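To see why the suffix-aware sort matters, here is a minimal sketch using made-up size strings of the kind du -h prints. It assumes a sort that supports -h, as the GNU coreutils version does.

```shell
# Sample sizes in the format du -h uses (illustrative values only)
sizes='981M
1.3G
12K'

# sort -n compares only the leading number and ignores the unit suffix
largest_numeric=$(printf '%s\n' "$sizes" | sort -n | tail -n 1)

# sort -h takes the K/M/G suffix into account
largest_human=$(printf '%s\n' "$sizes" | sort -h | tail -n 1)

echo "sort -n puts this last: $largest_numeric"
echo "sort -h puts this last: $largest_human"
```

With sort -n the 981M entry ends up last even though 1.3G is bigger, which is the mix-up described above.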

This doesn’t list the size of the individual files within the current directory, only the size of its subdirectories and the total size of the current directory. How would you include individual files in the output as well (to answer OP’s question)?

@ErikTrautman to list the files also you need to add -a and use --all instead of --max-depth=1 like so: du -a -h --all | sort -h

Apparently the --max-depth option is not in Mac OS X's version of the du command. You can use the following instead.

Unfortunately this does not show the files, but only the folder sizes. -a does not work with -d either.

To show files and folders, I combined 2 commands: l -hp | grep -v / && du -h -d 1 , which shows the normal file size from ls for files, but uses du for directories.

(this will not show hidden (.dotfiles) files)

Use du -sm for Mb units etc. I always use

because the total line ( -c ) will end up at the bottom for obvious reasons 🙂

PS:

- See comments for handling dotfiles

- I frequently use e.g. ‘du -smc /home// | sort -n |tail’ to get a feel of where exactly the large bits are sitting

du --max-depth=1 | sort -n or find . -mindepth 1 -maxdepth 1 | xargs du -s | sort -n for including dotfiles too.

@arnaud576875 find . -mindepth 1 -maxdepth 1 -print0 | xargs -0 du -s | sort -n if some of the found paths could contain spaces.
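The find-based variant from these comments can be wrapped in a small function. This is only a sketch: list_sizes is a made-up name, and the -r flag (skip running du when find returns nothing) is a GNU xargs extension that I am assuming is available.

```shell
list_sizes() {
    # $1: directory to inspect (defaults to the current directory)
    # -mindepth 1 -maxdepth 1: direct children only, dotfiles included
    # -print0 / -0: NUL-separated names, so spaces in paths are safe
    find "${1:-.}" -mindepth 1 -maxdepth 1 -print0 \
        | xargs -0 -r du -s \
        | sort -n
}
```

Calling list_sizes /some/dir prints one line per entry, smallest first, with the size in du's default block unit.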

This is a great variant to get a human readable view of the biggest: sudo du -smch * | sort -h | tail

Command

Output

    3,5M    asdf.6000.gz
    3,4M    asdf.4000.gz
    3,2M    asdf.2000.gz
    2,5M    xyz.PT.gz
    136K    xyz.6000.gz
    116K    xyz.6000p.gz
    88K     test.4000.gz
    76K     test.4000p.gz
    44K     test.2000.gz
    8,0K    desc.common.tcl
    8,0K    wer.2000p.gz
    8,0K    wer.2000.gz
    4,0K    ttree.3

Explanation

- du displays "disk usage"

- h is for "human readable" (both in sort and in du)

- max-depth=0 means du will not show sizes of subfolders (remove that if you want to show all sizes of every file in every sub-, subsub-, ... folder)

- r is for "reverse" (biggest file first)
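The command this explanation refers to is not preserved in this copy. Judging only from the options explained, it was probably something like the pipeline below; treat this as a reconstruction, not the answerer's exact text. The function name sizes_desc is made up, and --max-depth is a GNU du option.

```shell
sizes_desc() {
    # Run in a subshell so the caller's working directory is left alone.
    # du -h --max-depth=0: one human-readable total per argument, no subfolder lines
    # sort -hr: suffix-aware sort, reversed so the biggest entry comes first
    ( cd "${1:-.}" && du -h --max-depth=0 -- * | sort -hr )
}
```

Because the shell expands *, hidden entries (dotfiles) are not included.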

ncdu

When I came to this question, I wanted to clean up my file system. The command line tool ncdu is way better suited to this task.

Just type ncdu [path] in the command line. After a few seconds for analyzing the path, you will see something like this:

    ncdu 1.11 ~ Use the arrow keys to navigate, press ? for help
    --- / ---------------------------------------------------------
    .  96,1 GiB [##########] /home
    .  17,7 GiB [#         ] /usr
    .   4,5 GiB [          ] /var
        1,1 GiB [          ] /lib
      732,1 MiB [          ] /opt
    . 275,6 MiB [          ] /boot
      198,0 MiB [          ] /storage
    . 153,5 MiB [          ] /run
    .  16,6 MiB [          ] /etc
       13,5 MiB [          ] /bin
       11,3 MiB [          ] /sbin
    .   8,8 MiB [          ] /tmp
    .   2,2 MiB [          ] /dev
    !  16,0 KiB [          ] /lost+found
        8,0 KiB [          ] /media
        8,0 KiB [          ] /snap
        4,0 KiB [          ] /lib64
    e   4,0 KiB [          ] /srv
    !   4,0 KiB [          ] /root
    e   4,0 KiB [          ] /mnt
    e   4,0 KiB [          ] /cdrom
    .   0,0   B [          ] /proc
    .   0,0   B [          ] /sys
    @   0,0   B [          ]  initrd.img.old
    @   0,0   B [          ]  initrd.img
    @   0,0   B [          ]  vmlinuz.old
    @   0,0   B [          ]  vmlinuz

Delete the currently highlighted element with d, exit with CTRL + c.

ls -S sorts by size. Then, to show the size too, ls -lS gives a long ( -l ), sorted by size ( -S ) display. I usually add -h too, to make things easier to read, so, ls -lhS .

Ah, sorry, that was not clear from your post. You want du; it seems someone has already posted it.

@sehe: Depends on your definition of real — it is showing the amount of space the directory is using to store itself. (It's just not also adding in the size of the subentries.) It's not a random number, and it's not always 4KiB.

    find . -mindepth 1 -maxdepth 1 -type d | parallel du -s | sort -n

I think I might have figured out what you want to do. This will give a sorted list of all the files and all the directories, sorted by file size and size of the content in the directories.

    (find . -depth 1 -type f -exec ls -s {} \;; find . -depth 1 -type d -exec du -s {} \;) | sort -n

[enhanced version]

This is going to be much faster and more precise than the initial version below, and will output the sum of the sizes of all files under the current directory:

    echo `find . -type f -exec stat -c %s {} \; | tr '\n' '+' | sed 's/+$//g'` | bc

The stat -c %s command on a file will return its size in bytes. The tr command here is used to overcome xargs command limitations (apparently piping to xargs splits the results over several lines, breaking the logic of my command). Hence tr takes care of replacing each line feed with a + (plus) sign. sed has the sole purpose of removing the last + sign from the resulting string, to avoid complaints from the final bc (basic calculator) command that, as usual, does the math.

Performance: I tested it on several directories and on up to ~150,000 files (the current number of files on my Fedora 15 box), with what I believe is an amazing result:

    # time echo `find / -type f -exec stat -c %s {} \; | tr '\n' '+' | sed 's/+$//g'` | bc
    12671767700

    real    2m19.164s
    user    0m2.039s
    sys     0m14.850s

Just in case you want to make a comparison with the du -sb / command, it will output an estimated disk usage in bytes ( -b option)

As I was expecting it is a little larger than my command calculation because the du utility returns allocated space of each file and not the actual consumed space.
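For anyone who wants to reproduce the comparison on a small tree, here is a rough sketch. The function name compare_totals is made up, and it assumes GNU stat and GNU du. One thing to keep in mind when reading the two numbers: du also counts the directories themselves, while the find pipeline only adds up regular files, so some gap is expected for that reason alone.

```shell
compare_totals() {
    dir=${1:-.}
    # Sum of the byte sizes of regular files only; "{} +" batches many files per stat call
    files_total=$(find "$dir" -type f -exec stat -c %s {} + \
        | awk '{ s += $1 } END { printf "%.0f\n", s }')
    # du -sb: apparent size in bytes, directories included
    du_total=$(du -sb "$dir" | cut -f1)
    echo "regular files: $files_total bytes"
    echo "du -sb:        $du_total bytes"
}
```

Using "{} +" instead of "{} \;" avoids starting one stat process per file, which tends to matter on large trees.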

[initial version]

You cannot use du command if you need to know the exact sum size of your folder because (as per man page citation) du estimates file space usage. Hence it will lead you to a wrong result, an approximation (maybe close to the sum size but most likely greater than the actual size you are looking for).

I think there might be different ways to answer your question but this is mine:

    ls -l $(find . -type f | xargs) | cut -d" " -f5 | xargs | sed 's/\ /+/g'| bc

It finds all files under the . directory (replace . with whatever directory you like), hidden files included, and (using xargs) outputs their names on a single line, then produces a detailed list using ls -l. This (sometimes) huge output is piped to the cut command, and only the fifth field ( -f5 ), which is the file size in bytes, is taken and piped again to xargs, which again produces a single line of sizes separated by blanks. Now a bit of sed magic takes place, replacing each blank space with a plus ( + ) sign, and finally bc (basic calculator) does the math.

It might need additional tuning and you may have ls command complaining about arguments list too long.

Directory and disk sizes in Linux. The df and du commands

Let's look at how to use the df and du commands to view free disk space and directory sizes in Linux.

Free disk space (df)

To view free and used space on disk partitions in Linux, you can use the df command.

To start with, you can simply run df without any arguments to get the used and free space on your disks. By default, though, the output is not very readable: for example, sizes are shown in kilobytes (1K blocks).

    df
    Filesystem     1K-blocks      Used Available Use% Mounted on
    udev             1969036         0   1969036   0% /dev
    tmpfs             404584      6372    398212   2% /run
    /dev/sda9      181668460  25176748 147240368  15% /
    ...
    /dev/sda1      117194136 103725992  13468144  89% /media/yuriy/5EB893BEB893935F
    /dev/sda6      144050356 121905172  14804772  90% /media/yuriy/2f24...d9075

Note: df does not show information about unmounted disks.

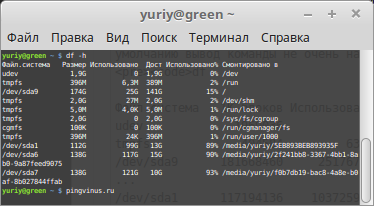
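When df output is consumed by a script rather than read by a person, the column layout matters more than readability. A minimal sketch, assuming the POSIX -P output format and a filesystem name without spaces (usage_percent is a made-up helper name):

```shell
usage_percent() {
    # -P forces the portable layout: one line per filesystem, fixed column order.
    # Line 1 is the header, line 2 the data row; field 5 is the use percentage.
    df -P "${1:-/}" | awk 'NR == 2 { print $5 }'
}

usage_percent /
```

The argument can be any path; df reports on the filesystem that contains it.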

The -h option

The -h (or --human-readable) option makes the output easier to read. Sizes are now shown in human-readable units such as megabytes and gigabytes.

    df -h
    Filesystem      Size  Used Avail Use% Mounted on
    udev            1,9G     0  1,9G   0% /dev
    tmpfs           396M  6,3M  389M   2% /run
    /dev/sda9       174G   25G  141G  15% /
    ...
    /dev/sda1       112G   99G   13G  89% /media/yuriy/5EB893BEB893935F
    /dev/sda6       138G  117G   15G  90% /media/yuriy/2f24...d9075

Size of a specific disk

You can give df the path to the mount point of the disk whose size you want to display (a device name, as in this example, also works):

    df -h /dev/sda9
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/sda9       174G   25G  141G  15% /

Directory sizes on disk (du)

To view the sizes of directories on disk, use the du command.

If you simply run the command without any arguments, it recursively scans your current directory and prints the size of every directory in it (add -a to list individual files as well). Usually du is given the path to the directory you want to analyze.

If you want to see sizes without a line for every nested directory, use the -s (--summarize) option. As with df, we also add the -h (--human-readable) option.

Size of a specific directory:

    du -sh ./Загрузки
    3,4G    ./Загрузки

Sizes of files and directories inside a specific directory:

    du -sh ./Загрузки/*
    140K    ./Загрузки/antergos-17.1-x86_64.iso.torrent
    79M     ./Загрузки/ubuntu-amd64.deb
    49M     ./Загрузки/data.zip
    3,2G    ./Загрузки/Parrot-full-3.5_amd64.iso
    7,1M    ./Загрузки/secret.tgz

How can I calculate the size of a directory?

The -s option means that it won’t list the size for each subdirectory, only the total size.

Actually du's default unit is 512-byte blocks according to POSIX, and kilobytes on Linux (unless the environment variable POSIXLY_CORRECT is set) or with du -k.
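The unit difference is easy to check on a throwaway file. A small sketch, assuming GNU du (other implementations may ignore POSIXLY_CORRECT) and that no block-size environment variable such as BLOCK_SIZE is set:

```shell
f=$(mktemp)
head -c 5000 /dev/urandom > "$f"    # about 5 kB of data

# Explicit 1024-byte units
kb=$(du -k "$f" | cut -f1)

# With POSIXLY_CORRECT set, GNU du falls back to 512-byte blocks
blocks512=$(POSIXLY_CORRECT=1 du "$f" | cut -f1)

echo "1024-byte units: $kb"
echo "512-byte units:  $blocks512"
rm -f "$f"
```

On most filesystems the second number is twice the first.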

If the directory is very big and has lots of subdirectories, it takes a lot of time, almost 1 min. Is that normal? Is there a way to get the size more quickly?

While using a separate package such as ncdu may work well, the same comparison of many folders can be done, to some degree, by just giving du a list of folders to size up. For example to compare top-level directories on your system.

will list in human-readable format the sizes of all the directories, e.g.

    656K    ./rubberband
    2.2M    ./lame
    652K    ./pkg-config

See the man page and the info page for more help:

-b, --bytes is equivalent to --apparent-size --block-size=1
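The difference between apparent size and allocated space shows up most clearly on a tiny file. A minimal sketch, assuming GNU du:

```shell
f=$(mktemp)
printf 'x' > "$f"    # a file holding exactly one byte

# -b is --apparent-size --block-size=1: the length of the data itself
apparent=$(du -b "$f" | cut -f1)

# --block-size=1 alone reports the space allocated on disk, in bytes
allocated=$(du --block-size=1 "$f" | cut -f1)

echo "apparent size: $apparent bytes"
echo "allocated:     $allocated bytes (depends on the filesystem)"
rm -f "$f"
```

The apparent size is 1; the allocated figure is typically one filesystem block, often 4096.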

The -c doesn’t make sense to use together with -s , right? -s only displays the size of the specified directory, that is the total size of the directory.

du -ahd 1 | sort -h gives a better view, with the items sorted.

    $ du -ahd 1 | sort -h
    2.1M    ./jinxing.oxps
    2.1M    ./jx.xps
    3.5M    ./instances_train2014_num10.json
    5.9M    ./realsense
    7.8M    ./html_ppt
    8.5M    ./pytorch-segmentation-toolbox
    24M     ./bpycv
    24M     ./inventory-v4
    26M     ./gittry
    65M     ./inventory
    291M    ./librealsense
    466M    .

    ls -ldh /etc
    drwxr-xr-x 145 root root 12K 2012-06-02 11:44 /etc

-l is for long listing; -d is for displaying dir info, not the content of the dir; -h is for displaying size in human-readable format.

This isn’t correct, the person asking is clearly looking for the footprint of a directory and its contents on disk. @sepp2k’s answer is correct.

The ls -ldh command only shows the size of inode structure of a directory. The metric is a reflection of size of the index table of file names, but not the actual size of the file content within the directory.
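A quick way to see the two numbers side by side is to build a throwaway directory with one known file in it. This is only an illustration, assuming GNU du for the -b flag:

```shell
d=$(mktemp -d)
head -c 100000 /dev/urandom > "$d/data.bin"    # 100000 bytes of content

# Field 5 of ls -ld is the size of the directory entry itself
own_size=$(ls -ld "$d" | awk '{ print $5 }')

# du -sb adds up the apparent size of everything inside as well
content_size=$(du -sb "$d" | cut -f1)

echo "ls -ld reports: $own_size bytes"
echo "du -sb reports: $content_size bytes"
rm -rf "$d"
```

The ls figure stays small no matter how much data the file holds, while the du figure grows with the content.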

    du -hax --max-depth=1 / | grep '3G' | sort -nr

This helps find large directories to then sift through using du -sh ./*

You can use "file-size.sh" from the awk Velour library:

This gives a more accurate count than du. Unpack a tarball on two servers and use "du -s" (with or without --bytes) and you will likely see different totals, but using this technique the totals will match.

The original question asked the size, but did not specify if it was the size on disk or the actual size of data.

I have found that the calculation of 'du' can vary between servers with the same size partition using the same file system. If file system characteristics differ this makes sense, but otherwise I can’t figure out why. The 'ls|awk' answer that Steven Penny gave yields a more consistent answer, but still gave me inconsistent results with very large file lists.

Using ‘find’ gave consistent results for 300,000+ files, even when comparing one server using XFS and another using EXT4. So if you want to know the total bytes of data in all files then I suggest this is a good way to get it:

find /whatever/path -type f -printf "%s\n" | awk '{ total += $1 } END { printf "%.0f\n", total }'
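The find-based total can be wrapped in a small function for reuse. The awk program in this copy of the answer is incomplete, so the summing body below is my assumption of what it did; total_bytes is a made-up name, and -printf is a GNU find feature.

```shell
total_bytes() {
    # %s prints each regular file's size in bytes, one per line;
    # awk adds them up and prints the sum as a plain integer
    find "${1:-.}" -type f -printf '%s\n' \
        | awk '{ total += $1 } END { printf "%.0f\n", total }'
}
```

For example, total_bytes /whatever/path prints a single number: the combined byte count of all regular files under that path.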