how to read file from line x to the end of a file in bash

I would like to know how I can read each line of a CSV file from the second line to the end of the file in a bash script. I know how to read a file in bash:

    while read line
    do
        echo -e "$line\n"
    done < file.csv

But I want to read the file starting from the second line to the end of the file. How can I achieve this?

7 Answers

-n, --lines=N: output the last N lines, instead of the last 10. If the first character of N (the number of bytes or lines) is a '+', print beginning with the Nth item from the start of each file, otherwise, print the last N items in the file.

In English this means that:

`tail -n 100` prints the last 100 lines

`tail -n +100` prints all lines starting from line 100

In `tail -n +2 file.csv`, 2 is the number of the line you wish to start reading from.
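As a minimal sketch of how this fits the loop from the question (the sample CSV and the variable names `csv` and `body` are assumptions made for illustration), `tail -n +2` can feed the `while read` loop directly:

```bash
# Sample CSV created only so the sketch is self-contained (hypothetical data).
csv=$(mktemp)
printf 'name,age\nalice,30\nbob,25\n' > "$csv"

# tail -n +2 prints everything from line 2 onward, so the header is skipped.
# The loop output is captured in a variable so it can be inspected afterwards.
body=$(tail -n +2 "$csv" | while IFS= read -r line; do
    printf '%s\n' "$line"
done)

printf '%s\n' "$body"
rm -f "$csv"
```

Note that the loop sits on the right-hand side of a pipe, so in bash it normally runs in a subshell and variables set inside it are not visible afterwards.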

Since bash is very quick and the rest of the script is in bash anyway, I prefer bash-only solutions. Still, sed is one of the lighter and quicker solutions. It may not beat tail, but `sed -ne //p` is often quicker than grep.

Or else (pure bash).
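The snippet that belonged here did not survive, so the following is only a sketch of a pure-bash approach consistent with the surrounding remarks. The variable name `linesToSkip` comes from the answer; the sample data, the `rows` array, and the temporary file are assumptions.

```bash
# Sample file created only so the sketch is self-contained (hypothetical data).
csv=$(mktemp)
printf 'h1\nh2\nrow1\nrow2\n' > "$csv"

linesToSkip=2
rows=()
{
    # Discard the first $linesToSkip lines; a failed read at end of file is harmless.
    for ((i = linesToSkip; i--; )); do
        read -r _
    done
    # Process whatever remains.
    while IFS= read -r line; do
        rows+=("$line")
    done
} < "$csv"

# rows is still populated here because { ...; } runs in the current shell.
printf '%s\n' "${rows[@]}"
rm -f "$csv"
```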

This works even if linesToSkip == 0 or linesToSkip is greater than the number of lines in file.csv.

Changed `()` to `{}`, as gniourf_gniourf urged me to consider: the first syntax spawns a subshell, while `{}` does not.

Of course, for skipping only one line (as in the original question's title), the loop `for ((i=1;i--;)); do read; done` can simply be replaced by a single `read`.

There are many solutions to this. One of my favorites is:

    (head -2 > /dev/null; whatever_you_want_to_do) < file.txt

You can also use tail to skip the lines you want:

    tail -n +2 file.txt | whatever_you_want_to_do

`head -n2 >/dev/null` is bad usage, because the result depends on how STDIN is opened (block buffering). On my system, the command `seq 1 10 | ( head -n2 >/dev/null ; cat )` gives no output, unlike the file-based variant `seq 1 10 >file && ( head -n2 >/dev/null ; cat )`.

Depending on what you want to do with your lines: if you want to store each selected line in an array, the best choice is definitely the builtin mapfile :

    numberoflinestoskip=1
    mapfile -s $numberoflinestoskip -t linesarray < file

will store each line of file `file`, starting from line 2, in the array `linesarray`.

See `help mapfile` for more info.
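A runnable version of the same idea might look like the sketch below. The sample CSV and the temporary file are assumptions; `mapfile` itself needs bash 4 or later.

```bash
# Sample CSV created only so the sketch is self-contained (hypothetical data).
csv=$(mktemp)
printf 'name,age\nalice,30\nbob,25\n' > "$csv"

numberoflinestoskip=1
# -s N skips the first N lines; -t strips the trailing newline from each element.
mapfile -s "$numberoflinestoskip" -t linesarray < "$csv"

printf 'read %d lines, first is: %s\n' "${#linesarray[@]}" "${linesarray[0]}"
rm -f "$csv"
```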

If you don't want to store each line in an array, well, there are other very good answers.

As F. Hauri suggests in a comment, this is only applicable if you need to store the whole file in memory.

Otherwise, your best bet is:
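The code for this answer is missing here, so what follows is a sketch of a streaming loop that fits the description: one scratch `read` consumes the first line, and the rest is processed line by line. The sample data and the names `header` and `count` are assumptions.

```bash
# Sample CSV created only so the sketch is self-contained (hypothetical data).
csv=$(mktemp)
printf 'name,age\nalice,30\nbob,25\n' > "$csv"

count=0
{
    read -r header          # scratch read: consumes the first line
    while IFS= read -r line; do
        printf '%s\n' "$line"
        count=$((count + 1))
    done
} < "$csv"

# count and header are still set here because { ...; } runs in the current shell.
printf 'processed %d lines after header "%s"\n' "$count" "$header"
rm -f "$csv"
```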

Notice: there's no subshell involved/needed.

I'm not sure that using mapfile is a must! It implies reading the whole file into memory, and it is not simpler even if lines have to be read as arrays: `while read -a lines;do . ;done` is simpler than having to split each array member from mapfile into new arrays.
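To illustrate the `read -a` idea from this comment, here is a small sketch that splits each CSV row into an array while streaming. The sample data and the names `fields` and `names` are assumptions, and plain comma splitting like this does not handle quoted fields that contain commas.

```bash
# Sample CSV created only so the sketch is self-contained (hypothetical data).
csv=$(mktemp)
printf 'name,age\nalice,30\nbob,25\n' > "$csv"

names=()
{
    read -r _                       # skip the header line
    # IFS=, makes read split on commas; -a stores the fields in an array.
    while IFS=, read -r -a fields; do
        names+=("${fields[0]}")
    done
} < "$csv"

printf '%s\n' "${names[@]}"
rm -f "$csv"
```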

    i=1
    while read line
    do
        test $i -eq 1 && ((i=i+1)) && continue
        echo -e "$line\n"
    done < file.csv

I would just get a variable.

    #!/bin/bash
    i=0
    while read line
    do
        # print every line except the first
        if [ "$i" -ne 0 ]; then
            echo -e "$line"
        fi
        # increment the line counter
        i=$((i+1))
    done < "file.csv"

UPDATE: The above checks the $i variable on every line of the CSV. So if you have a very large CSV file with millions of lines, it will eat a significant number of CPU cycles, no good for Mother Nature.

The following one-liner uses sed to delete the very first line of the CSV file and then passes the rest of the file to the while loop.

    sed 1d file.csv | while read d; do echo $d; done

Why doesn't the POSIX API have an end-of-file function?

In the POSIX API, read() returns 0 to indicate that the end-of-file has been reached. Why isn't there a separate function that tells you that read() would return zero -- without requiring you to actually call read() ? Reason for asking: Since you have to call read() in order to discover that it will fail, this makes file reading algorithms more complicated and maybe slightly less efficient since they have to allocate a target buffer that may not be needed. What we might like to do.

The most interesting answer that I have seen (to a similar question): stackoverflow.com/a/5605161/86967. Need to think about whether or not similar logic is applicable to the POSIX API.

The case where the input stream size is an exact multiple of the buffer size is generally rare. Therefore, end-of-stream isn't particularly helpful under typical scenarios. I don't recall exactly, but at the time I asked this question, I may have been working with a case in which the stream was made up of packets/chunks of a very specific size (which was also large enough to be significant).

1 Answer

To gain perspective on why this might be the case, understand that end-of-stream is not inherently a permanent situation. A file's read pointer could be at the end, but if more data is subsequently appended by a write operation, then subsequent reads will succeed.

Example: In Linux, when reading from the console, a new line followed by ^D will cause posix::read() to return zero (indicating "end of file"). However, if the program isn't terminated, the program can continue to read (assuming additional lines are typed).

Since end-of-stream is not a permanent situation, perhaps it makes sense to not even have an is_at_end() function (POSIX does not). Unfortunately, this does put some additional burden on the programmer (and/or a wrapper library) to elegantly and efficiently deal with this complexity.
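The non-permanence of end-of-file can be observed from the shell as well. The sketch below (file contents and variable names are made up for illustration) keeps one descriptor open, reads it to the end, appends to the file, and then reads again from the same descriptor:

```bash
f=$(mktemp)
printf 'first\n' > "$f"

exec 3< "$f"                # open the file for reading on descriptor 3
first=$(cat <&3)            # cat reads until read() reports end of file
printf 'second\n' >> "$f"   # another writer appends more data
second=$(cat <&3)           # the same descriptor now yields the new data
exec 3<&-                   # close the descriptor

printf 'before append: %s\nafter append: %s\n' "$first" "$second"
rm -f "$f"
```

Both `cat` invocations share the file offset of descriptor 3, so the second one picks up exactly where the first one hit end-of-file.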

How to Use Cat EOF on Linux

Knowing how to use the cat command with EOF is a useful skill for displaying the contents of a file. Here’s everything you need to know about this Linux command, with many examples.

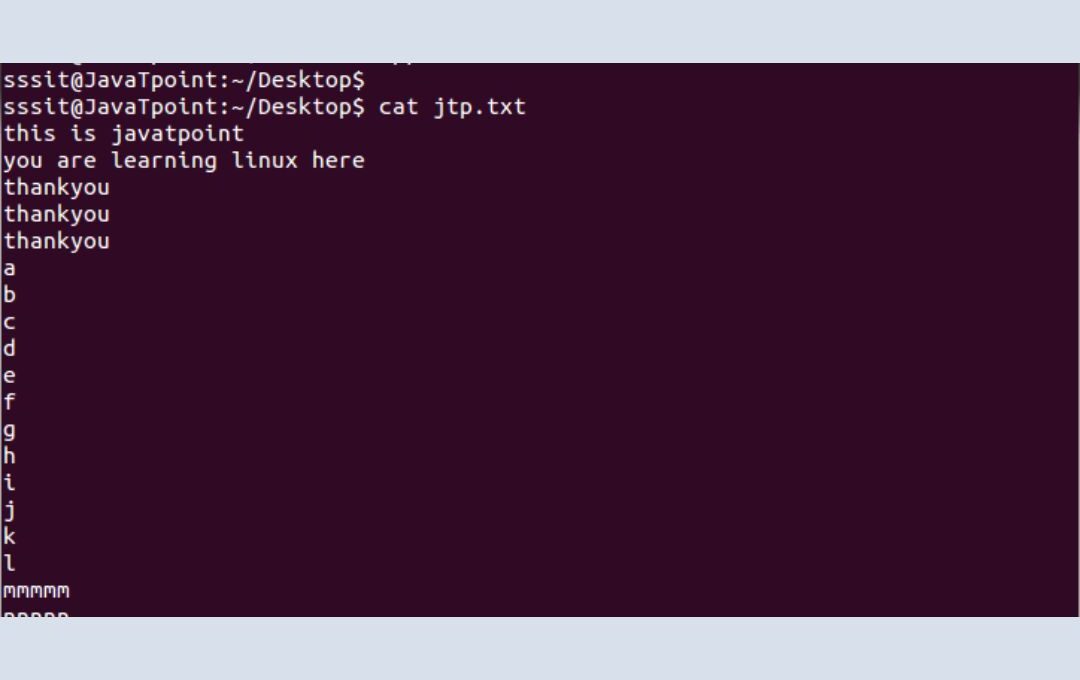

Cat is a widely used utility in Linux that displays the contents of a file. In addition, this command-line utility can concatenate the contents of several files. It can be combined with other commands such as awk, sed, and less. We will discuss how to use cat and EOF to perform a range of useful tasks, such as writing multiline strings.

The Cat Command

The cat command is a Linux utility that writes the contents of the specified files to an output stream. If no redirection is given, it writes to the standard output stream. The following command illustrates writing the content of a file to standard output:
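The command itself is missing at this point. Presumably it was a plain `cat file.txt`; a sketch under that assumption, with a scratch directory and sample content added so it runs on its own:

```bash
workdir=$(mktemp -d)                                  # scratch directory for the demo
printf 'line one\nline two\n' > "$workdir/file.txt"   # sample content (made up)

# With no redirection, cat writes the file to standard output.
cat "$workdir/file.txt"
```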

In the above command, the content of file.txt is output to the standard output stream. To redirect the content to another file, you can use the following command:
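The redirecting command is also missing here. A sketch of what it may have looked like, assuming a target file called `newfile.txt` (a hypothetical name) and the same kind of scratch directory:

```bash
workdir=$(mktemp -d)                                  # scratch directory for the demo
printf 'line one\nline two\n' > "$workdir/file.txt"   # sample content (made up)

# > sends cat's standard output into newfile.txt instead of the terminal.
cat "$workdir/file.txt" > "$workdir/newfile.txt"
```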

End of file (EOF)

EOF stands for end of file. Many programming languages, such as C, Java, and Python, expose an end-of-file condition that tells a program there is no more input to read. In bash, EOF serves a different purpose when used with the cat command: it is a conventional tag that marks the end of a block of input supplied inline in a script or typed at the terminal (called a here document). In the following few sections, we will demonstrate several examples of using EOF. The here document has the following format:
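In general terms, a command is followed by `<<` and a tag, the body follows on the next lines, and the tag alone on a line closes it. As a minimal sketch (the loop and the sample lines are illustrative, not taken from the article), a here document can feed any command that reads standard input, including a `while read` loop:

```bash
# General shape:
#   COMMAND <<TAG
#   ...body lines...
#   TAG
n=0
while IFS= read -r line; do
    n=$((n + 1))
    printf '%d: %s\n' "$n" "$line"
done <<EOF
first line
second line
EOF
```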

The here document is supplied to the command as its standard input. It continues until a line containing only the tag (the delimiter) is encountered.

Rules for the delimiter / TAG

A tag such as EOF can be any string, in upper or lower case. However, upper case is generally used by convention. Most commonly, EOF or STOP is used as the tag. The closing tag must be on a line of its own to be recognized. Finally, it must have no leading or trailing spaces; otherwise, it will be regarded as part of the string.

Examples of How to Use Cat EOF on Linux

We will now see several examples of using the cat command along with EOF. In particular, we will see four examples: writing multiline input to a file, assigning a multiline string to a bash variable, assigning an SQL query to a variable, and piping a multiline string to another command.

Example 1 – Writing multiline strings to a file

The cat command is a handy utility, and when combined with a here document, it can write a multiline string to a file in bash. Type the cat command followed by the here-document operator `<<`. Next, add the tag used to terminate input. Then add the redirection operator `>` and the name of the file to write to:
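The original command is not shown here, so this is a sketch of the pattern just described. The file content and the scratch directory are assumptions made so the example is self-contained.

```bash
workdir=$(mktemp -d)        # scratch directory for the demo

# Everything between the two EOF tags is written to file.txt.
cat <<EOF > "$workdir/file.txt"
Hello
World
EOF

cat "$workdir/file.txt"     # show what was written
```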

In the above command, we have told bash to terminate the input when EOF is encountered. Here, EOF is the tag used to end the input. Note that a new file will be created if the file doesn’t exist.

Example 2 – Assigning a multiline string to a bash variable

The cat command can be used to assign a multiline string to a bash variable as well. The following command sets a multiline string to a variable:
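The command is missing at this point. A sketch of the usual form, with a made-up variable name `text` and made-up content:

```bash
# The here document is fed to cat, and $( ) captures what cat prints.
text=$(cat <<EOF
This is line 1.
This is line 2.
EOF
)

printf '%s\n' "$text"
```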

Here, command substitution, `$()`, captures the output of cat and assigns it to the bash variable.

Example 3 – Assigning the SQL input to a bash variable

Let’s see another example, where we assign a multiline SQL query to a bash variable that can later be passed to psql to execute it. The following command assigns the query to the variable:

$ sql=$(cat

The above SQL command selects all the employees whose id equals 101. It is assigned to the sql variable, which can then be used to execute the query from the terminal.
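Since the command above is cut off, here is a sketch of what such an assignment might look like. The table name `employees` and the column name `id` are assumptions chosen to match the description, not taken from the original command.

```bash
# Table name "employees" and column "id" are assumptions for illustration.
sql=$(cat <<EOF
SELECT *
FROM employees
WHERE id = 101;
EOF
)

printf '%s\n' "$sql"
# The query could then be handed to a client, for example: psql -c "$sql"
```

Because the tag is unquoted, the shell still expands `$variables` inside the body, which is worth keeping in mind when a query contains a dollar sign.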

Example 4 – Piping multiline string

You can also pipe a multiline string to a command. The following example uses cat and EOF features to pipe the multiline string:
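The example command is not present here. The sketch below follows the description around it (grep for `java`, output to `java.txt`); the input lines and the scratch directory are made up.

```bash
workdir=$(mktemp -d)        # scratch directory for the demo

# The here document is cat's input; cat's output is piped to grep,
# and grep's matches are redirected to java.txt.
cat <<EOF | grep 'java' > "$workdir/java.txt"
I like java
I like python
java is everywhere
EOF

cat "$workdir/java.txt"     # show the matching lines
```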

When you run the above command interactively, the shell prompts you for input and stops reading when it encounters the EOF tag. The input is piped to the grep command, which searches for the keyword java and redirects the matching lines to the file java.txt.

Tip: The redirection operator > redirects the output to the file, overwriting existing contents. The >> operator, however, appends the output to the file.
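A quick way to see the difference, using a hypothetical file name `notes.txt` in a scratch directory:

```bash
workdir=$(mktemp -d)
log="$workdir/notes.txt"     # hypothetical file name

echo "first"  > "$log"       # creates the file (or truncates it)
echo "second" > "$log"       # overwrites: only "second" remains
echo "third" >> "$log"       # appends after the existing content

cat "$log"
```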

We discussed how the cat command could be used in combination with EOF to perform a range of practical operations. For instance, we can write multiline strings to a file and pipe multiline strings in bash, all of which you can find in the multiple examples we have highlighted above.


Husain is a staff writer at Distroid and has been writing on all things Linux and cybersecurity for over 10 years. He previously worked as a technical writer for wikiHow. In his spare time, he loves taking tech apart and seeing what makes it tick, without necessarily putting it all back together.
