Linux read log file and filter to get the same type of log messages only once

In my log file I have three types of log messages: info, warning, and error. I want to grab only the error messages, but since there are different types of error messages and the same error message may appear more than once in the log file, I want to grab each type of error only once. What command can I use in the Ubuntu terminal? I have tried:

    grep -E 'level=error' server.log | sort --unique

But this gives me ‘info’ and ‘warning’ messages too. I then used this command but still I get all three types of messages, not just the error messages.

    grep 'error' server.log | uniq -f 1

The argument -f 1 is to skip the timestamp field since it will always be unique. For example, my log messages are:

    . . .
    11-03-2020 11:53:32" level=info msg="Starting up" file="etc/load/startwith.txt"
    11-03-2020 11:53:33" level=info msg="Started" file="etc/load/startwith.txt"
    11-03-2020 11:54:29" level=warning msg="Some fields missing" file="etc/load/startwith.php"
    11-03-2020 11:54:47" level=info msg="Started the process" file="etc/load/startwith.php"
    11-03-2020 11:54:51" level=info msg="Connecting to database" file="etc/db/dbinfo.php"
    11-03-2020 11:54:53" level=error msg="Database connection failed" file="etc/db/dbinfo.php"
    11-03-2020 13:26:22" level=info msg="Started back-up process" file="etc/load/startwith.php"
    11-03-2020 13:26:23" level=info msg="Starting up" file="etc/load/startwith.txt"
    11-03-2020 13:26:26" level=error msg="Start up failed" file="etc/db/startwith.php"
    11-03-2020 13:26:27" level=info msg="Starting up" file="etc/load/startwith.txt"
    11-03-2020 13:26:31" level=error msg="Start up failed" file="etc/db/startwith.php"
    11-03-2020 13:26:32" level=info msg="Starting up" file="etc/load/startwith.txt"
    11-03-2020 13:26:35" level=warning msg="Duplicate fields found" file="etc/load/startwith.php"
    11-03-2020 13:26:36" level=info msg="Started the process" file="etc/load/startwith.php"
    11-03-2020 13:26:37" level=info msg="Connecting to database" file="etc/db/dbinfo.php"
    11-03-2020 13:26:38" level=info msg="Success. Connected to the database" file="etc/db/db-success.php"
    11-03-2020 13:26:38" level=info msg="Inserting data to database" file="etc/db/dboperation.php"
    11-03-2020 13:26:39" level=warning msg="Null fields found" file="etc/db/dboperation.php"
    11-03-2020 13:26:39" level=info msg="Data inserted" file="etc/db/dboperation.php"
    11-03-2020 13:26:39" level=info msg="Disconnected" file="etc/db/dboperation.php"
    11-03-2020 13:26:43" level=info msg="Inserting data to database" file="etc/db/dboperation.php"
    11-03-2020 13:26:43" level=error msg="Required data missing" file="etc/db/dboperation.php"
    11-03-2020 13:26:44" level=info msg="Inserting data to database" file="etc/db/dboperation.php"
    11-03-2020 13:26:44" level=error msg="Required data missing" file="etc/db/dboperation.php"
    . . .

The expected output for Errors from the above logs (3 different types of errors, not total error occurrences) would be:

    11-03-2020 11:54:53" level=error msg="Database connection failed" file="etc/db/dbinfo.php"
    11-03-2020 13:26:31" level=error msg="Start up failed" file="etc/db/startwith.php"
    11-03-2020 13:26:44" level=error msg="Required data missing" file="etc/db/dboperation.php"

So essentially I need to filter the log file to get only the error messages, with one line for each type of error.
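One possible approach, offered as a sketch rather than a definitive answer: uniq only collapses adjacent duplicate lines, and -f 1 skips just the first whitespace-separated field (the date), so the time still makes every line differ. The awk version below instead keys each error line on everything after msg= and keeps the latest occurrence, which matches the expected output above. The function name unique_errors is made up for illustration, and the sketch assumes every error line contains level=error and msg= exactly as in the sample.

```shell
# Hypothetical helper: print one line per distinct error, keeping the latest
# occurrence of each. Splitting on 'msg=' makes $2 the message and file part,
# so the timestamp never takes part in the comparison.
unique_errors() {
    awk -F'msg=' '
        /level=error/ {
            if (!($2 in last)) order[++n] = $2   # remember first-seen order
            last[$2] = $0                        # overwrite with newest line
        }
        END { for (i = 1; i <= n; i++) print last[order[i]] }
    ' "$1"
}

# Usage (server.log is the file name from the question):
# unique_errors server.log
```

If the first occurrence of each error is preferred instead, the shorter idiom grep 'level=error' server.log | awk -F'msg=' '!seen[$2]++' should behave the same way apart from which timestamp is shown.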

How can dmesg content be logged into a file?

I’m running a Linux OS that was built from scratch. I’d like to save the kernel message buffer (dmesg) to a file that will remain persistent between reboots. I’ve tried running syslogd but it just opened a new log file, /var/log/messages, with neither the existing kernel message buffer, nor any new messages the kernel generated after syslogd was launched. How can the kernel message buffer be saved to a persistent log file?

3 Answers

You need to look at either /etc/rsyslog.conf or /etc/syslog.conf . If you have a line early on such as:
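A catch-all rule of that kind would look roughly like the fragment below. This is an assumption based on the classic selector/action syntax that both syslogd and rsyslog accept; the target path is the /var/log/messages file mentioned in the question.

```
# facility.priority    destination
*.*                    /var/log/messages
```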

Everything, including the stuff from dmesg, should go to that file. To target it better:
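A more targeted rule might look like the fragment below. The file name /var/log/kern.log is a hypothetical choice, and the rule only helps if the daemon is actually reading kernel messages (for example through klogd with classic syslogd, or the imklog module with rsyslog).

```
# kernel facility only, any priority
kern.*                 /var/log/kern.log
```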

If that fails for some reason, you could periodically (e.g. via cron):
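A minimal sketch of such a periodic dump follows. The helper name snapshot_dmesg, the output path, and the ten-minute schedule are all assumptions chosen for illustration.

```shell
# Hypothetical helper: overwrite a file with the current kernel ring buffer.
snapshot_dmesg() {
    dmesg > "$1"
}

# Usage (assumed path):
# snapshot_dmesg /var/log/dmesg.snapshot
#
# Equivalent entry in root's crontab, every ten minutes:
# */10 * * * * dmesg > /var/log/dmesg.snapshot
```

Each run replaces the previous snapshot, so the file only ever holds what is still in the ring buffer at that moment.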

Depending on how big the dmesg buffer is (this is compiled into the kernel, or set via the log_buf_len parameter) and how long your system has been up, that will keep a record of the kernel log since it started.

If you want to write the dmesg output continuously to a file, use the -w (--follow) flag.
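As a rough sketch, assuming the util-linux version of dmesg (other implementations, such as the BusyBox one, may not support this flag), following the buffer into a file could look like this. The helper name and the log path are illustrative only.

```shell
# Hypothetical helper: append kernel messages to a file as they arrive.
# dmesg --follow keeps running until interrupted, so it is usually started
# in the background or from a service.
follow_dmesg() {
    dmesg --follow >> "$1"
}

# Usage (assumed path):
# follow_dmesg /var/log/dmesg.log &
```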

+1 It is probably worth mentioning that dmesg uses a ring buffer, so it doesn’t grow without bound, and that the buffer is held within the kernel so that messages can be logged before things like the filesystem are even up.

If you use systemd then you can get all the information from the systemd journal using journalctl -k . syslog and rsyslog are not necessary if you use systemd.
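For example, the kernel messages of the current boot could be saved to a file along these lines. This is a sketch; the helper name and output path are made up for illustration.

```shell
# Hypothetical helper: write the kernel messages of the current boot to a file.
save_kernel_journal() {
    journalctl -k --no-pager > "$1"
}

# Usage (assumed path):
# save_kernel_journal /tmp/kernel.log
#
# Kernel messages from the previous boot, which requires persistent journal
# storage to be enabled:
# journalctl -k -b -1
```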

PopSicle does this. I use the old MS-DOS-style redirect, and the output is redirected into a .csv file that can be opened as a spreadsheet in LibreOffice Calc. In a terminal, try something like this:

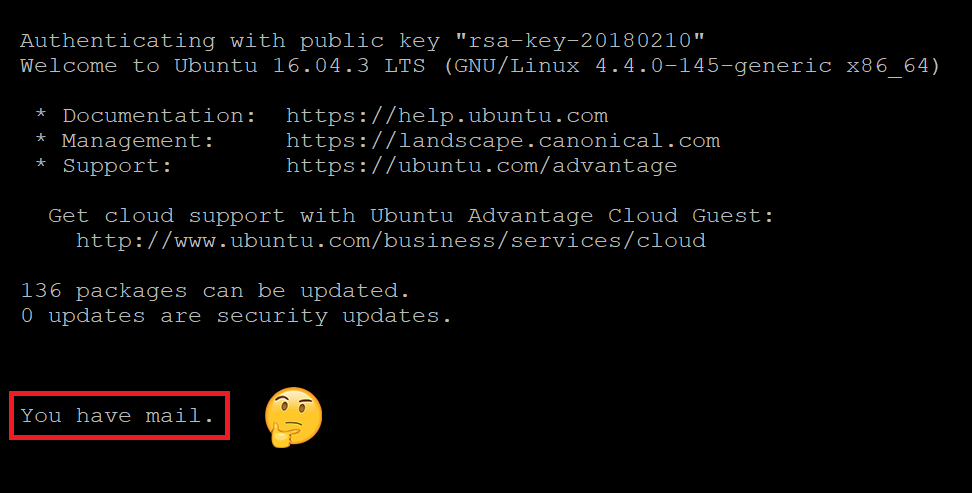

    dmesg > "/path to where you want the file written/File-Name.csv"
    dmesg > /media/joe/Data/Z-Back/Script-Files/Dmesg-Output.csv

Dmesg-to-CSV.sh (the script file):

    #!/bin/bash
    echo "This is a shell script"
    # Write the kernel message buffer to the .csv file first, then report.
    dmesg > /media/joe/Data/Z-Back/Script-Files/Dmesg-Output.csv
    SOMEVAR='I am done running dmesg and redirecting to /media/joe/Data/Z-Back/Script-Files/Dmesg-Output.csv'
    echo "$SOMEVAR"

Dmesg-CSV.desktop (the icon file):

    [Desktop Entry]
    Encoding=UTF-8
    Name=Dmesg-to-CSV.sh
    Comment=Launch DirSyncPro
    Exec=gnome-terminal -e /media/joe/Data/Z-Back/Script-Files/Dmesg-to-CSV.sh
    Icon=utilities-terminal
    Type=Application
    Name[en_US]=Dmesg-CSV.desktop

Both the .sh file and the .desktop file are stored in the same directory as the .csv output file.

“You have mail” – How to Read Mail in Linux Command Line

This message indicates that there is a message in your spool file. Usually the spool file is in a very simple mbox format, so you can open it in a text viewer like less or with the mail program. In most cases, this is not a message from a long lost lover, but instead a system message generated by your Linux mail service.

View Spool File

Use the following command to read the mail for the currently logged in user. The $(whoami) command substitution expands to the name of the currently logged in user.
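A minimal sketch of such a command, assuming the spool lives in the common /var/mail location and that less is the viewer in use:

```shell
# Path of the current user's spool file (assumes the common /var/mail location).
spool="/var/mail/$(whoami)"

# Open it in a pager only if it exists; a missing file just means no local mail.
if [ -f "$spool" ]; then
    less "$spool"
else
    echo "No spool file at $spool"
fi
```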

You can use the ↑ and ↓ arrows on your keyboard to scroll through the spool file.

Press uppercase G to scroll to the bottom of the file and lowercase q to quit.

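If you wish to read another user’s mail, put their user name in the path instead, for example /var/mail/username. You will usually need sudo for this, since spool files are normally not readable by other users.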

Delete Spool File

You can simply delete the /var/mail/username file to delete all emails for a specific user. The $(whoami) command substitution expands to the name of the currently logged in user.
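As a sketch, deleting a spool file could be wrapped like this. The function name delete_spool and its optional directory argument are hypothetical, and /var/mail is assumed as the default location.

```shell
# Hypothetical helper: delete the spool file of a user.
# $1 = user name (defaults to the current user)
# $2 = spool directory (defaults to /var/mail, an assumed location)
delete_spool() {
    user="${1:-$(whoami)}"
    dir="${2:-/var/mail}"
    rm -f -- "$dir/$user"
}

# Usage: delete_spool            # current user
#        delete_spool john       # another user, typically needs root
```

This removes every message at once and cannot be undone, so it is worth reading the spool first.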

Using the mail Program

You can also use the mail program to easily list and view messages in your spool file. If mail is not installed, you can install it with sudo apt install mailutils .

Messages will be listed with a corresponding number:

    mail
    "/var/mail/john": 6 messages 6 new
    >N 1 Mail Delivery Syst Thu Feb 15 21:12 80/2987 Undelivered Mail Returned to Sender
     N 2 Mail Delivery Syst Fri Feb 16 00:09 71/2266 Undelivered Mail Returned to Sender
     N 3 Mail Delivery Syst Fri Feb 16 00:16 71/2266 Undelivered Mail Returned to Sender
     N 4 Mail Delivery Syst Fri Feb 16 00:21 71/2266 Undelivered Mail Returned to Sender
     N 5 Mail Delivery Syst Fri Feb 16 00:22 71/2266 Undelivered Mail Returned to Sender
     N 6 Mail Delivery Syst Fri Feb 16 00:24 75/2668 Undelivered Mail Returned to Sender
    ?

After the ? prompt, enter the number of the mail you want to read and press ENTER .

Press ENTER to scroll through the message line by line and press q and ENTER to return to the message list.

To exit mail , type q at the ? prompt and then press ENTER .

Delete All Messages using mail

To delete all messages using mail, after the ? prompt, type d * and press ENTER .
