- Linux — run multiple parallel commands, print sequential output

- 3 Answers

- How to run multiple commands in parallel and see output from both?

- 6 Answers

- Linux Run Multiple Commands in Parallel

- Methods of Running Multiple Commands in Parallel in Linux Mint 20

- Method #1: Using the Semicolon Operator

- Method #2: Using a Bash Script

- Conclusion

- About the author

- Karim Buzdar

Linux — run multiple parallel commands, print sequential output

I am a bit new to bash, and I need to run a short command several hundred times in parallel but print the output sequentially. The command prints a fairly short output to stdout that I do not want to lose or have garbled/mixed up with the output of another thread. Is there a way in Linux to run several commands (e.g. no more than N threads in parallel) so that all command outputs are printed sequentially (in any order, as long as they don’t overlap)? Current bash script:

    declare -a UPDATE_ERRORS
    UPDATE_ERRORS=( )

    function pull {
        git pull   # Assumes current dir is set
        if [[ $? -ne 0 ]]; then
            UPDATE_ERRORS+=("error message")
        fi
    }

    for f in extensions/*; do
        if [[ -d $f ]]; then
            ########## This code should run in parallel, but output of each thread
            ########## should be cached and printed sequentially one after another
            ########## pull function also updates a global var that will be used later
            pushd $f >/dev/null
            pull
            popd > /dev/null
        fi
    done

    if [[ ${#UPDATE_ERRORS[@]} -ne 0 ]]; then
        # print errors again
        printf '%s\n' "${UPDATE_ERRORS[@]}"
    fi

Thanks, looks promising, but how would I make each thread add an error message to the global array in case of a failure?

Point 1) Add -k to your invocation of GNU Parallel to keep the outputs in order. Point 2) Define a function, and be sure to export it, and pass the function to GNU Parallel to execute — inside the function, append the error message to your array. gnu.org/software/parallel/…

3 Answers

You can use flock for this. I have emulated a similar situation to test it. The do_the_things function generates output that overlaps in time with the output of its other instances. A for loop starts it several times simultaneously. The output would normally get mixed up, but it is fed to the locked_print function, which waits until the lock is free and then prints the received input to stdout. The functions are exported so that child bash processes can call them.

    #!/bin/bash

    do_the_things() {
        rand="$((RANDOM % 10))"
        sleep $rand
        for i in `seq 1 10`; do sleep 1; echo "${rand}-$i"; done
    }

    locked_print() {
        echo Started
        flock -e testlock cat
    }

    export -f do_the_things
    export -f locked_print

    for f in a b c d; do
        (do_the_things | locked_print) &
    done
    wait

@user313294 If there are lots of things to do, say 500, it will just slam them all on to the CPUs to do at once, won’t it? Sometimes that actually slows things up when tasks compete for CPU/network/disk bandwidth. +1 for a nice, tidy solution.

@MarkSetchell I think 499 of them will fill their pipe buffers and will be blocked on io until lock is freed while 1 will pipe its output out.
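The concern about starting hundreds of jobs at once can also be handled without flock. The following is a minimal sketch of a different technique, not the method from the answer above: each job writes to its own temporary file, at most max_jobs run at a time, and the cached outputs are printed one block after another at the end. The job function, the limit of 2 and the job names are hypothetical placeholders, and wait -n assumes bash 4.3 or newer.

```shell
#!/bin/bash
# Hypothetical stand-in for the real command (e.g. a git pull in one directory).
job() {
    echo "start $1"
    sleep 0.2
    echo "end $1"
}

max_jobs=2                 # assumed limit N on simultaneous jobs
tmpdir=$(mktemp -d)        # one output file per job lives here
running=0

for name in a b c d; do
    if (( running >= max_jobs )); then
        wait -n                        # block until any one background job exits
        running=$((running - 1))
    fi
    job "$name" > "$tmpdir/$name.out" 2>&1 &
    running=$((running + 1))
done
wait                                   # wait for the remaining jobs

# Each file holds the complete output of exactly one job, so nothing overlaps.
output=$(cat "$tmpdir"/*.out)
echo "$output"
rm -rf "$tmpdir"
```

The trade-off compared with the flock approach is that nothing is printed until all jobs have finished, but the number of simultaneous processes stays bounded.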

Try something like this. I don’t have/use git so I have done a dummy command to simulate it in my version.

    #!/bin/bash
    declare -a ERRORS
    ERRORS=( )

    function pull {
        cd "$1"
        echo Starting pull in $1
        for i in {1..3}; do echo "$1 Output line $i"; done   # dummy output lines (count is arbitrary)
        sleep 5
        echo "GITERROR: Dummy error in directory $1"
    }
    export -f pull

    for f in extensions/*; do
        if [[ -d $f ]]; then
            ########## This code should run in parallel, but output of each thread
            ########## should be cached and printed sequentially one after another
            ########## pull function also updates a global var that will be used later
            echo $f
        fi
    done | parallel -k pull | tee errors.tmp

    IFS=$'\n' ERRORS=($(grep "^GITERROR:" errors.tmp))
    rm errors.tmp

    for i in "${ERRORS[@]}"; do
        echo $i
    done

You will see that even if there are 4 directories to pull, the entire script will only take 5 seconds, despite executing 4 lots of sleep 5.
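The answer above gathers errors by grepping the jobs' output rather than appending to the array inside pull. The reason is that a parallel job runs in a separate process, so it only changes its own copy of the array. The sketch below illustrates this with plain background jobs instead of GNU Parallel; record_error and the directory names are hypothetical, and mapfile assumes bash 4 or newer.

```shell
#!/bin/bash
declare -a ERRORS=()

# Hypothetical helper: records a failure both in the array and on stdout.
record_error() {
    ERRORS+=("failed in $1")          # only changes the current process's copy
    echo "GITERROR: failed in $1"     # visible to whoever reads our stdout
}

# Run as a background job: the append happens in a child process and is lost.
record_error dir1 > /dev/null &
wait
count_after_background=${#ERRORS[@]}
echo "entries after background job: $count_after_background"

# Collect the error lines from the jobs' output instead.
mapfile -t ERRORS < <(
    {
        for d in dir1 dir2; do
            record_error "$d" &
        done
        wait
    } | grep '^GITERROR:'
)
echo "entries collected from output: ${#ERRORS[@]}"
```

The order of the collected lines depends on which job finishes first, so only the count is predictable here.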

How to run multiple commands in parallel and see output from both?

I want to run lsyncd lsyncd.lua and webpack --progress --color -w, which are both long-running processes. I want to see the output from both, interlaced in my terminal. It doesn’t matter if the results are a bit jumbled, I just like to see that they’re doing what they’re supposed to. Also, I want it to kill both processes when I press Ctrl+C. I’m trying

    parallel ::: 'lsyncd lsyncd.lua' 'webpack --progress --color -w'

which seems to be working, but I can’t see any output even though, when I run those commands individually, they output something.

@DavidFoerster Also, if you’re talking about this question that’s the opposite of what I want to do. Pressing Ctrl+C or closing the terminal should kill the jobs. I don’t want to background them.

6 Answers

GNU Parallel defaults to postponing output until the job is finished. You can instead ask it to print output as soon as there is a full line.

    parallel --lb ::: 'lsyncd lsyncd.lua' 'webpack --progress --color -w'

It avoids half-line mixing of the output:

    parallel -j0 --lb 'echo {};echo -n {};sleep {};echo {}' ::: 1 3 2 4

Spend 20 minutes reading chapter 1+2 of GNU Parallel 2018 (online, printed). Your command line will love you for it.

Will these prepend lines of output with labels allowing you to distinguish output lines from the two commands? If not, is there an option to do so? And will it preserve colors?

@Anomaly --tag/--tagstring/--ctag/--ctagstring will prepend the lines of each job. Colors are really the job of the program: parallel --tag ls --color ::: /bin /usr
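Where GNU Parallel is not available, the labelling idea can be approximated in plain bash. This is only a sketch of the concept, not how --tag is implemented: a hypothetical tag function prefixes every line it reads with a label and a tab, and each command is piped through it. The labels jobA and jobB and the printf commands are placeholders.

```shell
#!/bin/bash
# Hypothetical helper: prefix every input line with a label and a tab.
tag() {
    local label=$1 line
    while IFS= read -r line; do
        printf '%s\t%s\n' "$label" "$line"
    done
}

# Two placeholder commands run concurrently, each with its own label.
(printf 'one\ntwo\n' | tag jobA) &
(printf 'three\n' | tag jobB) &
wait
```

Because each command now writes to a pipe instead of a terminal, many programs switch their colors off unless told otherwise, which is why a flag such as ls --color is passed explicitly in the comment above.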

Using parallel (in the moreutils package):

    parallel -j 2 -- 'lsyncd lsyncd.lua' 'webpack --progress --color -w'

Since the parallel process runs in the foreground, hitting CTRL + C will terminate all the processes running on top of it at once.

- -j : Use to limit the number of jobs that are run at the same time;

- -- : separates the options from the commands.

    % parallel -j 2 -- 'while true; do echo foo; sleep 1; done' 'while true; do echo bar; sleep 1; done'
    bar
    foo
    bar
    foo
    bar
    foo
    ^C
    %

Aha. That parallel seems to be different from the parallel package (GNU?). This one seems to work! The other one just kept saying "parallel: Warning: Input is read from the terminal. Only experts do this on purpose. Press CTRL-D to exit."

& to the rescue. It launches the two processes in parallel.

    lsyncd lsyncd.lua & webpack --progress --color -w

Didn’t read the kill part. A ctrl+C here would only terminate the second one. The process preceding the & runs in the background although it outputs on the stdout .

The shortest way to terminate both processes is: 1. Type Ctrl+C once. It kills the foreground process. 2. Type fg and type Ctrl+C again. It brings the background process to foreground and kills it too.

Oh poop. I want them both to die. Need to keep this simple so it’s easy to start and stop for the linux noob.

@mpen can’t think of an easy way to kill the process in background. Either use the jobs thing in the other answer or run ps -a in the shell, that will tell the pid that you can kill manually.

You have more options. Run the first command and press Ctrl-Z. This puts the command to wait in the background. Now type bg and it will run in background. Now run the second command and press Ctrl-Z again. Type bg again and both programs will run in background.

Now you can type jobs and it will print which commands are running in background. Type fg to put program in foreground again. If you omit the job number it will put the last job in the foreground. When the job is in foreground you can stop it with Ctrl-C. I don’t know how you would stop both with one Ctrl-C.

You can also add & at the end which puts it running in the background immediately without Ctrl-Z and bg . You can still bring it in foreground with fg .
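For stopping both programs with a single Ctrl-C, one possible approach is a small wrapper script that starts the commands in the background, remembers their process IDs, and uses trap to signal them when the script itself is interrupted. The run_both function below is a hypothetical sketch, not something from the answers above. Note that kill sends SIGTERM to the direct child only, so a command that spawns further processes may leave some of them running.

```shell
#!/bin/bash
# Hypothetical wrapper: run every argument as a command in the background
# and stop all of them when this script receives Ctrl+C (SIGINT) or SIGTERM.
run_both() {
    local pids=() cmd
    trap 'kill "${pids[@]}" 2>/dev/null' INT TERM
    for cmd in "$@"; do
        bash -c "$cmd" &
        pids+=("$!")
    done
    wait "${pids[@]}"                 # returns early if a trapped signal arrives
    wait "${pids[@]}" 2>/dev/null     # reap jobs that were just signalled
    trap - INT TERM
}

# With the commands from the question this would be:
#   run_both 'lsyncd lsyncd.lua' 'webpack --progress --color -w'
run_both 'echo first' 'echo second'
```

The output of both commands still appears interleaved in the terminal, since neither is redirected.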

Linux Run Multiple Commands in Parallel

Parallel processing is the concept of running multiple processes simultaneously. This concept is the crux of today’s modern computer systems. In the very same manner, multiple commands can also be made to run in parallel, and today, we will learn how to do this on a Linux Mint 20 system.

Methods of Running Multiple Commands in Parallel in Linux Mint 20

For running multiple commands in parallel in Linux Mint 20, you can make use of either of the following methods:

Method #1: Using the Semicolon Operator

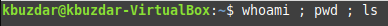

To use the semicolon operator, separate the commands with semicolons in your terminal in the manner shown below:

Here, you can chain as many commands as you want, separated by semicolons. Note that the shell runs semicolon-separated commands one after another, each one starting only when the previous one has finished, so this method issues several commands from a single line rather than running them truly in parallel.

When you press the “Enter” key to execute this statement in your terminal, the output of each command appears in turn, as shown in the following image:

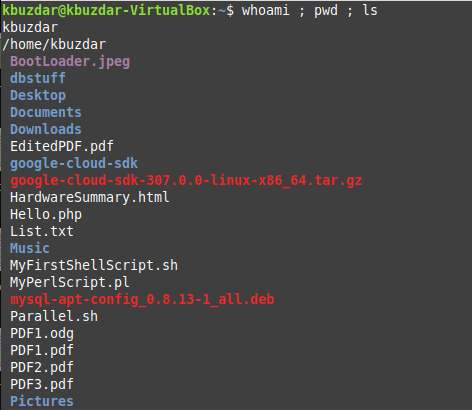
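A quick way to check how the shell schedules commands is to compare the order in which a slow and a fast command finish. In the sketch below, slow and fast are hypothetical helper functions. Separated by a semicolon they run one after another, while with "&" the slow command is started in the background and the fast one finishes first.

```shell
#!/bin/bash
# Hypothetical helpers: one command that takes a second, one that is instant.
slow() { sleep 1; echo "slow done"; }
fast() { echo "fast done"; }

# Semicolon: fast does not start until slow has finished.
sequential_out=$(slow; fast)

# Ampersand: slow runs in the background while fast runs right away.
parallel_out=$(slow & fast; wait)

echo "with ';':"
echo "$sequential_out"
echo "with '&':"
echo "$parallel_out"
```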

Method #2: Using a Bash Script

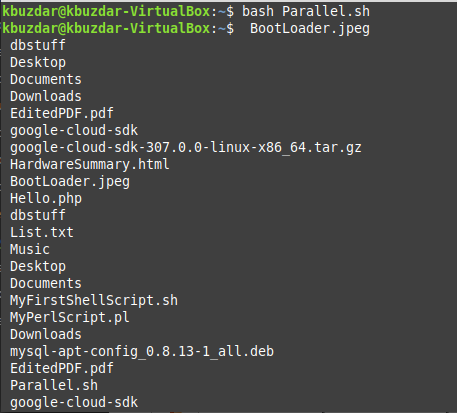

For using a Bash script to run multiple commands in parallel in Linux Mint 20, you will have to create a Bash file, i.e., a file with the “.sh” extension in your Home directory. You can name this file as per your preference. In our case, we have named it “Parallel.sh”. In this file, you need to state all those commands that you want to run in parallel in separate lines followed by the “&” symbol. We wanted to run the “ls” command thrice in parallel, as you can see from the image shown below:

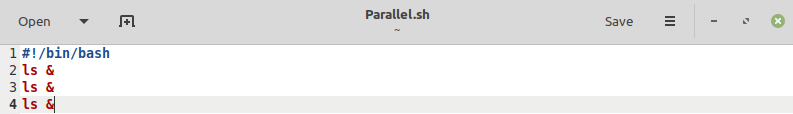
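In text form, a script along the lines described above might look like the following sketch. The three ls lines follow the description in the text. The final wait is an assumption that the article does not mention: it keeps the script from exiting before the background commands have finished.

```shell
#!/bin/bash
# Parallel.sh (sketch): each line ends with "&", so the shell starts the
# command in the background and immediately moves on to the next line.
ls &
ls &
ls &

# Assumption, not stated in the article: block until all three are done.
wait
```

Since the three listings run concurrently, their output may appear interleaved in the terminal.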

After writing this script and saving it, you can execute it in the terminal with the following command:

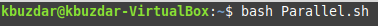

When you hit the “Enter” key to execute this script, you will be able to analyze from the output that the specified commands in your Bash script have run in parallel, as shown in the image below:

Conclusion

In this article, we covered two ways of running multiple commands from a single invocation on a Linux Mint 20 system. The first method was quite simple, as you only had to type all the commands separated by semicolons in your terminal; keep in mind that the shell runs such commands one after another. For the second method, you had to create a Bash script in which each command is followed by “&”, which starts the commands in the background so that they run concurrently.

About the author

Karim Buzdar

Karim Buzdar holds a degree in telecommunication engineering and holds several sysadmin certifications. As an IT engineer and technical author, he writes for various web sites. He blogs at LinuxWays.