LVM Volume Group not found

I had a very similar problem. After a failed installation of a new kernel (mainly because the /boot partition ran out of space), I manually updated the initramfs, and after rebooting, the initramfs no longer prompted me to decrypt the encrypted partition. Instead I got errors such as Volume group "vg0" not found and was dropped to the initramfs prompt, which is similar to a terminal but with limited capabilities.

The fix consisted of two steps: freeing space on /boot by deleting old kernels (step 1) and repairing the system from a live CD session (step 2).

For step 1 I used the recipe in this post to delete old kernels: https://askubuntu.com/a/1391904/1541500. A note on step 1: if you cannot boot into any older kernel (as was the case for me), you might need to perform those steps as part of step 2 (the live CD session), after running the chroot command.
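A minimal sketch for picking the removal candidates, assuming a Debian/Ubuntu system. old_kernel_images is a hypothetical helper (not taken from the linked post): it reads linux-image package names on stdin and prints every one except the kernel you want to keep. Pass that version explicitly, because inside a live CD chroot uname -r reports the live CD's kernel, not the installed one.

```shell
# Hypothetical helper: read linux-image package names on stdin and print
# every package except the one for the kernel release given as $1,
# i.e. the candidates for removal.
old_kernel_images() {
    keep="linux-image-$1"
    while IFS= read -r pkg; do
        [ -n "$pkg" ] || continue                # skip empty lines
        [ "$pkg" = "$keep" ] || printf '%s\n' "$pkg"
    done
}

# Example (review the list before purging anything):
#   dpkg-query -W --showformat='${Package}\n' 'linux-image-[0-9]*' \
#       | old_kernel_images 5.4.0-99-generic
```

dpkg-query can also list packages that were removed but still have configuration files, so treat the output as a list to review, not as input for an unattended purge.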

For step 2 I booted from the live CD and opened a terminal. Then I mounted the system, installed the missing packages and triggered a reinstallation of the latest kernel (which automatically regenerates the initramfs and the GRUB configuration).

Below are the commands I ran in the live CD terminal for step 2 to fix the system.

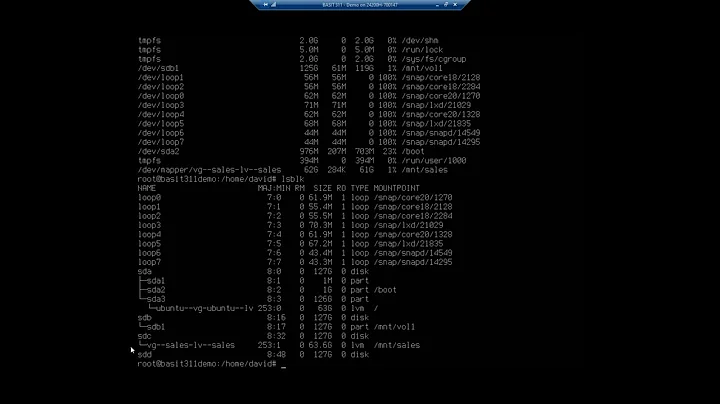

In my case I have the following partitions:

- /dev/sda1 as /boot/efi with fat32

- /dev/sda2 as /boot with ext4

- /dev/sda3 as vg0 with lvm2 -> this is my encrypted partition, containing my Kubuntu installation.

Also important to mention: my encrypted partition is listed as CryptDisk in /etc/crypttab. This name has to be used as the mapping name when opening the partition with cryptsetup luksOpen, so that it matches the crypttab entry. Once decrypted, vg0 contains three logical volumes: root, home and swap.
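Because /etc/crypttab lives inside the encrypted volume in this setup, it can only be read after decrypting and mounting the root LV. A minimal sketch for double-checking the name at that point: crypttab_name is a hypothetical helper that prints the target name crypttab assigns to a given source device, assuming the standard layout of target name, source device, key file and options per line.

```shell
# Hypothetical helper: print the target (mapping) name that the
# /etc/crypttab-style input on stdin assigns to the source device $1
# (e.g. /dev/sda3 or UUID=...). Returns non-zero if there is no match.
crypttab_name() {
    awk -v src="$1" '
        /^[ \t]*#/ || NF < 2 { next }     # skip comments and short/blank lines
        $2 == src { print $1; found = 1; exit }
        END { exit !found }
    '
}

# Example, after mounting the root LV at /mnt (crypttab often uses UUID=...):
#   crypttab_name "UUID=$(sudo blkid -s UUID -o value /dev/sda3)" < /mnt/etc/crypttab
```

If it prints a different name than the one passed to luksOpen, reopening the partition under the crypttab name keeps the two consistent.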

Now, back to the commands to run in the live CD terminal:

    sudo cryptsetup luksOpen /dev/sda3 CryptDisk   # open the encrypted partition
    sudo vgchange -ay                              # activate the logical volumes in vg0
    sudo mount /dev/mapper/vg0-root /mnt           # mount the root partition
    sudo mount /dev/sda2 /mnt/boot                 # mount the boot partition
    sudo mount -t proc proc /mnt/proc
    sudo mount -t sysfs sys /mnt/sys
    sudo mount -o bind /run /mnt/run               # to get resolv.conf for internet access
    sudo mount -o bind /dev /mnt/dev
    sudo chroot /mnt
    # the following commands run inside the chroot, as root
    apt-get update
    apt-get dist-upgrade
    apt-get install lvm2 cryptsetup-initramfs cryptsetup
    apt-get install --reinstall linux-image-5.4.0-99-generic linux-headers-5.4.0-99-generic linux-modules-5.4.0-99-generic linux-tools-5.4.0-99-generic

After a successful reboot, I updated the initramfs of all other kernels with update-initramfs -c -k all.
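Typing the same kernel version into four package names is easy to get wrong. A small sketch that builds the list from a single version string; kernel_packages is a hypothetical helper, and the four package prefixes are simply the ones used in the reinstall command above.

```shell
# Hypothetical helper: print the kernel package names for one version
# string (e.g. 5.4.0-99-generic), one per line.
kernel_packages() {
    version="$1"
    for prefix in linux-image linux-headers linux-modules linux-tools; do
        printf '%s-%s\n' "$prefix" "$version"
    done
}

# Example, inside the chroot (unquoted on purpose, one argument per package):
#   apt-get install --reinstall $(kernel_packages 5.4.0-99-generic)
```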


sebmal

researcher, software dev, open source enthusiast.

Updated on September 18, 2022

Comments

    Begin: Running /scripts/init-premount ... done.
    Begin: Mounting root file system ... Begin: Running /scripts/local-top ...
    Volume group "ubuntu-vg" not found
    Cannot process volume group ubuntu-vg
    Begin: Running /scripts/local-premount ... .
    Begin: Waiting for root file system ... Begin: Running /scripts/local-block ...
    mdadm: No arrays found in config file or automatically
    Volume group "ubuntu-vg" not found
    Cannot process volume group ubuntu-vg
    mdadm: No arrays found in config file or automatically

The system drops to the initramfs shell (BusyBox), where lvm vgscan does not find any volume groups and ls /dev/mapper shows only one entry, control. When I boot the live SystemRescueCD, the volume group is found and the LV is available as usual at /dev/mapper/ubuntu--vg-ubuntu--lv. I am able to mount it, and the VG is set to active. So the VG and the LV look fine, but something seems to break during the boot process.

Ubuntu 20.04 Server, with LVM set up on top of a hardware RAID 1+0 of 4 SSDs. The hardware RAID controller is an HPE Smart Array P408i-p SR Gen10 with firmware version 3.00, and the four HPE SSDs (model MK001920GWXFK) are in a RAID 1+0 configuration. The server model is an HPE ProLiant DL380 Gen10. No software RAID, no encryption.

Any hints on how to find the error and fix the problem?

EDIT I: /proc/partitions looks good:

- /dev/sdc1 is /boot/efi

- /dev/sdc2 is /boot

- /dev/sdc3 is the PV
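Checking this by eye works; to script the same check, here is a minimal sketch. has_partition is a hypothetical helper that succeeds when a device name appears in the name column of /proc/partitions-style input. It relies on awk, which may be missing from a minimal initramfs BusyBox; there, plain cat /proc/partitions remains the fallback.

```shell
# Hypothetical helper: succeed if the device name $1 (e.g. sdc3) appears in
# the "name" column of /proc/partitions-style input on stdin.
has_partition() {
    awk -v name="$1" 'NF == 4 && $4 == name { found = 1 } END { exit !found }'
}

# Example:
#   has_partition sdc3 < /proc/partitions && echo "sdc3 is visible"
```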

Booting from an older kernel version worked once, until I ran apt update && apt upgrade. After the upgrade, the older kernel had the same issue.

In /proc/modules I can find the following entry: smartpqi 81920 0 - Live 0xffffffffc0626000
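To check a driver from a script rather than by reading the file, a minimal sketch: module_state is a hypothetical helper that prints the state column (the fifth field, e.g. Live) for a module in /proc/modules-style input and fails if the module is not listed. Drivers built into the kernel never appear in /proc/modules, so a miss does not always mean the driver is absent.

```shell
# Hypothetical helper: print the state field (e.g. "Live") of the module
# named $1 in /proc/modules-style input on stdin; fail if it is not listed.
module_state() {
    awk -v mod="$1" '$1 == mod { print $5; found = 1 } END { exit !found }'
}

# Example:
#   module_state smartpqi < /proc/modules
```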

No output for lvm pvs in initramfs shell.

Output for lvm pvchange -ay -v

Output for lvm pvchange -ay --partial vg-ubuntu -v

    PARTIAL MODE. Incomplete logical volumes will be processed.
    VG name on command line not found in list of VGs: vg-ubuntu
    Volume group "vg-ubuntu" not found
    Cannot process volume group vg-ubuntu

There is a second RAID controller with HDDs connected to another PCI slot (same model, P408i-p SR Gen10). A volume group named "cinder-volumes" is configured on top of this RAID, but this VG cannot be found in the initramfs either.

Here is a link to the requested files from the root FS:

- /mnt/var/log/apt/term.log

- /mnt/etc/initramfs-tools/initramfs.conf

- /mnt/etc/initramfs-tools/update-initramfs.conf

From the SystemRescueCD I mounted the root LV (/), /boot and /boot/efi and chrooted into the root LV. All the mounted volumes have enough free disk space (less than 32% used).

The output of update-initramfs -u -k 5.4.0.88-generic is:

    update-initramfs: Generating /boot/initrd.img-5.4.0.88-generic
    W: mkconf: MD subsystem is not loaded, thus I cannot scan for arrays.
    W: mdadm: failed to auto-generate temporary mdadm.conf file

The image /boot/initrd.img-5.4.0-88-generic has an updated last modified date.

The problem persists after rebooting. In /boot/grub/grub.cfg, the initrd line of each menu entry points to /initrd.img-5.4.0-XX-generic, where XX differs per entry (88, 86 and 77).
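One way to confirm that every menu entry points at an existing image is to extract the initrd lines and test each path. grub_initrds is a hypothetical helper; the usage assumes, as here, a separate /boot partition, so that the paths in grub.cfg are relative to /boot.

```shell
# Hypothetical helper: print every file named on an "initrd" line of
# grub.cfg-style input on stdin, one path per output line.
grub_initrds() {
    awk '$1 == "initrd" { for (i = 2; i <= NF; i++) print $i }'
}

# Example, with /boot on its own partition:
#   grub_initrds < /boot/grub/grub.cfg | while read -r p; do
#       [ -e "/boot$p" ] || echo "missing: /boot$p"
#   done
```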

In /boot I find several kernel images:

    vmlinuz-5.4.0-88-generic
    vmlinuz-5.4.0-86-generic
    vmlinuz-5.4.0-77-generic

The link /boot/initrd.img points to the latest version /boot/initrd.img-5.4.0-88-generic.
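The vmlinuz-* files are the kernel images, and on Ubuntu each one is normally paired with an initrd.img-* file of the same version. A minimal sketch for spotting gaps: kernels_without_initrd is a hypothetical helper that reads file names (for example from ls /boot) and prints the versions that have a kernel but no initrd.

```shell
# Hypothetical helper: read file names from /boot on stdin and print each
# kernel version that has a vmlinuz-<version> file but no initrd.img-<version>.
kernels_without_initrd() {
    awk '
        /^vmlinuz-/     { kern[substr($0, 9)] = 1 }   # strip "vmlinuz-" (8 chars)
        /^initrd\.img-/ { rd[substr($0, 12)] = 1 }    # strip "initrd.img-" (11 chars)
        END { for (v in kern) if (!(v in rd)) print v }
    '
}

# Example (awk prints in no particular order, hence sort):
#   ls /boot | kernels_without_initrd | sort
```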

Since nothing I tried worked and the effort to rescue the system was too great, I ended up rebuilding the server completely.

When the system goes to initramfs shell, run cat /proc/partitions to verify that the hardware RAID controller has been successfully detected and its logical drive(s) are visible. If the RAID drive that is supposed to contain the LVM PV is not visible, the most likely reason is that the driver for the hardware RAID controller has not been loaded: perhaps the correct module has not been included in initramfs. Try selecting the previous kernel version from the "advanced boot options" GRUB menu: if that works, but the latest kernel fails, then something is wrong with the new kernel's initramfs.
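One way to test the hypothesis that the driver module is missing from the initramfs: list the image contents with lsinitramfs (part of initramfs-tools) and look for the module file. initramfs_has_module is a hypothetical helper; it matches plain .ko files as well as .ko.xz, .ko.zst and .ko.gz, since some kernels ship compressed modules.

```shell
# Hypothetical helper: succeed if a file list on stdin (e.g. from lsinitramfs)
# contains the kernel module $1, plain (.ko) or compressed (.ko.xz/.zst/.gz).
initramfs_has_module() {
    grep -Eq "(^|/)$1\.ko(\.(xz|zst|gz))?\$"
}

# Example:
#   lsinitramfs /boot/initrd.img-5.4.0-88-generic | initramfs_has_module smartpqi \
#       && echo "smartpqi is included"
#   lsinitramfs /boot/initrd.img-5.4.0-88-generic | grep 'sbin/lvm$'   # lvm binary present?
```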

@telcoM thanks for your answer. It seems the hardware RAID controller is detected. Could the error still be caused by a missing module? If so, is there any way to find out which one?

Arch Linux

I have installed Arch with LVM on a MacBook Pro. Since I put /boot inside the LVM, I also had to install GRUB2-efi (the Mac uses EFI).

When I boot, I am able to get to the GRUB2 menu but when I try to start Arch, I get this error:

    No volume groups found
    ERROR: Unable to determine major/minor number of root device '(Arch_Linux-boot)'

And right before the GRUB2 menu appears, an error message flashes so quickly I can only make out the word "failed".

Arch is in an extended partition (/dev/sda3) with root, boot, var, home and swap on their own separate partitions.

In /etc/default/grub (NOT on the CD), I added "lvm2" to GRUB_PRELOAD_MODULES and "root=(Arch_Linux-boot)" to GRUB_CMDLINE_LINUX. Arch_Linux is the volume group and boot is the boot partition. During installation, I set USELVM="yes" (in the first configuration file of the configure system phase) and added the lvm2 hook to the mkinitcpio configuration file (also during installation).
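The lvm2 hook only helps if it runs before the filesystems hook, because the volume group has to be activated before the root file system can be mounted. A minimal sketch for checking the order: lvm2_before_filesystems is a hypothetical helper, and the usage assumes the 2012-era string form HOOKS="..." in /etc/mkinitcpio.conf (newer versions use a Bash array, which plain sh cannot source).

```shell
# Hypothetical helper: succeed if, in the space-separated HOOKS list $1,
# the "lvm2" hook appears before the "filesystems" hook.
lvm2_before_filesystems() {
    lvm_pos=0; fs_pos=0; n=0
    for hook in $1; do                  # unquoted on purpose: split on spaces
        n=$((n + 1))
        case "$hook" in
            lvm2) lvm_pos=$n ;;
            filesystems) fs_pos=$n ;;
        esac
    done
    [ "$lvm_pos" -gt 0 ] && [ "$fs_pos" -gt "$lvm_pos" ]
}

# Example:
#   . /etc/mkinitcpio.conf
#   lvm2_before_filesystems "$HOOKS" || echo "lvm2 hook missing or misplaced"
#   mkinitcpio -p linux    # regenerate the initramfs after fixing HOOKS
```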

Also, when I try to boot OS X (using GRUB2), it seems to start booting (it prints some console output), then gets stuck. If I hold Option at startup and select the OS X partition, I can boot into OS X. The same does not work with Arch.

My guess is that it's not finding the volume group (Arch_Linux) because I got the configuration file wrong or something.

So are the following settings valid, or is something wrong here?

    # Settings I added in /etc/default/grub
    GRUB_CMDLINE_LINUX="root=(Arch_Linux-boot)"
    GRUB_PRELOAD_MODULES="lvm2"
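A hedged sketch of what these two settings would probably look like instead. It rests on two points: GRUB's own LVM module is named lvm (not lvm2), and root= is a kernel parameter that expects a Linux device path for the root volume, not GRUB's (vg-lv) notation and not the boot volume. The LV name root is an assumption about this particular setup.

```shell
# /etc/default/grub (sketch, not tested on this machine)
# GRUB's LVM module is called "lvm".
GRUB_PRELOAD_MODULES="lvm"
# root= must name the ROOT logical volume as a Linux device path;
# "Arch_Linux-root" assumes the root LV is called "root".
GRUB_CMDLINE_LINUX="root=/dev/mapper/Arch_Linux-root"
```

After editing, the menu has to be regenerated with grub-mkconfig -o /boot/grub/grub.cfg (adjust the path to your GRUB installation). grub-mkconfig usually detects the root device and adds root= on its own, so the GRUB_CMDLINE_LINUX line may not be needed at all.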

Last edited by Splooshie123 (2012-04-03 02:35:54)

vgdisplay and lvdisplay:

    No volume groups found

pvcreate /dev/sdb5:

    Device /dev/sdb5 not found (or ignored by filtering).

fdisk -l:

    Disk /dev/sdb: 250.1 GB, 250059350016 bytes
    255 heads, 63 sectors/track, 30401 cylinders, total 488397168 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00050ccb

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1   *        2048      499711      248832   83  Linux
    /dev/sdb2          501758   488396799   243947521    5  Extended
    /dev/sdb5          501760   488396799   243947520   8e  Linux LVM

    Disk /dev/sda: 250.1 GB, 250059350016 bytes
    255 heads, 63 sectors/track, 30401 cylinders, total 488397168 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00050ccb

       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1   *        2048      499711      248832   fd  Linux raid autodetect
    /dev/sda2          501758   488396799   243947521    5  Extended
    /dev/sda5          501760   488396799   243947520   fd  Linux raid autodetect

    Disk /dev/mapper/ubuntu--server-root: 247.7 GB, 247652679680 bytes
    255 heads, 63 sectors/track, 30108 cylinders, total 483696640 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    Disk /dev/mapper/ubuntu--server-root doesn't contain a valid partition table

    Disk /dev/mapper/ubuntu--server-swap_1: 2143 MB, 2143289344 bytes
    255 heads, 63 sectors/track, 260 cylinders, total 4186112 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    Disk /dev/mapper/ubuntu--server-swap_1 doesn't contain a valid partition table

df -h:

    Filesystem                       Size  Used Avail Use% Mounted on
    /dev/mapper/ubuntu--server-root  231G  5,0G  214G   3% /
    udev                             993M   12K  993M   1% /dev
    tmpfs                            401M  356K  401M   1% /run
    none                             5,0M     0  5,0M   0% /run/lock
    none                            1002M     0 1002M   0% /run/shm
    /dev/sdb1                        228M   25M  192M  12% /boot
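In this output, /dev/sdb5 has partition type Id 8e (Linux LVM), while both partitions on /dev/sda have type fd (Linux raid autodetect). For longer listings, a minimal sketch: partitions_of_type is a hypothetical helper that prints the partitions with a given Id from old-style MBR fdisk -l output like the one above. Newer fdisk versions print a different table layout, which it does not handle.

```shell
# Hypothetical helper: print the partitions in old-style (MBR) "fdisk -l"
# output on stdin whose partition-type Id equals $1 (e.g. 8e or fd).
partitions_of_type() {
    awk -v id="$1" '
        /^\/dev\// {
            t = ($2 == "*") ? $6 : $5   # the boot flag adds one column
            if (t == id) print $1
        }
    '
}

# Example:
#   sudo fdisk -l | partitions_of_type 8e
```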