27 Setting Up a KVM VM Host Server

This section documents how to set up and use SUSE Linux Enterprise Server 12 SP4 as a QEMU-KVM based virtual machine host.

In general, the virtual guest system needs the same hardware resources as if it were installed on a physical machine. The more guests you plan to run on the host system, the more hardware resources—CPU, disk, memory, and network—you need to add to the VM Host Server.

27.1 CPU Support for Virtualization

To run KVM, your CPU must support hardware virtualization, and virtualization must be enabled in the BIOS. The file /proc/cpuinfo includes information about your CPU features.

To find out whether your system supports virtualization, see Section 7.3, “KVM Hardware Requirements”.
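On AMD64/Intel 64 hosts, hardware virtualization support appears in the flags line of /proc/cpuinfo as vmx (Intel VT-x) or svm (AMD-V). The following is a minimal sketch of such a check. The function name virt_flag and its optional file argument are illustrative and not part of any SUSE tool. A flag in /proc/cpuinfo indicates CPU support and does not necessarily confirm that virtualization is enabled in the BIOS.

```shell
# Print the hardware virtualization flag found in a cpuinfo-style file:
# "vmx" (Intel VT-x), "svm" (AMD-V), or "none".
# virt_flag is a hypothetical helper name; the file argument defaults to
# /proc/cpuinfo and exists only to make the function easy to try out.
virt_flag() {
    cpuinfo_file="${1:-/proc/cpuinfo}"
    # -o prints only the matched word, -w rejects partial matches such as
    # "svm_lock"; sort -u collapses the per-CPU repetition of the flags line.
    flag=$(grep -E '^flags' "$cpuinfo_file" | grep -Eow 'vmx|svm' | sort -u | head -n 1 || true)
    echo "${flag:-none}"
}

# Usage: virt_flag            # inspects /proc/cpuinfo on the running host
```

A result of none on an AMD64/Intel 64 host suggests that KVM cannot be used there. Other architectures report their CPU features differently and are not covered by this sketch.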

27.2 Required Software

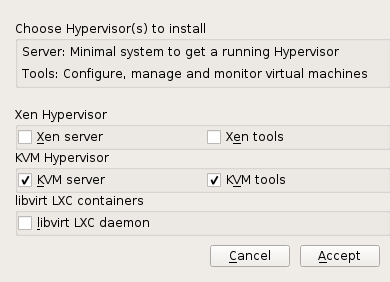

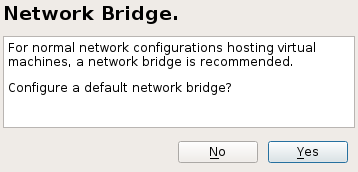

The KVM host requires several packages to be installed. To install all necessary packages, do the following:

- Verify that the yast2-vm package is installed. This package is YaST’s configuration tool that simplifies the installation of virtualization hypervisors.

- Run YaST › Virtualization › Install Hypervisor and Tools.
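Once the installation is complete, a script can confirm that the KVM kernel modules are loaded. The following is a minimal sketch that reads lsmod-style output on standard input and succeeds only if both the core kvm module and a processor-specific module (kvm_intel or kvm_amd) are listed. The function name kvm_modules_loaded is illustrative, and the check assumes an AMD64/Intel 64 host.

```shell
# Succeed (exit status 0) if lsmod-style input lists the core "kvm" module
# and one processor-specific module ("kvm_intel" or "kvm_amd").
# kvm_modules_loaded is a hypothetical helper name.
kvm_modules_loaded() {
    awk '$1 == "kvm"                          { core = 1 }
         $1 == "kvm_intel" || $1 == "kvm_amd" { vendor = 1 }
         END { exit !(core && vendor) }'
}

# Usage: lsmod | kvm_modules_loaded && echo "KVM modules are loaded"
```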

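With the KVM kernel modules loaded, lsmod output on an Intel-based host looks similar to the following:

    tux > lsmod | grep kvm
    kvm_intel              64835  6
    kvm                   411041  1 kvm_intel

27.3 KVM Host-Specific Features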

You can improve the performance of KVM-based VM Guests by letting them fully use specific features of the VM Host Server’s hardware (paravirtualization). This section introduces techniques that let the guests access the physical host’s hardware directly, without the emulation layer, to make the most of it.

Examples included in this section assume basic knowledge of the qemu-system-ARCH command line options. For more information, see Chapter 29, Running Virtual Machines with qemu-system-ARCH.

27.3.1 Using the Host Storage with virtio-scsi

virtio-scsi is an advanced storage stack for KVM. It replaces the former virtio-blk stack for SCSI device pass-through and has several advantages over virtio-blk:

- KVM guests have a limited number of PCI controllers, which limits the number of devices that can be attached. virtio-scsi solves this limitation by grouping multiple storage devices on a single controller. Each device on a virtio-scsi controller is represented as a logical unit, or LUN.

- virtio-blk uses a small set of commands that need to be known to both the virtio-blk driver and the virtual machine monitor, so introducing a new command requires updating both the driver and the monitor. By comparison, virtio-scsi does not define commands, but rather a transport protocol for these commands following the industry-standard SCSI specification. This approach is shared with other technologies, such as Fibre Channel, ATAPI, and USB devices.

- virtio-blk devices are presented inside the guest as /dev/vdX, which is different from device names in physical systems and may cause migration problems. virtio-scsi keeps the device names identical to those on physical systems, making the virtual machines easily relocatable.

- For virtual disks backed by a whole LUN on the host, it is preferable for the guest to send SCSI commands directly to the LUN (pass-through). This is limited in virtio-blk, as guests need to use the virtio-blk protocol instead of SCSI command pass-through, and, moreover, it is not available for Windows guests. virtio-scsi natively removes these limitations.
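To illustrate how several disks share one controller, the following sketch prints qemu-system-ARCH options that define a single virtio-scsi controller and attach each given disk image to it as a separate LUN. It only prints the options and does not start a guest. The function name virtio_scsi_args, the IDs scsi0 and driveN, and the raw image format are assumptions made for the example.

```shell
# Print QEMU options that attach every given disk image as its own LUN on
# one virtio-scsi controller. Nothing is executed; the options are only
# printed, one per line.
# Assumptions: virtio_scsi_args is a hypothetical helper name, the images
# are raw files, and their paths contain no whitespace or commas.
virtio_scsi_args() {
    # One controller for all disks; its SCSI bus is addressed as scsi0.0.
    printf '%s\n' "-device virtio-scsi-pci,id=scsi0"
    lun=0
    for image in "$@"; do
        # Back-end: the image file, not yet bound to any guest device.
        printf '%s\n' "-drive file=$image,if=none,id=drive$lun,format=raw"
        # Front-end: a SCSI disk on the shared controller, one LUN per image.
        printf '%s\n' "-device scsi-hd,drive=drive$lun,bus=scsi0.0,scsi-id=0,lun=$lun"
        lun=$((lun + 1))
    done
}

# Usage: virtio_scsi_args /path/to/first.raw /path/to/second.raw
```

The printed options can be appended to an existing qemu-system-ARCH command line. Inside a Linux guest, disks attached this way are expected to appear with SCSI-style names rather than as /dev/vdX.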

30 Setting Up a KVM VM Host Server

This section documents how to set up and use SUSE Linux Enterprise Server 15 SP2 as a QEMU-KVM based virtual machine host.

In general, the virtual guest system needs the same hardware resources as if it were installed on a physical machine. The more guests you plan to run on the host system, the more hardware resources—CPU, disk, memory, and network—you need to add to the VM Host Server.

30.1 CPU Support for Virtualization

To run KVM, your CPU must support hardware virtualization, and virtualization must be enabled in the BIOS. The file /proc/cpuinfo includes information about your CPU features.

To find out whether your system supports virtualization, see Section 6.3, “KVM Hardware Requirements”.

30.2 Required Software

The KVM host requires several packages to be installed. To install all necessary packages, do the following:

- Verify that the yast2-vm package is installed. This package is YaST’s configuration tool that simplifies the installation of virtualization hypervisors.

- Run YaST › Virtualization › Install Hypervisor and Tools.

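With the KVM kernel modules loaded, lsmod output on an Intel-based host looks similar to the following:

    > lsmod | grep kvm
    kvm_intel              64835  6
    kvm                   411041  1 kvm_intel

30.3 KVM Host-Specific Features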

You can improve the performance of KVM-based VM Guests by letting them fully use specific features of the VM Host Server’s hardware (paravirtualization). This section introduces techniques that let the guests access the physical host’s hardware directly, without the emulation layer, to make the most of it.

Examples included in this section assume basic knowledge of the qemu-system-ARCH command line options. For more information, see Chapter 32, Running Virtual Machines with qemu-system-ARCH.

30.3.1 Using the Host Storage with virtio-scsi

virtio-scsi is an advanced storage stack for KVM. It replaces the former virtio-blk stack for SCSI device pass-through and has several advantages over virtio-blk:

- KVM guests have a limited number of PCI controllers, which limits the number of devices that can be attached. virtio-scsi solves this limitation by grouping multiple storage devices on a single controller. Each device on a virtio-scsi controller is represented as a logical unit, or LUN.

- virtio-blk uses a small set of commands that need to be known to both the virtio-blk driver and the virtual machine monitor, so introducing a new command requires updating both the driver and the monitor. By comparison, virtio-scsi does not define commands, but rather a transport protocol for these commands following the industry-standard SCSI specification. This approach is shared with other technologies, such as Fibre Channel, ATAPI, and USB devices.

- virtio-blk devices are presented inside the guest as /dev/vdX, which is different from device names in physical systems and may cause migration problems. virtio-scsi keeps the device names identical to those on physical systems, making the virtual machines easily relocatable.

- For virtual disks backed by a whole LUN on the host, it is preferable for the guest to send SCSI commands directly to the LUN (pass-through). This is limited in virtio-blk, as guests need to use the virtio-blk protocol instead of SCSI command pass-through, and, moreover, it is not available for Windows guests. virtio-scsi natively removes these limitations.
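The naming difference matters for anything inside the guest that refers to disks by device name. As a rough sketch, the following function classifies a guest block device name by its prefix: vd for virtio-blk, and sd for the SCSI-style names used by virtio-scsi and by physical systems. The function name guest_disk_stack and the labels it prints are illustrative, and a prefix alone cannot distinguish a virtio-scsi disk from any other SCSI-style disk.

```shell
# Classify a guest block device name by its naming scheme.
# guest_disk_stack and the printed labels are hypothetical; the mapping is a
# naming heuristic, not a query of the actual driver in use.
guest_disk_stack() {
    case "${1#/dev/}" in            # drop a leading /dev/ if present
        vd[a-z]*) echo "virtio-blk" ;;
        sd[a-z]*) echo "scsi" ;;    # virtio-scsi or any other SCSI-style disk
        *)        echo "unknown" ;;
    esac
}

# Usage: guest_disk_stack /dev/vda
```

A guest configuration that hard-codes /dev/vdX names would need adjusting when its disks move to virtio-scsi or to physical hardware, which is the migration problem described above.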

30 Setting Up a KVM VM Host Server

This section documents how to set up and use SUSE Linux Enterprise Server 15 SP1 as a QEMU-KVM based virtual machine host.

In general, the virtual guest system needs the same hardware resources as if it were installed on a physical machine. The more guests you plan to run on the host system, the more hardware resources—CPU, disk, memory, and network—you need to add to the VM Host Server.

30.1 CPU Support for Virtualization

To run KVM, your CPU must support hardware virtualization, and virtualization must be enabled in the BIOS. The file /proc/cpuinfo includes information about your CPU features.

To find out whether your system supports virtualization, see Section 7.3, “KVM Hardware Requirements”.

30.2 Required Software

The KVM host requires several packages to be installed. To install all necessary packages, do the following:

- Verify that the yast2-vm package is installed. This package is YaST’s configuration tool that simplifies the installation of virtualization hypervisors.

- Run YaST › Virtualization › Install Hypervisor and Tools.

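With the KVM kernel modules loaded, lsmod output on an Intel-based host looks similar to the following:

    > lsmod | grep kvm
    kvm_intel              64835  6
    kvm                   411041  1 kvm_intel

30.3 KVM Host-Specific Features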

You can improve the performance of KVM-based VM Guests by letting them fully use specific features of the VM Host Server’s hardware (paravirtualization). This section introduces techniques that let the guests access the physical host’s hardware directly, without the emulation layer, to make the most of it.

Examples included in this section assume basic knowledge of the qemu-system-ARCH command line options. For more information, see Chapter 32, Running Virtual Machines with qemu-system-ARCH.

30.3.1 Using the Host Storage with virtio-scsi

virtio-scsi is an advanced storage stack for KVM. It replaces the former virtio-blk stack for SCSI device pass-through and has several advantages over virtio-blk:

- KVM guests have a limited number of PCI controllers, which limits the number of devices that can be attached. virtio-scsi solves this limitation by grouping multiple storage devices on a single controller. Each device on a virtio-scsi controller is represented as a logical unit, or LUN.

- virtio-blk uses a small set of commands that need to be known to both the virtio-blk driver and the virtual machine monitor, so introducing a new command requires updating both the driver and the monitor. By comparison, virtio-scsi does not define commands, but rather a transport protocol for these commands following the industry-standard SCSI specification. This approach is shared with other technologies, such as Fibre Channel, ATAPI, and USB devices.

- virtio-blk devices are presented inside the guest as /dev/vdX, which is different from device names in physical systems and may cause migration problems. virtio-scsi keeps the device names identical to those on physical systems, making the virtual machines easily relocatable.

- For virtual disks backed by a whole LUN on the host, it is preferable for the guest to send SCSI commands directly to the LUN (pass-through). This is limited in virtio-blk, as guests need to use the virtio-blk protocol instead of SCSI command pass-through, and, moreover, it is not available for Windows guests. virtio-scsi natively removes these limitations.

3 Introduction to KVM Virtualization

KVM is a full virtualization solution for the AMD64/Intel 64 and the IBM Z architectures supporting hardware virtualization.

VM Guests (virtual machines), virtual storage, and virtual networks can be managed with QEMU tools directly, or with the libvirt-based stack. The QEMU tools include qemu-system-ARCH, the QEMU monitor, qemu-img, and qemu-nbd. A libvirt-based stack includes libvirt itself, along with libvirt-based applications such as virsh, virt-manager, virt-install, and virt-viewer.

3.2 KVM Virtualization Architecture

This full virtualization solution consists of two main components:

- A set of kernel modules (kvm.ko, kvm-intel.ko, and kvm-amd.ko) that provides the core virtualization infrastructure and processor-specific drivers.

- A user space program (qemu-system-ARCH) that provides emulation for virtual devices and control mechanisms to manage VM Guests (virtual machines).
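Both components can be looked for on a running host. The following is a minimal sketch: it checks for the /dev/kvm character device, which the loaded kvm module exposes to user space, and for a QEMU binary in the search path. The function name kvm_components and its two optional arguments are illustrative, and the default binary name qemu-system-x86_64 assumes an AMD64/Intel 64 host.

```shell
# Report whether the kernel and user space components of KVM appear to be
# present. Returns 0 if both are found, 1 otherwise.
# kvm_components is a hypothetical helper; both arguments are optional and
# exist mainly so the function can be tried against other paths.
kvm_components() {
    kvm_dev="${1:-/dev/kvm}"
    qemu_bin="${2:-qemu-system-x86_64}"
    status=0
    # Kernel side: the kvm module provides a character device node.
    if [ -c "$kvm_dev" ]; then
        echo "kernel: $kvm_dev present"
    else
        echo "kernel: $kvm_dev missing"
        status=1
    fi
    # User space side: the QEMU system emulator must be in the search path.
    if command -v "$qemu_bin" >/dev/null 2>&1; then
        echo "user space: $qemu_bin found"
    else
        echo "user space: $qemu_bin not found"
        status=1
    fi
    return "$status"
}

# Usage: kvm_components
```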

The term KVM more properly refers to the kernel-level virtualization functionality, but in practice it is more commonly used to refer to the user space component.

QEMU can provide certain Hyper-V hypercalls for Windows* guests to partly emulate a Hyper-V environment. This can be used to achieve better behavior for Windows* guests that are Hyper-V enabled.