- Different CPU Times: Unix/Linux ‘top’

- User CPU Time and System CPU Time

- Nice CPU Time

- Idle CPU Time

- Waiting CPU Time

- Software Interrupt CPU Time

- Hardware Interrupt CPU Time

- Stolen CPU Time

- How to find all these CPU Times?

- What specifically are wall-clock-time, user-cpu-time, and system-cpu-time in Unix?

- How are CPU time and CPU usage the same?

Different CPU Times: Unix/Linux ‘top’

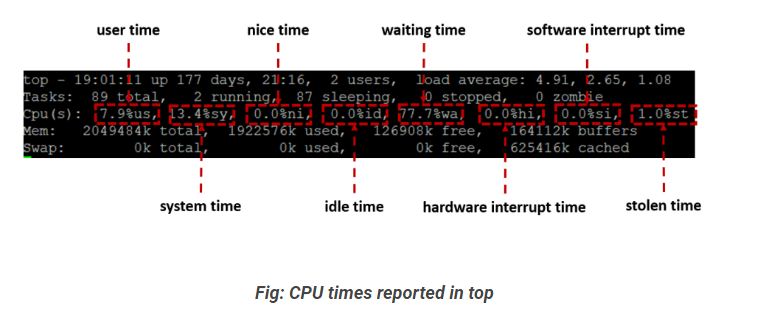

CPU consumption in Unix/Linux operating systems is broken down into eight different metrics: User CPU time, System CPU time, Nice CPU time, Idle CPU time, Waiting CPU time, Hardware Interrupt CPU time, Software Interrupt CPU time, and Stolen CPU time. Let’s review each of these CPU times in this article.

User CPU Time and System CPU Time

In order to understand ‘user’ CPU time, one should understand ‘system’ CPU time as well, since they go hand in hand. User CPU time is the amount of time the processor spends running your application code. System CPU time is the amount of time the processor spends running operating system (i.e., kernel) functions on behalf of your application. Let’s say your application is manipulating the elements in an array; this will be accounted as ‘user’ CPU time. Now let’s say your application is making network calls to external applications. To make network calls, it has to read/write data into socket buffers, which are managed by operating system code. This will be accounted as ‘system’ CPU time. To learn how to resolve high ‘user’ CPU time, refer to this article. To learn how to resolve high ‘system’ CPU time, refer to this article.
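The distinction can be observed with a minimal Python sketch. It assumes a Unix system, because the `resource` module is Unix-only. `busy_user_code` manipulates list elements inside the process, so its time should show up as user time. `busy_system_calls` repeatedly enters the kernel through `os.write()` to `/dev/null`, so it accumulates noticeable system time. The helper names and loop sizes are illustrative assumptions, and exact numbers vary by machine and by kernel accounting granularity.

```python
import os
import resource

# Sketch only: helper names and loop sizes are illustrative assumptions.

def cpu_times() -> tuple[float, float]:
    """Return (user, system) CPU seconds consumed so far by this process."""
    usage = resource.getrusage(resource.RUSAGE_SELF)
    return usage.ru_utime, usage.ru_stime

def measure(workload) -> tuple[float, float]:
    """Run `workload` and return the (user, system) CPU seconds it added."""
    user0, sys0 = cpu_times()
    workload()
    user1, sys1 = cpu_times()
    return user1 - user0, sys1 - sys0

def busy_user_code() -> None:
    # Pure in-process work (manipulating array elements) -> 'user' time.
    data = list(range(200_000))
    for _ in range(20):
        data = [x * 2 % 1_000_003 for x in data]

def busy_system_calls() -> None:
    # Each os.write() enters the kernel, so this loop accumulates 'system'
    # time (the Python loop itself still adds some 'user' time).
    fd = os.open(os.devnull, os.O_WRONLY)
    try:
        for _ in range(200_000):
            os.write(fd, b"x")
    finally:
        os.close(fd)

if __name__ == "__main__":
    for name, fn in [("array work", busy_user_code), ("system calls", busy_system_calls)]:
        user, system = measure(fn)
        print(f"{name:12s} user={user:.3f}s system={system:.3f}s")
```

Running it typically shows the array loop dominated by user time, while the write loop carries a much larger system share.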

Nice CPU Time

To understand ‘nice’ CPU time, one must first understand ‘nice’. When there is CPU contention (i.e., multiple processes contend for CPU cycles), processes with higher priority are given more chances to run. In Unix/Linux operating systems, processes are launched with a nice value of 0 by default. However, using the ‘nice’ command, a process can be launched with a different priority, as shown below. Any user can lower a process’s priority, but only super users (like ‘root’) can raise it (i.e., set a negative nice value):

nice -n 15 java -jar buggApp.jar

Priority can range from -20 to 19: -20 is the highest priority with which a process can be launched, and 19 is the lowest. For more options on how to launch a process with a nice priority, or how to change the priority of a running process using the ‘renice’ command, refer to this article.
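The effect of `nice -n 15 ...` can be sketched from Python on Unix. `os.nice(increment)` adds to the calling process’s nice value and returns the new value, and calling it in `preexec_fn` applies it to the child just before the command starts. The helper `run_niced` and the child command are hypothetical illustrations. Raising niceness (lowering priority) needs no privileges, whereas a negative increment would typically require root.

```python
import os
import subprocess
import sys

def run_niced(increment: int) -> int:
    """Hypothetical helper: start a child Python process with its nice value
    raised by `increment` (similar in spirit to `nice -n <increment> cmd`)
    and return the niceness the child reports."""
    child = subprocess.run(
        [sys.executable, "-c", "import os; print(os.nice(0))"],
        preexec_fn=lambda: os.nice(increment),  # runs in the child before exec
        capture_output=True,
        text=True,
        check=True,
    )
    return int(child.stdout.strip())

if __name__ == "__main__":
    parent = os.nice(0)  # an increment of 0 leaves niceness unchanged and returns it
    print(f"parent nice={parent}, child nice={run_niced(15)}")
```

The kernel caps nice values at 19, so a parent that is already niced cannot push its child past that limit.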

‘Nice CPU time’ is the amount of time the CPU spends running low-priority processes (those whose nice values are greater than 0) in user mode. To learn how to resolve high ‘nice’ CPU time, refer to this article.

Idle CPU Time

Idle CPU time is the amount of time the CPU was not busy. Idle time is typically used to measure the unused CPU capacity.

Many operating systems run a special task called the ‘idle task’ on the CPU when there is nothing else for the CPU to do. This idle task runs at the lowest possible priority. An advantage of this approach is that monitoring applications can keep track of the idle task along with other tasks. To learn more about ‘idle’ CPU time, refer to this article.

Waiting CPU Time

Waiting CPU time indicates the amount of time the CPU sits idle waiting for I/O operations (mainly disk/storage I/O) to complete. High waiting time indicates that the CPU is *stranded* because of the I/O operations on that device. For optimal performance, one should aim to keep the I/O waiting CPU time as low as possible. If the waiting time is above 10%, it is worth investigating.

You can visualize I/O waiting time through this analogy. Say hundreds of cars/bikes are waiting on a busy road for the traffic light to switch from ‘red’ to ‘green’. If, due to some technical glitch, the light takes a long time to switch, those hundreds of cars/bikes get stranded unnecessarily. This results in several undesirable side effects: passengers reach their destinations late, drivers get frustrated and start honking (noise pollution), and since the engines keep running, fuel is wasted (air pollution). To learn how to resolve high ‘waiting’ CPU time, refer to this article.

Software Interrupt CPU Time

To understand the ‘software interrupt time’, one must understand ‘software interrupts’ first. Software interrupts can be triggered because of the following events:

a. When software programs run into an exceptional condition, such as a divide by zero or an access to unavailable memory

b. When a special instruction in the application code causes an interrupt as the processor executes it

c. Some hardware interrupts might take a long time to complete. In such scenarios, part of the hardware interrupt’s work can be deferred to execute at a later point in time through software interrupts. Software interrupts can be scheduled to run independently, on any CPU in the device, or even concurrently. Hardware interrupt handlers don’t have this flexibility.

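The amount of time the CPU spends processing these software interrupts is called ‘software interrupt time’. On Linux, the ‘si’ value reported by ‘top’ specifically measures time spent servicing softirqs, i.e., the deferred work described in (c). To learn how to resolve high ‘software interrupt’ CPU time, refer to this article.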

Hardware Interrupt CPU Time

To understand ‘hardware interrupt time’, one must understand ‘hardware interrupts’ first. A hardware interrupt is an electronic alerting signal sent to the processor from an external device (like disk controllers, network cards, keyboard controllers, external timers, hardware sensors, etc.). For example, pressing a key on the keyboard or moving the mouse triggers ‘hardware interrupts’, which cause the processor to read the keystroke or mouse position.

The amount of time the CPU spends in processing these hardware interrupt signals is called ‘hardware interrupt time’. To learn how to resolve high ‘hardware interrupt’ CPU time, refer to this article.
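On Linux, per-CPU interrupt counts are exposed in `/proc/softirqs` (by softirq type) and `/proc/interrupts` (by IRQ line). These files count events, not CPU time, but they can help identify which source is behind high ‘si’ or ‘hi’ time. Below is a minimal parser sketch. It assumes the usual layout: a header row of CPU names, followed by `LABEL: count count ...` rows, where any trailing description text in `/proc/interrupts` is ignored. The function name `interrupt_totals` is hypothetical.

```python
from pathlib import Path

def interrupt_totals(text: str) -> dict[str, int]:
    """Hypothetical helper: sum the per-CPU counters of a /proc/softirqs- or
    /proc/interrupts-style table.

    The first line lists the CPU columns (CPU0 CPU1 ...). Each following row is
    'LABEL: n0 n1 ...', optionally followed by a description (as in
    /proc/interrupts); only the leading numeric columns are summed.
    """
    lines = text.splitlines()
    n_cpus = len(lines[0].split())
    totals: dict[str, int] = {}
    for row in lines[1:]:
        label, sep, rest = row.partition(":")
        if not sep:
            continue  # not a counter row
        counts = []
        for token in rest.split()[:n_cpus]:
            if not token.isdigit():
                break  # reached the description columns
            counts.append(int(token))
        totals[label.strip()] = sum(counts)
    return totals

if __name__ == "__main__":
    for path in ("/proc/softirqs", "/proc/interrupts"):
        if Path(path).exists():
            busiest = sorted(interrupt_totals(Path(path).read_text()).items(),
                             key=lambda kv: kv[1], reverse=True)[:5]
            print(path, busiest)
```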

Stolen CPU Time

‘Steal’ time (also known as ‘stolen’ time) is relevant only in virtualized environments, such as cloud environments (like AWS) or VMware environments, where multiple virtual machines run on one underlying physical host. In such circumstances, CPU resources are shared among the virtual machines. The hypervisor is the software layer that distributes the underlying physical host’s CPU and other resources among the virtual machines.

Steal time (or stolen time) is the percentage of time a virtual CPU waits for a real CPU while the hypervisor is servicing other virtual machines. If steal time is high on a particular virtual machine, it indicates that the virtual machine is running on an overloaded physical host. Companies like Netflix monitor stolen CPU time closely: if it goes beyond a threshold, the virtual machine is shut down on that physical host and relaunched on another one. To learn how to resolve high stolen CPU time, refer to this article.

How to find all these CPU Times?

All these CPU times can be found using the following sources:

a. Web-based root cause analysis tools can report all these CPU times and generate alerts when a CPU time goes beyond a threshold.

b. All these CPU times are also reported on the ‘%Cpu(s)’ line of the Unix/Linux command-line tool ‘top’, abbreviated as us (user), sy (system), ni (nice), id (idle), wa (waiting), hi (hardware interrupt), si (software interrupt), and st (stolen), as highlighted in the below image.
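On Linux, `top` derives these percentages from the cumulative tick counters on the `cpu` line of `/proc/stat`. The first eight fields of that line are user, nice, system, idle, iowait, irq, softirq, and steal. A minimal sketch, assuming Linux, takes two samples and converts the per-field deltas into percentages. The function names and the 10% iowait alert (the rule of thumb from the ‘Waiting CPU time’ section) are illustrative assumptions, not how any particular monitoring tool is implemented.

```python
import time
from pathlib import Path

# Order of the first eight counters on the aggregate 'cpu' line of /proc/stat.
FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")

def parse_cpu_line(line: str) -> dict[str, int]:
    """Parse 'cpu  u n s i w irq sirq st ...' into a dict of tick counters."""
    parts = line.split()
    if parts[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    return dict(zip(FIELDS, map(int, parts[1:1 + len(FIELDS)])))

def cpu_percentages(before: dict[str, int], after: dict[str, int]) -> dict[str, float]:
    """Turn two counter samples into the share of elapsed ticks spent in each state."""
    deltas = {k: after[k] - before[k] for k in FIELDS}
    total = sum(deltas.values()) or 1  # avoid dividing by zero on identical samples
    return {k: 100.0 * v / total for k, v in deltas.items()}

def read_cpu_line() -> str:
    return Path("/proc/stat").read_text().splitlines()[0]

if __name__ == "__main__":
    first = parse_cpu_line(read_cpu_line())
    time.sleep(1.0)
    pcts = cpu_percentages(first, parse_cpu_line(read_cpu_line()))
    print("  ".join(f"{k}={v:.1f}%" for k, v in pcts.items()))
    if pcts["iowait"] > 10.0:  # illustrative threshold taken from the text
        print("waiting CPU time above 10%: worth investigating")
```

Working from deltas between two samples is what makes these values "recent" percentages. The raw counters in `/proc/stat` accumulate from boot.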

Opinions expressed by DZone contributors are their own.

What specifically are wall-clock-time, user-cpu-time, and system-cpu-time in Unix?

I can take a guess based on the names, but what specifically are wall-clock-time, user-cpu-time, and system-cpu-time in Unix? Is user-cpu time the amount of time spent executing user code, and kernel-cpu time the amount of time spent in the kernel due to the need for privileged operations (like I/O to disk)? What unit of time is this measurement in? And is wall-clock time really the number of seconds the process has spent on the CPU, or is the name just misleading?

Wall-clock time is the time that a clock on the wall (or a stopwatch in hand) would measure as having elapsed between the start of the process and ‘now’.

The user-cpu time and system-cpu time are pretty much as you said — the amount of time spent in user code and the amount of time spent in kernel code.

The units are seconds (and subseconds, which might be microseconds or nanoseconds).

The wall-clock time is not the number of seconds that the process has spent on the CPU; it is the elapsed time, including time spent waiting for its turn on the CPU (while other processes get to run).
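The three quantities can be observed from Python with `os.times()`. It reports user and system CPU seconds for the current process, plus an `elapsed` wall-clock reading. On Unix, `elapsed` is measured from an arbitrary fixed point in the past, so only differences are meaningful. Below is a minimal sketch of a `time`-style wrapper, assuming a Unix system; `timed` and `workload` are hypothetical names. Because the workload sleeps, wall-clock time grows while CPU time barely moves.

```python
import os
import time

def timed(fn) -> dict[str, float]:
    """Hypothetical helper: run fn() and return real (wall-clock), user and sys
    seconds, similar to what the shell's `time` reports."""
    start = os.times()
    fn()
    end = os.times()
    return {
        "real": end.elapsed - start.elapsed,
        "user": end.user - start.user,
        "sys": end.system - start.system,
    }

def workload() -> None:
    time.sleep(0.5)  # waiting: wall-clock time passes, no CPU is used
    deadline = time.process_time() + 0.2
    while time.process_time() < deadline:
        pass  # spinning: burns about 0.2 s of CPU time (user + sys)

if __name__ == "__main__":
    t = timed(workload)
    print(f"real={t['real']:.2f}s user={t['user']:.2f}s sys={t['sys']:.2f}s")
```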

@Pacerier: on a single core machine, yes, but multi-core machines and multi-threaded programs can use more than 1 CPU second per elapsed second.

@JonathanLeffler thank you for the answer. I wanted to get the number of nanoseconds that have elapsed, but calculating the CPU time using the formula CPUtime = #clock_cycles / clock_rate cannot be the same as calculating the elapsed time. Do you know if I can get the elapsed time from the CPU time?

@Bionix1441: You cannot derive elapsed time from CPU time for a number of reasons. First, a process can be idle, not consuming any CPU time, for arbitrary periods (for example, a daemon process waiting for a client to connect to it over the network), so it may do nothing for days at a time of elapsed time. Second, if it is running, it may have multiple threads, and if it has, say, 4 threads and there are 4 or more cores on the system, it might rack up 4 CPU seconds of expended effort per second of elapsed time. These show that there’s no simple (or even complex) formula that you could use.

@Catbuilts: Are you aware that the Unix kernel runs separately from user programs? When your program makes a system call (for example, read() or getpid()), the kernel executes code on behalf of your program. The kernel also handles pre-emptive multi-tasking so that other programs get their turn to run, and does some general housekeeping work to keep the system running smoothly. This code is ‘kernel code’, executed in ‘kernel mode’. It is distinct from the code you wrote and the user libraries (including the system C library) that you run.

How are CPU time and CPU usage the same?

I don’t understand how a time duration can be replaced by a percentage. When I look at top, doesn’t %CPU tell me that MATLAB is using 2.17 of my cores?

| PID | USER | PR | NI | VIRT | RES | SHR | S | %CPU | %MEM | TIME+ | COMMAND |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 18118 | jasl | 20 | 0 | 9248400 | 261528 | 78676 | S | 217.2 | 0.1 | 8:14.75 | MATLAB |

Question: In order to better understand what CPU usage is, how do I calculate the CPU usage myself?

Let Linux show you how busy each processor is with this command: mpstat -P ALL 5 3 reports the status of every processor at 5-second intervals, 3 times. Divide the %CPU reported by top by your number of cores to get the average CPU busy %. iostat -xm 5 3 will tell you how many cores/CPUs you have available.

CPU time is allocated in discrete time slices (ticks). For a certain number of time slices the CPU is busy; at other times it is not (which is represented by the idle process). In the picture below, the CPU is busy for 6 of the 10 CPU slices: 6/10 = 0.60 = 60% busy time (and therefore 40% idle time).

A percentage is defined as «a number or rate that is expressed as a certain number of parts of something divided into 100 parts». In this case, the parts are discrete slices of time, and the percentage is the ratio of busy time slices to all time slices (busy plus idle).

CPUs operate in GHz (billions of cycles a second), so the operating system slices time into much coarser units called ticks. (The slices in the picture are not really 1/10 of a second.) The tick rate in Windows is 10 million ticks per second. In Linux, CPU times are reported in units of sysconf(_SC_CLK_TCK) ticks (usually 100 ticks per second).
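On Linux, a process’s accumulated CPU time is exposed in clock ticks. For example, it appears as the `utime` and `stime` fields (fields 14 and 15) of `/proc/<pid>/stat`, and `sysconf(_SC_CLK_TCK)` gives the conversion factor to seconds. Below is a minimal Python sketch for the current process, assuming Linux; `cpu_seconds_from_stat` and `own_cpu_seconds` are hypothetical helpers. The line is split after the last `)` because the command-name field can itself contain spaces or parentheses.

```python
import os
from pathlib import Path

def cpu_seconds_from_stat(stat_line: str, ticks_per_second: int) -> tuple[float, float]:
    """Hypothetical helper: return (user, system) seconds from a /proc/<pid>/stat line."""
    # Field 2 is '(comm)' and may contain spaces, so split after the last ')'.
    after_comm = stat_line.rpartition(")")[2].split()
    # after_comm[0] is field 3 (state); field n therefore sits at index n - 3.
    utime_ticks = int(after_comm[14 - 3])
    stime_ticks = int(after_comm[15 - 3])
    return utime_ticks / ticks_per_second, stime_ticks / ticks_per_second

def own_cpu_seconds() -> tuple[float, float]:
    hz = os.sysconf("SC_CLK_TCK")  # usually 100 on Linux
    return cpu_seconds_from_stat(Path("/proc/self/stat").read_text(), hz)

if __name__ == "__main__":
    user, system = own_cpu_seconds()
    print(f"user={user:.2f}s system={system:.2f}s (ticks/s={os.sysconf('SC_CLK_TCK')})")
```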

In a tool like top, the busy CPU time is then further broken down into percentages for things like user time and system time. In top on Linux and perfmon on Windows, per-process values often go over 100%, because the maximum is 100% × the number of CPU cores.
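The arithmetic behind a per-process figure such as the 217.2% shown for MATLAB can be sketched as follows. The CPU time the process gained between two refreshes (in ticks) is divided by the wall-clock interval, so a process keeping two cores fully busy scores 200%. Dividing by the number of cores gives the average busy share of the whole machine. The function names, the sample numbers, and the default of 100 ticks per second are illustrative assumptions.

```python
import os

def process_cpu_percent(cpu_ticks_delta: int, interval_s: float,
                        ticks_per_second: int = 100) -> float:
    """top-style %CPU: CPU seconds used during the interval / interval length * 100.

    Can exceed 100% when the process runs on several cores at once.
    The default of 100 ticks/s is an assumption; os.sysconf("SC_CLK_TCK")
    gives the real value on Linux.
    """
    cpu_seconds = cpu_ticks_delta / ticks_per_second
    return 100.0 * cpu_seconds / interval_s

def machine_share_percent(process_percent: float, n_cores: int) -> float:
    """Same usage expressed as a share of the whole machine's CPU capacity."""
    return process_percent / n_cores

if __name__ == "__main__":
    # Hypothetical sample: 651 ticks of utime+stime gained over a 3-second refresh.
    pct = process_cpu_percent(651, 3.0)
    cores = os.cpu_count() or 1
    print(f"%CPU={pct:.1f} (about {pct / 100:.2f} cores busy; "
          f"{machine_share_percent(pct, cores):.1f}% of {cores} cores)")
```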

In an operating system, it is the scheduler’s job to allocate these precious slices to processes, so the scheduler is what reports this.