Why is number of open files limited in Linux?

My question is: Why is there a limit of open files in Linux?

Well, process limits and file limits are important so things like fork bombs don’t break a server/computer for all users, only the user that does it and only temporarily. Otherwise, someone on a shared server could set off a forkbomb and completely knock it down for all users, not just themselves.

@Rob, a fork bomb doesn’t have anything to do with it since the file limit is per process and each time you fork it does not open a new file handle.

3 Answers

The reason is that the operating system needs memory to manage each open file, and memory is a limited resource — especially on embedded systems.

As root you can change the maximum number of open files per process (via ulimit -n ) and for the whole system (e.g. echo 800000 > /proc/sys/fs/file-max ).
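The two limits mentioned above can be inspected side by side. This is a minimal sketch, assuming a Linux system with /proc mounted; the variable names per_process and system_wide are only illustrative.

```shell
# Per-process limit (soft value) for this shell and the processes it starts.
per_process=$(ulimit -n)

# System-wide ceiling on allocated file handles (Linux only).
if [ -r /proc/sys/fs/file-max ]; then
    system_wide=$(cat /proc/sys/fs/file-max)
else
    system_wide="unknown"   # assumption: not Linux, or /proc is not mounted
fi

echo "per-process limit: ${per_process}"
echo "system-wide limit: ${system_wide}"
```

Reading both values needs no special privileges; only raising them beyond the hard limit requires root.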

There is also a security reason : if there were no limits, a userland software would be able to create files endlessly until the server goes down.

@Coren The limits discussed here apply only to the number of open file handles. Since a program can also close file handles, it could still create as many files, and as large, as it wants until all available disk space is full. To prevent this, you can use disk quotas or separate partitions. You are right in the sense that one aspect of security is preventing resource exhaustion, and that is what limits are for.

@jofel Thanks. I guess that opened file handles are represented by instances of struct file, and size of this struct is quite small (bytes level), so can I set /. /file-max with a quite big value as long as memory is not used up?

@xanpeng I am not a kernel expert, but as far as I can see, the default for file-max seems to be RAM size divided by 10k. As the real memory used per file handler should be much smaller (size of struct file plus some driver dependent memory), this seems a quite conservative limit.

The max you can set it to is 2^63-1: echo 9223372036854775807 > /proc/sys/fs/file-max . Don’t know why Linux is using signed integers.

Please note that lsof | wc -l sums up a lot of duplicated entries (forked processes can share file handles etc). That number could be much higher than the limit set in /proc/sys/fs/file-max .

To get the current number of open files from the Linux kernel’s point of view, read /proc/sys/fs/file-nr . Its three fields are the number of allocated file handles, the number of allocated but unused file handles, and the system-wide maximum.

Example: This server has 40096 out of max 65536 open files, although lsof reports a much larger number:

    # cat /proc/sys/fs/file-max
    65536
    # cat /proc/sys/fs/file-nr
    40096 0 65536
    # lsof | wc -l
    521504

As lsof will report many files twice or more, such as /dev/null , you can try a best guess with: lsof|awk ‘

you may use lsof|awk ‘!a[$NF]++
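The file-nr fields can be turned into a single "in use" figure with a few lines of shell. This is a sketch only; the function name summarize_file_nr is hypothetical, and the sample values are the ones from the example server above.

```shell
# Summarize a file-nr style triple: allocated, unused, maximum.
summarize_file_nr() {
    # $1 = allocated handles, $2 = allocated but unused, $3 = system-wide maximum
    echo "$(( $1 - $2 )) of $3 file handles in use"
}

# Values from the example server.
summarize_file_nr 40096 0 65536

# Live values, if this is a Linux system with /proc mounted.
if [ -r /proc/sys/fs/file-nr ]; then
    read -r allocated unused maximum < /proc/sys/fs/file-nr
    summarize_file_nr "$allocated" "$unused" "$maximum"
fi
```

Subtracting the unused handles gives the kernel's own count, which is the number to compare against file-max rather than the lsof line count.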

Very old question, but I have been looking into these settings on my server: lsof | wc -l gives 40,000 while file-nr says 2300. Is that discrepancy normal?

I think it’s largely for historical reasons.

A Unix file descriptor is a small int value, returned by functions like open and creat , and passed to read , write , close , and so forth.

At least in early versions of Unix, a file descriptor was simply an index into a fixed-size per-process array of structures, where each structure contains information about an open file. If I recall correctly, some early systems limited the size of this table to 20 or so.

More modern systems have higher limits, but have kept the same general scheme, largely out of inertia.
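On Linux the per-process descriptor table described here is visible under /proc/<pid>/fd, one entry per open descriptor. The following is a rough sketch, assuming /proc is mounted; the helper name count_fds and the use of descriptor 9 (assumed to be free) are only for illustration.

```shell
# Count the entries in a process's descriptor table (Linux /proc only).
count_fds() {
    # $1 = PID to inspect; prints 0 if /proc/PID/fd cannot be listed
    ls "/proc/$1/fd" 2>/dev/null | wc -l
}

before=$(count_fds "$$")
exec 9</dev/null          # open /dev/null on descriptor 9 in this shell
after=$(count_fds "$$")
exec 9<&-                 # close descriptor 9 again

echo "open descriptors before: ${before}, after: ${after}"
```

Each successful open adds one small integer to the table, and the per-process limit caps how many such entries can exist at once.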

20 was the Solaris limit for C language FILE data structures. The file handle count was always larger.

@Lothar: Interesting. I wonder why the limits would differ. Given the fileno and fdopen functions I’d expect them to be nearly interchangeable.

A unix file is more than just the file handle (int) returned. There are disk buffers, and a file control block that defines the current file offset, file owner, permissions, inode, etc.

@ChuckCottrill: Yes, of course. But most of that information has to be stored whether a file is accessed via an int descriptor or a FILE* . If you have more than 20 files open via open() , would fdopen() fail?

How to set ulimit and file descriptors limit on Linux Servers

Introduction: Running out of open files has become a common problem in production environments. Java-based and Apache-based applications in particular can open a large number of files and file descriptors. Once a process exceeds the configured limit, it can no longer open files (typically failing with a “Too many open files” error), and the affected service may come to a standstill.

Luckily, every Linux server has the “ulimit” command, which can display and set the limit on the number of open files along with other resource limits. The following are step-by-step commands with examples explained in detail.

To see what is the present open file limit in any Linux System

To get open file limit on any Linux server, execute the following command,

    ~]# cat /proc/sys/fs/file-max
    146013

The above number is the maximum number of file handles that the kernel will allocate system-wide (146013 here); it is not a per-user or per-session limit.

On two other systems the same command returns:

    ~]# cat /proc/sys/fs/file-max
    149219

    ~]# cat /proc/sys/fs/file-max
    73906

This shows that the default differs from one Linux system to another. The kernel derives the default from the amount of memory in the machine, and it may be overridden by the distribution's boot-time configuration.

ulimit command :

As the name suggests, ulimit (user limit) displays and sets resource limits for the current shell and the processes it starts. When we run ulimit with the -a option, it prints all resource limits that apply to the logged-in user's shell. Now let’s run “ulimit -a” on Ubuntu / Debian and CentOS systems,

Ubuntu / Debian System,

    ~]$ ulimit -a
    core file size          (blocks, -c) 0
    data seg size           (kbytes, -d) unlimited
    scheduling priority             (-e) 0
    file size               (blocks, -f) unlimited
    pending signals                 (-i) 5731
    max locked memory       (kbytes, -l) 64
    max memory size         (kbytes, -m) unlimited
    open files                      (-n) 1024
    pipe size            (512 bytes, -p) 8
    POSIX message queues     (bytes, -q) 819200
    real-time priority              (-r) 0
    stack size              (kbytes, -s) 8192
    cpu time               (seconds, -t) unlimited
    max user processes              (-u) 5731
    virtual memory          (kbytes, -v) unlimited
    file locks                      (-x) unlimited

CentOS System

    ~]$ ulimit -a
    core file size          (blocks, -c) 0
    data seg size           (kbytes, -d) unlimited
    scheduling priority             (-e) 0
    file size               (blocks, -f) unlimited
    pending signals                 (-i) 5901
    max locked memory       (kbytes, -l) 64
    max memory size         (kbytes, -m) unlimited
    open files                      (-n) 1024
    pipe size            (512 bytes, -p) 8
    POSIX message queues     (bytes, -q) 819200
    real-time priority              (-r) 0
    stack size              (kbytes, -s) 8192
    cpu time               (seconds, -t) unlimited
    max user processes              (-u) 5901
    virtual memory          (kbytes, -v) unlimited
    file locks                      (-x) unlimited

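As can be seen here, the two systems report slightly different values (pending signals and max user processes in this case). Soft limits can be changed with the “ulimit” command up to the hard limit; only root can raise a hard limit.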

To display an individual resource limit, pass the corresponding option to the ulimit command. Some of the options are listed below:

- ulimit -n --> displays the limit on the number of open files

- ulimit -c --> displays the maximum size of core files

- ulimit -u --> displays the maximum number of processes for the logged-in user

- ulimit -f --> displays the maximum size of files that the shell and its child processes may write

- ulimit -m --> displays the maximum resident memory size for the logged-in user

- ulimit -v --> displays the maximum amount of virtual memory

To check the hard and soft limits on the number of open files for the logged-in user, run ulimit -Hn and ulimit -Sn respectively.
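As a minimal sketch of how the soft and hard limits relate, the snippet below reads both values and then lowers the soft limit inside a subshell only. The value 256 is an arbitrary illustration, not a recommendation.

```shell
# Read the soft and hard limits on open files for this shell.
soft=$(ulimit -Sn)
hard=$(ulimit -Hn)
echo "soft nofile limit: ${soft}"
echo "hard nofile limit: ${hard}"

# Lower the soft limit inside a subshell; the parent shell is unaffected.
( ulimit -Sn 256 2>/dev/null && echo "inside subshell: $(ulimit -Sn)" ) \
    || echo "could not lower the soft limit to 256"

echo "back in parent: $(ulimit -Sn)"
```

A limit changed with ulimit applies only to the shell that ran the command and to processes started from it afterwards, which is why the parent still reports its original value.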

How to fix the problem when limit on number of Maximum Files was reached ?

Let’s assume our Linux server has reached the maximum number of open files and we want to raise that limit system-wide, for example to 100000.

Use the sysctl command to pass the fs.file-max parameter to the kernel on the fly. Execute the command below as the root user,

    ~]# sysctl -w fs.file-max=100000
    fs.file-max = 100000

The above change stays active only until the next reboot. To make it persistent across reboots, edit the file /etc/sysctl.conf and add the same parameter,

    ~]# vi /etc/sysctl.conf
    fs.file-max = 100000

Run “sysctl -p” as root to load the settings from /etc/sysctl.conf immediately, without a logout or reboot.

Now verify whether new changes are in effect or not.

    ~]# cat /proc/sys/fs/file-max
    100000

Use below command to find out how many file descriptors are currently being utilized:

    ~]# more /proc/sys/fs/file-nr
    1216 0 100000

Note: The command “sysctl -p” reloads the settings from /etc/sysctl.conf, so the change takes effect without a reboot or logout.
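Before writing a new value it can be worth checking that the current one is not already higher, since fs.file-max=100000 would lower the limit on a system whose default is, say, 146013. This is a small sketch; the helper name needs_raise is hypothetical, and 100000 is simply the target used in this guide.

```shell
# Succeeds (exit status 0) when the current value ($1) is below the wanted value ($2).
needs_raise() {
    [ "$1" -lt "$2" ]
}

wanted=100000
# Fall back to 0 when the file is unavailable (assumption: non-Linux or restricted environment).
current=$(cat /proc/sys/fs/file-max 2>/dev/null || echo 0)

if needs_raise "$current" "$wanted"; then
    echo "fs.file-max is ${current}; as root run: sysctl -w fs.file-max=${wanted}"
else
    echo "fs.file-max is already ${current}; no change needed"
fi
```

The script only prints a suggestion and never changes the setting itself, so it is safe to run as an unprivileged user.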

Set User level resource limit via limits.conf file

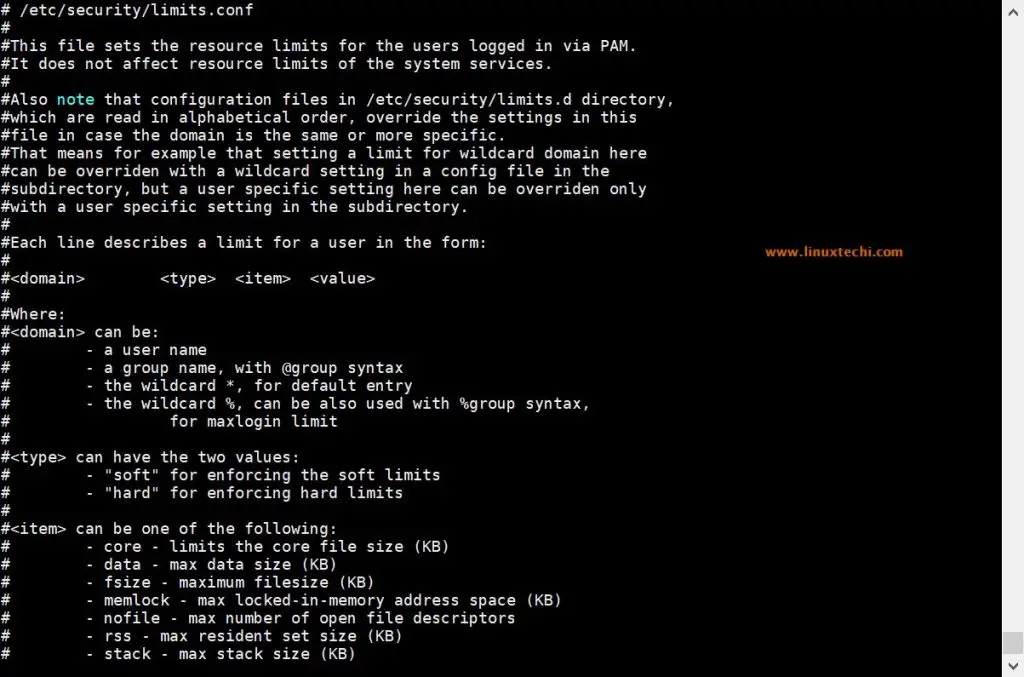

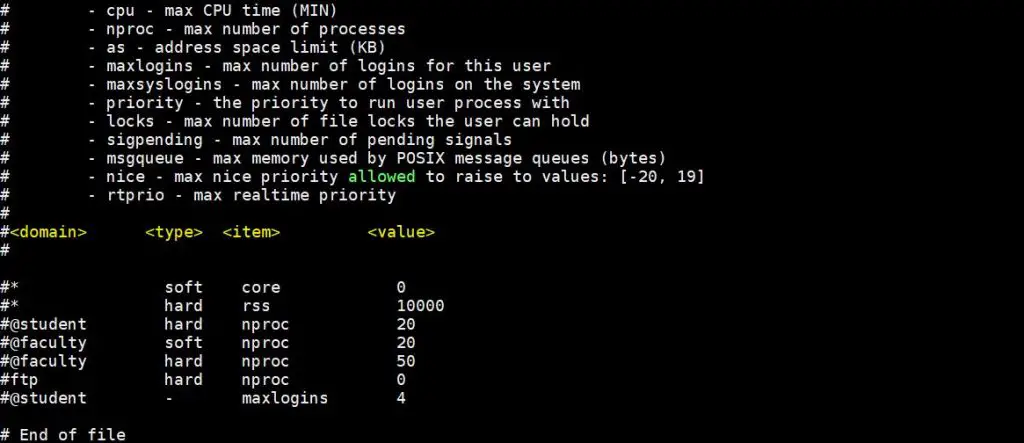

The “/etc/sysctl.conf” file sets the open file limit system-wide. To set resource limits for a specific user, such as the accounts that run Oracle, MariaDB or Apache, use the “/etc/security/limits.conf” file.

Each entry in limits.conf has four fields: the domain (a user name or @group), the type (soft or hard), the item (for example nofile or nproc) and the value.

Let’s assume we want to set hard and soft limits on the number of open files for the linuxtechi user, and hard and soft limits on the number of processes for the oracle user. Edit the file “/etc/security/limits.conf” and add the following lines

    # hard limit for max opened files for linuxtechi user
    linuxtechi hard nofile 4096
    # soft limit for max opened files for linuxtechi user
    linuxtechi soft nofile 1024
    # hard limit for max number of process for oracle user
    oracle hard nproc 8096
    # soft limit for max number of process for oracle user
    oracle soft nproc 4096

Note: To put a resource limit on a group instead of a user, use the same limits.conf file and write @ followed by the group name in place of the user name. The rest of the fields stay the same. An example is shown below,

    # hard limit for max opened files for sysadmin group
    @sysadmin hard nofile 4096
    # soft limit for max opened files for sysadmin group
    @sysadmin soft nofile 1024

Verify whether new changes are in effect or not,

    ~]# su - linuxtechi
    ~]$ ulimit -n -H
    4096
    ~]$ ulimit -n -S
    1024
    ~]# su - oracle
    ~]$ ulimit -H -u
    8096
    ~]$ ulimit -S -u
    4096
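limits.conf is applied by pam_limits when a session starts, so a process that was already running keeps the limits it started with. On Linux the limits of a running process can be read from /proc/<pid>/limits. The sketch below assumes the usual layout of that file; the function name parse_nofile is hypothetical.

```shell
# Extract the soft and hard "Max open files" values from /proc/<pid>/limits content on stdin.
parse_nofile() {
    awk '/^Max open files/ { print "soft=" $4 " hard=" $5 }'
}

# Limits of the current shell, if /proc is available.
if [ -r "/proc/$$/limits" ]; then
    parse_nofile < "/proc/$$/limits"
fi
```

To inspect a service instead of the current shell, feed the function /proc/<pid>/limits for that service's PID; reading another user's process may require root.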

Note: Another commonly used command is “lsof”, which lists the files that are currently open. This command is very helpful for admins.

As mentioned in the introduction, the “ulimit” command is a powerful way to inspect and adjust resource limits so that applications run without hitting them. Together with fs.file-max and limits.conf, it helps fix many open-file limit problems on Linux-based servers.

4 thoughts on “How to set ulimit and file descriptors limit on Linux Servers”

cat /proc/sys/fs/file-max shows 146013, but ulimit -a shows:

open files (-n) 1024

What is the difference?

cat /proc/sys/fs/file-max --> shows the system-wide limit on the number of open files

ulimit -a --> shows all the resource limits of a specific user

hello, ok so do you mean that if I set a value like this it will be added to limits.conf?

ulimit -f 65000

Hi David, User level limits are configured via ‘/etc/security/limits.conf’ file. Example is shown in above guide.
